- Zohoorian-Fooladi, N., & Abrizah, A. A. (2014). Academic librarians and their social media presence: a story of motivations and deterrents. Information Development, 30(2), 159-171.

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dllf%26AN%3d95801671%26site%3deds-live%26scope%3dsite

Librarians also believed that social media tools are suitable not only to communicate with users but also

to facilitate the interaction of librarians with each other by creating librarian groups. (p. 169)

- Collins, G., & Quan-Haase, A. (2014). Are Social Media Ubiquitous in Academic Libraries? A Longitudinal Study of Adoption and Usage Patterns. Journal Of Web Librarianship, 8(1), 48-68. doi:10.1080/19322909.2014.873663

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3drzh%26AN%3d2012514657%26site%3deds-live%26scope%3dsite

- Reynolds, L. M., Smith, S. E., & D’Silva, M. U. (2013). The Search for Elusive Social Media Data: An Evolving Librarian-Faculty Collaboration. Journal Of Academic Librarianship, 39(5), 378-384. doi:10.1016/j.acalib.2013.02.007

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3daph%26AN%3d91105305%26site%3deds-live%26scope%3dsite

- Chawner, B., & Oliver, G. (2013). A survey of New Zealand academic reference librarians: Current and future skills and competencies. Australian Academic & Research Libraries, 44(1), 29-39. doi:10.1080/00048623.2013.773865

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3daph%26AN%3d94604489%26site%3deds-live%26scope%3dsite

- Lilburn, J. (2012). Commercial Social Media and the Erosion of the Commons: Implications for Academic Libraries. Portal: Libraries And The Academy, 12(2), 139-153.

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3deric%26AN%3dEJ975615%26site%3deds-live%26scope%3dsite

The general consensus emerging to date is that the Web 2.0 applications now widely used in academic libraries have given librarians new tools for interacting with users, promoting services, publicizing events and teaching information literacy skills. We are, by now, well versed in the language of Web 2.0. The 2.0 tools – wikis, blogs, microblogs, social networking sites, social bookmarking sites, video or photo sharing sites, to name just a few – are said to be open, user-centered, and to increase user engagement, interaction, collaboration, and participation. Web 2.0 is said to “empower creativity, to democratize media production, and to celebrate the individual while also relishing the power of collaboration and social networks.”4 All of this is in contrast with what is now viewed as the static, less interactive, less empowering pre-Web 2.0 online environment. (p. 140)

Taking into account the social, political, economic, and ethical issues associated with Web 2.0, other scholars raise questions about the generally accepted understanding of the benefits of Web 2.0. (p. 141)

- The decision to integrate commercial social media into existing library services seems almost inevitable, if not compulsory. Yet, research that considers the short- and long-term implications of this decision remains lacking. As discussed in the sections above, where and how institutions choose to establish a social media presence is significant. It confers meaning. Likewise, the absence of a presence can also confer meaning, and future p. 149

- Nicholas, D., Watkinson, A., Rowlands, I., & Jubb, M. (2011). Social Media, Academic Research and the Role of University Libraries. Journal Of Academic Librarianship, 37(5), 373-375. doi:10.1016/j.acalib.2011.06.023

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dedselc%26AN%3dedselc.2-52.0-80052271818%26site%3deds-live%26scope%3dsite

- Brown, K., Lastres, S., & Murray, J. (2013). Social Media Strategies and Your Library. Information Outlook, 17(2), 22-24.

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dkeh%26AN%3d89594021%26site%3deds-live%26scope%3dsite

Establishing an open leadership relationship with these stakeholders necessitates practicing five rules of open leadership: (1) respecting the power that your patrons and employees have in their relationship with you and others, (2) sharing content constantly to assist in building trust, (3) nurturing curiosity and humility in yourself as well as in others, (4) holding openness accountable, and (5) forgiving the failures of others and yourself. The budding relationships that will flourish as a result of applying these rules will reward each party involved.

Whether you intend it or not, your organization’s leaders are part of your audience. As a result, you must know your organization’s policies and practices (in addition to its people) if you hope to succeed with social media. My note: so, if one defines a very narrow[sided] policy, then the entire social media enterprise is….

Third, be a leader and a follower. My note: not a Web 1.0 – type of control freak, where content must come ONLY from you and be vetoed by you!

All library staff have their own login accounts and are expected to contribute to and review

- Dority Baker, M. L. (2013). Using Buttons to Better Manage Online Presence: How One Academic Institution Harnessed the Power of Flair. Journal Of Web Librarianship, 7(3), 322-332. doi:10.1080/19322909.2013.789333

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dlxh%26AN%3d90169755%26site%3deds-live%26scope%3dsite

This project was a partnership between the Law College Communications Department, Law College Administration, and the Law Library, involving law faculty, staff, and librarians.

- Van Wyk, J. (2009). Engaging academia through Library 2.0 tools: a case study: Education Library, University of Pretoria.

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dedsoai%26AN%3dedsoai.805419868%26site%3deds-live%26scope%3dsite

- Paul, J., Baker, H. M., & Cochran, J. (2012). Effect of online social networking on student academic performance. Computers In Human Behavior, 28(6), 2117-2127. doi:10.1016/j.chb.2012.06.016

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dkeh%26AN%3d79561025%26site%3deds-live%26scope%3dsite

#SocialMedia: students place a higher value on the technologies their instructors use effectively in the classroom. The study reports a negative impact of social media usage on academic performance. Rather CONSERVATIVE conclusions.

Students should be made aware of the detrimental impact of online social networking on their potential academic performance. In addition to recommending changes in social networking related behavior based on our study results, findings with regard to relationships between academic performance and factors such as academic competence, time management skills, attention span, etc., suggest the need for academic institutions and faculty to put adequate emphasis on improving the student’s ability to manage time efficiently and to develop better study strategies. This could be achieved via workshops and seminars that familiarize and train students to use new and intuitive tools such as online calendars, reminders, etc. For example, online calendars are accessible in many devices and can be setup to send a text message or email reminder of events or due dates. There are also online applications that can help students organize assignments and task on a day-to-day basis. Further, such workshops could be a requirement of admission to academic programs. In the light of our results on relationship between attention span and academic performance, instructors could use mandatory policies disallowing use of phones and computers unless required for course purposes. My note: I completely disagree with this recommendation: it can be argued that instructors must make their content delivery more engaging, so that electronic devices will not be used for distraction.

- Mangan, K. (2012). Social Networks for Academics Proliferate, Despite Some Doubts. Chronicle Of Higher Education, 58(35), A20.

http://eds.b.ebscohost.com/eds/detail?vid=5&sid=bbba2c7a-28a6-4d56-8926-d21572248ded%40sessionmgr114&hid=115&bdata=JnNpdGU9ZWRzLWxpdmUmc2NvcGU9c2l0ZQ%3d%3d#db=f5h&AN=75230216

Academia.edu

While Mendeley’s users tend to have scientific backgrounds, Zotero offers similar technical tools for researchers in other disciplines, including many in the humanities. The free system helps researchers collect, organize, share, and cite research sources.

“After six years of running Zotero, it’s not clear that there is a whole lot of social value to academic social networks,” says Sean Takats, the site’s director, who is an assistant professor of history at George Mason University. “Everyone uses Twitter, which is an easy way to pop up on other people’s radar screens without having to formally join a network.”

- Beech, M. (2014). Key Issue – How to share and discuss your research successfully online. Insights: The UKSG Journal, 27(1), 92-95. doi:10.1629/2048-7754.142

http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3dlxh%26AN%3d94772771%26site%3deds-live%26scope%3dsite

The article discusses the dissemination of academic research over the internet and presents five tenets to engage the audience online. It comments on targeting an audience for the research and suggests the online social networks Twitter, LinkedIn, and ResearchGate as venues. It talks about the need to relate work with the target audience and examines the use of storytelling and blogs. It mentions engaging in online discussions and talks about open access research.

For those students who hate group work

Mary Bart, Editor, Faculty Focus

“I’d really rather work alone. . .” Most of us have heard that from a student (or several students) when we assign a group project, particularly one that’s worth a decent amount of the course grade. It doesn’t matter that the project is large,…

Do student evaluations measure teaching effectiveness?

Mauricio Vasquez, Ph.D., Assistant Professor in MIS

Higher Education institutions use course evaluations for a variety of purposes. They factor in retention analysis for adjuncts, tenure approval or rejection for full-time professors, even in salary bonuses and raises. But, are the results of course evaluations an objective measure of high quality scholarship in the classroom?

Campus Technology, a leading periodical in the use of technology in education, lists for consideration the 2014 technology trends for education:

- Mobile Platforms and Bring Your Own Device (BYOD)

- Adaptive Learning (personalization of online learning)

- Big Data (predictive analysis)

- Flipped Classroom

- Badges and Gamification (assessment and evaluation)

- iPads and Other Tablets (mobile devices)

- Learning Management Systems (on SCSU campus – D2L)

The Journal has a similar list:

- BYOD (trend going up)

- Social Media as a Teaching and Learning Tool (trend going up)

- Digital Badges (split vote: some of the experts expect to see the use of badges and gamification as soon as 2014, while others think adoption will take longer)

- Open Educational Resources (split vote: while the future of OER is recognized, the initial investment needed will take time)

- Desktop Computers (trend going down; every market shows a decline in the purchase of desktop computers)

- iPads (trend going up)

- ePortfolios (trend going down)

- Learning Management Systems, on SCSU campus – D2L (split vote). An LMS is useful for the flipped classroom, and hybrid and online education use a CMS, but gradual consolidation stifles competition

- Learning Analytics, Common Core (trend going up)

- Game-Based Learning (split vote: the gaming industry is still not at the point of creating engaging educational games)

Regarding computer operating systems (OS):

- Windows (trend going down)

- Apple / Mac OS X (split vote)

- iOS (iPhone, iPad, etc.) (trend going up)

- Android (trend going up)

The materials in these two articles are consistent with other reports as reflected in our IMS blog:

IMS offers an extensive number of instructional sessions on social media, D2L and other educational technologies:

Please email us with any other suggestions, ideas and requests regarding instructional technology and instructional design at:

ims@stcloudstate.edu

Stoodle is a web application which allows you, without any download or registration, to create a quick classroom space. By sharing the URL of the classroom, you can invite other participants. You can use a microphone or text-based chat, and upload files (images) to discuss.

24 Good iPad Math Apps for Elementary Students

http://www.educatorstechnology.com/2013/12/24-good-ipad-math-apps-for-elementary.html

1- Motion Math

Feed your fish and play with numbers! Practice mental addition and subtraction with Motion Math: Hungry Fish, a delightful learning game that’s fun for children and grownups.

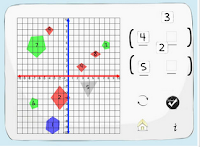

2- Geoboard

The Geoboard is a tool for exploring a variety of mathematical topics introduced in the elementary and middle grades. Learners stretch bands around pegs to form line segments and polygons and make discoveries about perimeter, area, angles, congruence, fractions, and more.

3- Math Vs Zombies

The world is overrun with zombies. You are a part of a squad of highly trained scientists who can save us. Using your math skills and special powers you can treat infected zombies to contain the threat.

4- Mystery Math Town

It’s part math drills, part seek and find game and totally engaging. Kids ages five and up should find this both fun and challenging. Parents should rejoice that finally there is a way to get kids to want to do more math. – Smart Apps for Kids

5- Montessori Numbers

Montessori Numbers offers a sequence of guided activities that gradually help children reinforce their skills. Each activity offers several levels of increasing complexity

6- Free Kids Counting Game

FREE and fun picture math games for kids designed by the iKidsPad team. This free iPad math app dynamically generates thousands of beginning counting games with different themes and number levels. Great interactive and challenging games help young children build up basic counting skills and number recognition.

7- Math Puppy

Math Puppy will take you on a journey of educational fun like never before!

From toddlers to grade school, for Children of all ages – Math Puppy is the perfect way to build up your math skills. Your child will be able to enjoy a constructive, supportive, interactive fun filled environment while mastering the arts of basic math.

8- Mathmateer

While your rocket is floating weightlessly in space, the real fun begins! Play one of the many fun math missions. Each mission has touchable objects floating in space, including stars, coins, 3D shapes and more! Earn a bronze, silver or gold medal and also try to beat your high score. Missions range in difficulty from even/odd numbers all the way to square roots, so kids and their parents will enjoy hours of fun while learning math.

9- Math Ninja

Use your math skills to defend your treehouse against a hungry tomato and his robotic army in this fun action packed game! Choose between ninja stars, smoke bombs, or ninja magic – and choose your upgrades wisely!

10- Everyday Mathematics

The Equivalent Fractions game by McGraw Hill offers a quick and easy way to practice and reinforce fraction concepts and relationships. This game runs on the iPad, iPhone, and iPod Touch.

11- Elementary School Math

Based on the classroom hit Middle School Math HD, Elementary School Math HD is a stunningly beautiful and powerfully engaging application built for today’s technology-driven elementary school classroom. Emphasizing game-playing and skill development, the eight modules in Elementary School Math HD have been carefully designed by classroom teachers to provide the perfect balance between fun and the practice of fundamental skills

12- Math Tappers

MathTappers: Multiples is a simple game designed first to help learners to make sense of multiplication and division with whole numbers, and then to support them in developing fluency while maintaining accuracy.

13- Ordered Fractions

Two Player Bluetooth Math Game! You can use two devices and play competitively or cooperatively with your classmates or parents. Ordered Fractions provides a comprehensive tool that offers an innovative method of learning about comparing and ordering fractions.

14- SlateMath

SlateMath is an iPad app that develops mathematical intuition and skills through playful interaction. The app’s 38 activities prepare children for kindergarten and first grade math. SlateMath forms the foundation of numbers, digit writing, counting, addition, order relation, patterns, parity and problem solving.

15- NumberStax

Number Stax is a puzzle game to test your number skills! Drop numbers and operators in the correct places to match the number or expression shown at the top of the screen to score. You can’t remove tiles but you can swap them around. You can freeze the game at any time, but remember to watch the clock! Eliminate tiles, score points, and earn bonuses and achievements for as long as possible until your grid is full! Remember, the longer you play the faster it gets. Share with your family and friends to see who can get the highest score.

16- Grid Drawing

With a sheet of paper, a grid and a template, your children will be able to draw 32 drawings on iPad, 16 on the iPhone in the Lite version, more than 120 (60 on iPhone) in the full version.

17- Motion Math

Developed at the Stanford School of Education, Motion Math HD follows a star that has fallen from space, and must bound back up, up, up to its home in the stars. Moving fractions to their correct place on the number line is the only way to return. By playing Motion Math, learners improve their ability to perceive and estimate fractions in multiple forms.

18- 5th Grade Math

Splash Math is a fun and innovative way to practice math. With 9 chapters covering an endless supply of problems, it is by far the most comprehensive math workbook in the app store.

19- Sushi Monster

Meet Sushi Monster! Scholastic’s new game to practice, reinforce, and extend math fact fluency is completely engaging and appropriately challenging.

20- Math Monsters

Math Monsters Bingo is a new, fun way to master math on your iPhone, iPad and iPod touch. The game lets you practice math anytime and anywhere using a fun Bingo styled game play.

21- Grid Lines

Grid Lines is a Battleship-style math game used to teach students the coordinate plane by plotting points in all four quadrants.

22- Marble Math

Teacher’s Guide to Socrative 2.0

http://www.educatorstechnology.com/2013/12/teachers-guide-to-socrative-2o.html

Socrative is a smart student response system that empowers teachers to engage their classrooms through a series of educational exercises and games via smartphones, laptops, and tablets. Socrative is designed to help teachers make classes more engaging and interactive. It also helps teachers initiate activities and prompt students with questions to which students can respond using their laptops or smartphones. The good thing about Socrative is that it can run on any kind of device with an internet connection (iPads, iPods, laptops, smartphones), so students will never miss out on any learning activity.

From: Miltenoff, Plamen

Sent: Wednesday, November 20, 2013 4:09 PM

To: ‘technology@lists.mnscu.edu’; ‘edgamesandsims@lists.mnscu.edu’

Cc: Oyedele, Adesegun

Subject: virtual worlds and simulations

Good afternoon

Apologies for any cross posting…

Following a request from fellow faculty at SCSU, I am interested in learning more about any opportunities for using virtual worlds and simulations [in the MnSCU system] for teaching and learning purposes.

The last I remember was a rather messy divorce between academia and Second Life (the latter accusing an educational institution of harboring SL hackers). Around that time, MnSCU dropped their SL support.

Does anybody have an idea where faculty can get low-cost if not free access to virtual worlds? Any alternatives for other simulation exercises?

Any info/feedback will be deeply appreciated.

Plamen

After Frustrations in Second Life, Colleges Look to New Virtual Worlds. February 14, 2010

—–Original Message—–

From: Weber, James E.

Sent: Wednesday, November 20, 2013 5:41 PM

To: Miltenoff, Plamen

Subject: RE: virtual worlds and simulations

Hi Plamen:

I don’t use virtual worlds, but I do use a couple of simulations…

I use http://www.glo-bus.com/ extensively in my strategy class. It is a primary integrating mechanism for this capstone class.

I also use http://erpsim.hec.ca/en because it uses and illustrates SAP and process management.

http://www.goventure.net/ is one I have been looking into. Seems more flexible…

Best,

Jim

From: brock.dubbels@gmail.com [mailto:brock.dubbels@gmail.com] On Behalf Of Brock Dubbels

Sent: Wednesday, November 20, 2013 4:29 PM

To: Oyedele, Adesegun

Cc: Miltenoff, Plamen; Gaming and Simulations

Subject: Re: virtual worlds and simulations

That is fairly general

what constitutes programming skill is not just coding, but learning icon-driven actions and logic in a menu

for example, Sketch Up is free. You still have to learn how to use the interface.

there is drag and drop game software, but this is not necessarily a share simulation

From: Kalyvaki, Maria [mailto:Maria.Kalyvaki2@smsu.edu]

Sent: Wednesday, November 20, 2013 4:26 PM

To: Miltenoff, Plamen

Subject: RE: virtual worlds and simulations

Hi,

I received this email today and I am happy that someone is interested in Second Life. The Second Life platform and some other virtual worlds are free to use. Depending on your expectations, the cost of using the virtual world may increase. I am using some of those virtual worlds, and my previous school, Texas Tech University, was using SL for a course.

Let me know how I could help you with the virtual worlds.

With appreciation,

Maria

From: Jane McKinley [mailto:Jane.McKinley@riverland.edu]

Sent: Thursday, November 21, 2013 11:09 AM

To: Miltenoff, Plamen

Cc: Jone Tiffany; Pamm Tranby; Dan Harber

Subject: Virtual worlds

Hi Plamen,

To introduce myself I am the coordinator/ specialist for our real life allied health simulation center at Riverland Community College. Dan Harber passed your message on to me. I have been actively working in SL since 2008. My goal in SL was to do simulation for nursing education. I remember when MnSCU had the island. I tried contacting the lead person at St. Paul College about building a hospital on the island for nursing that would be open to all MN programs, but never could get a response back.

Yes, SL did take the education fees away for a while but they are now back. Second Life is free in and of itself; it is finding islands with educational simulations that takes time to explore, but many are free and open to the public. I do have a list of islands that may be of interest to you. They are all health related, but there are science islands such as Genome Island. As a matter of fact, there is a talk out there tonight at 7:00 our time about how to do research and conduct fair experiments.

I have been lucky to find someone with the same goals as I have. Her name is Jone Tiffany. She is a professor at Bethel University in the nursing program. In the last 4 years we have built an island for nursing education. This consists of a hospital, clinic, office building, classrooms and a library. We also built a simulation center. (Although I accidentally removed the floor and some walls in it. Our builder is getting it back together.) There is such a shortage of real mental health and public health sites that a second island is being purchased to meet this request. On that island we are going to build an inner city, urban and rural communities. This will be geared towards meeting those requests. Our law enforcement program at Riverland has voiced an interest in SL with being able to set up virtual crime scenes which could be staged anywhere on the two islands. With the catastrophic natural events and terrorist activities that have occurred recently we will replicate these same communities on the other side of the island only it will be the aftermath of a hurricane and tornado, or flooding. On the other side we could stage the aftermath of a bombing such as what happened in Boston. Victims could be transported to the hospital ED. Law enforcement could do an investigation.

We have also been working with the University of Wisconsin Oshkosh. They have a plane crash simulation and what we call a grunge house that students go into to see what the living conditions are like for those who live in poverty and what could be done about it.

Since I am not faculty I cannot take our students out to SL, but Jone has had well over 100 of her students in there doing various assignments. She is taking more out this semester. They have done such things as family health assessments and diabetes assessment and have to create a plan of care. She has done lectures out there. So the students come out with their avatars and sit in a classroom. This is a way distance learning can be done but yet be engaged with the students. The beauty of SL is that you can be creative. Since the island is called Nightingale Isle, some of the builds are designed with that theme in mind. Such as the classrooms, they are tiered up a mountain and look like the remains of a bombed out church from the Crimean War, it is one of our favorite spots. We also have an area open on the island for support groups to meet. About 5 years ago Riverland did do a congestive heart failure simulation with another hospital in SL. That faculty person unfortunately has left so we have not been able to continue it, but the students loved it. We did the same scenario with Jone’s students in the sim center we have and again the students loved it.

The island is private but anyone is welcome to use it. We do this so that we know and can control who is on the island. All that is needed is to let Jone or me know who you are, where you are from (institution), and what your avatar name is. We will friend you in SL and invite you to join the group, then you have access to the island. Both Jone and I are always eager to share what all goes on out there (as you can tell by this e-mail). There is so much potential of what can be done. We have been lucky to be able to hire the builder who builds for the Mayo Clinic. Their islands are next to ours. She replicated the Gonda Building including the million dollar plus chandeliers.

I can send you the list of the health care related islands, there are about 40 of them. I also copied Jone, she can give you more information on what goes into owning an island. We have had our ups and downs with this endeavor but believe in it so much that we have persevered and have a beautiful island to show for it.

Let me know if you want to talk more.

Jane (aka Tessa Finesmith-avatar name)

Jane McKinley, RN

College Lab Specialist -Riverland Center for Simulation Learning

Riverland Community College

Austin, MN 55912

jane.mckinley@riverland.edu

507-433-0551 (office)

From: Jeremy Nienow [mailto:JNienow@inverhills.mnscu.edu]

Sent: Thursday, November 21, 2013 10:11 AM

To: Miltenoff, Plamen

Cc: Sue Dion

Subject: Teaching in virtual worlds

Hello,

A friend here at IHCC sent me your request for information on teaching in low-cost virtual environments.

I like to think of myself on the cusp of gamification and I have a strong background in gaming in general (being a white male in my 30s).

Anyway – almost every MMORPG (massively multiplayer online role-playing game) today is set up on a free-to-play platform for its inhabitants.

There are maybe a dozen of these out there right now, from Dungeons & Dragons Online, to Tera, to Neverwinter Nights… etc.

It’s free to download, with no subscription fee (like there used to be), and it’s free to play. They make their money by charging for game items and cool aspects of the game… people pay for the privilege of leveling faster.

So – you could easily have all your students download the game (provided they all have a suitable system and internet access), make an avatar, start in the same place – and teach right from there.

I have thought of doing this for an all online class before, but wanted to wait till I was tenured.

Best,

Jeremy L. Nienow, PhD., RPA

Anthropology Faculty

Inver Hills Community College

P.S. Landon Pirius (sp?) who was once at IHCC and now I believe is at North Hennepin maybe… wrote his PhD on teaching in online environments and used World of Warcraft.

From: Gary Abernethy [mailto:Gary.Abernethy@minneapolis.edu]

Sent: Thursday, November 21, 2013 8:46 AM

To: Miltenoff, Plamen

Subject: Re: [technology] virtual worlds and simulations

Plamen,

Below are the current options I am aware of for VW and SIM. You may also want to take a look at Kuda, in Google Code; I worked at SRI when we developed this tool. I am interested in collaboration in this area.

Hope the info helps

https://www.activeworlds.com/index.html

http://www.opencobalt.org/

http://opensimulator.org/wiki/Main_Page

http://metaverse.sourceforge.net/

http://stable.kuda.googlecode.com

Gary Abernethy

Director of eLearning

Academic Affairs

Minneapolis Community and Technical College | 1501 Hennepin Avenue S. | Minneapolis, MN 55403

Phone 612-200-5579

Gary.Abernethy@minneapolis.edu | http://www.minneapolis.edu

From: John OBrien [mailto:John.OBrien@so.mnscu.edu]

Sent: Wednesday, November 20, 2013 11:37 PM

To: Miltenoff, Plamen

Subject: RE: virtual worlds and simulations

I doubt this is so helpful, but maybe: http://wiki.secondlife.com/wiki/SLED

Top 10 Social Media Management Tools

http://socialmediatoday.com/daniel-zeevi/1344346/top-10-social-media-management-tools

HootSuite is the most popular social media management tool for people and businesses to collaboratively execute campaigns across multiple social networks like Facebook and Twitter from one web-based dashboard. Hootsuite has become an essential tool for managing social media, tracking conversations and measuring campaign results via the web or mobile devices. Hootsuite offers a free, pro and enterprise solution for managing unlimited social profiles, enhanced analytics, advanced message scheduling, Google Analytics and Facebook insights integration.

My note: HS is worth considering because of the add-ons for Firefox and Chrome and the Hootlet

Notes from a phone conversation with Robert Fougner

Enterprise Development Representative | HootSuite

778-300-1850 Ex 4545 robert.fougner@hootsuite.com

Jeff Woods with SCSU Communications does NOT use HS, and neither does Tom Nelson with SCSU Athletics. Two options: HS Pro and HS Enterprise. HS Pro: $10/m. Allows two users and statistical output once per month. Up to 50 social media accounts (list under App Directory). The 50 SM accounts can be used not only for disseminating information or streamlining its reception and digestion, but also for analytics from other services (it can even include Google Analytics), as well as for repositories (e.g., articles, images, etc.) on other cloud services (e.g., Dropbox, Evernote, etc.). Each additional user account costs another $10/m, and the cost keeps going up until the HS Enterprise option becomes preferable.
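The pricing rule from the phone conversation can be sketched as a small calculation. This is only an illustration of the arithmetic in the notes: it assumes the $10/m base covers the two included users and that every further user adds $10/m. The Enterprise price was not quoted in the call, so `enterprise_monthly_price` is a hypothetical input, and both function names are made up for this sketch.

```python
def hs_pro_monthly_cost(users, base_price=10, included_users=2, extra_user_price=10):
    """Monthly HS Pro cost in dollars for a team of `users` people.

    Defaults follow the phone notes: $10/m, two users included,
    $10/m for each additional user (assumed reading of the notes).
    """
    if users < 1:
        raise ValueError("users must be at least 1")
    extra_users = max(0, users - included_users)
    return base_price + extra_users * extra_user_price


def break_even_users(enterprise_monthly_price, **pro_pricing):
    """Smallest team size at which HS Pro costs at least as much as Enterprise.

    `enterprise_monthly_price` is hypothetical; the notes do not give one.
    """
    users = 1
    while hs_pro_monthly_cost(users, **pro_pricing) < enterprise_monthly_price:
        users += 1
    return users
```

For example, a five-person group would pay $40/m under these assumptions, which is the kind of figure to compare against an actual Enterprise quote.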

HS has integration with most of the prominent SM tools

HS has social media coaches, who can help not only with the technicalities of using HS but with brainstorming ideas for creative application of HS

HS has HS University, which deals with classroom instructors.

Buffer is a smart and easy way to schedule content across social media. Think of Buffer like a virtual queue you can use to fill with content and then stagger posting times throughout the day. This lets you keep to a consistent social media schedule all week long without worrying about micro-managing the delivery times. The Bufferapp also provides analytics about the engagement and reach of your posts.
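The "virtual queue" idea can be sketched in a few lines. This is not Buffer's API or its actual algorithm, only a minimal illustration of filling a queue and staggering it over fixed daily posting times; the function name `stagger` and its parameters are hypothetical.

```python
from datetime import date, datetime, time, timedelta


def stagger(posts, slots, start_day):
    """Assign queued posts, in order, to daily posting slots.

    posts: list of post texts (the queue)
    slots: list of datetime.time values, the posting times for each day
    start_day: datetime.date of the first posting day
    When a day's slots are used up, the queue rolls over to the next day.
    """
    if not slots:
        raise ValueError("at least one posting slot is required")
    schedule = []
    for position, post in enumerate(posts):
        day_offset, slot_index = divmod(position, len(slots))
        when = datetime.combine(start_day + timedelta(days=day_offset), slots[slot_index])
        schedule.append((when, post))
    return schedule
```

With two slots per day (say 9:00 and 15:00), a queue of three posts fills the first day and places the third post at 9:00 on the following day.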

My notes: power user – $10/m, business – $50/m. Like HS, it can manage several Twitter, FB, and LinkedIn accounts. Does NOT support G+.

According to Mary Janitsch http://twitter.com/marycjantsch hello@bufferapp.com

Top 10 Social Media Management Tools: beyond Hootsuite and TweetDeck

“Buffer is designed more as a layer on top of whatever tools you already use, we see a lot of customers use both together very easily”

According to http://blog.bufferapp.com/introducing-buffer-for-business-the-most-simple-powerful-social-media-tool-for-your-business:

25 accounts / 5 members = $50/m

According to blog note at http://www.socialmediaexaminer.com/13-tools-to-simplify-your-social-media-marketing/, Time.ly (http://time.ly/) is similar to Buffer, but free.

Buffer integration to Google Reader

What’s the difference between Hootsuite and Bufferapp?

Hootsuite provides a more complete solution that allows you to schedule updates and monitor conversations, whereas Buffer isn’t a dashboard that shows you other people’s content. However, Bufferapp has superior scheduling flexibility over Hootsuite because you can designate very specific scheduling times and change patterns throughout the week. Hootsuite recently introduced an autoschedule feature that automatically designates a scheduling time based on a projected best time to post. This can be effective to use, but doesn’t have the same flexibility as Buffer since you don’t really know when a post will be scheduled till after doing so. What’s the right solution for you? Many people use both Hootsuite (to listen) and Bufferapp (to schedule), including me, and it really depends on your posting needs. In my opinion though, if Hootsuite were to introduce more scheduling options this could spell trouble for Buffer! But then again, Buffer could be working on some cool new dashboard that would rival Hootsuite’s offering; only time will tell.

SocialOomph is a neat web tool that provides a host of free and paid productivity enhancements for social media. You can do a lot with the site which includes functions for Facebook, Twitter, LinkedIn, Plurk and your blog. There are a ton of useful Twitter features like scheduling tweets, tracking keyword, viewing mentions and retweets, DM inbox cleanup, auto-follow and auto-DM features for new followers. Social Oomph will auto-follow any new follower of yours on Twitter if you like, which could save you a ton of time if you normally like to reciprocate follows. Social Oomph is so effective at increasing social media productivity that I use the site every day but haven’t had any reason to actually log in there since last year!

My notes: Canadian company. Started with Twitter, expanded to FB and LinkedIn, and keeps expanding (blogs). Here are the Pro/Free features: http://www.bloggingwizard.com/social-oomph-review/

For the paid option only: submit social updates via email, blog posts. TweetCockPIT for managing several accounts, unlimited Twitter accounts. FB, LinkedIn

$27.26 Monthly http://blinklist.com/reviews/socialoomph

Hootsuite Vs SocialOomph http://bluenotetechnologies.com/2013/04/25/hootsuite-vs-socialoomph/ – FOR SO

Hootsuite Vs SocialOomph http://sazbean.com/2009/12/10/review-hootsuite-vs-socialoomph/ – FOR HS

More + reviews and features for SO – http://www.itqlick.com/Products/6643: As a start-up organisation, if you want to keep your cost low and manage social media, SocialOomph can be your best choice as you can use it for free for a stipulated time – see also the pros and cons

Tweetdeck is a web and desktop solution to monitor and manage your Twitter feeds with powerful filters to focus on what matters. You can also schedule tweets and stay up to date with notification alerts for new tweets. Tweetdeck, which was purchased by Twitter, is available for Chrome browsers, as well as Windows and Mac desktops. Recently they closed down their mobile apps to re-shift focus on the web and desktop platforms.

My notes: I abandoned TD for HS about a year ago because of the same problem: no mobile app. Also, TweetDeck deals only with Twitter accounts, not other social media.

Tweepi is a unique management tool for Twitter that lets you flush unfollowers, cleanup inactives, reciprocate following and follow interesting new tweeps! The pro version allows you to do bulk follow/unfollow actions of up to 200 users at a time making it a pretty powerful tool for Twitter management.

My notes: $7.99 for up to 100 followers and $14.99 for up to 200. Twitter only, but with unique features that the other SMT don’t have.

Social Flow is an interesting business solution to watch real-time conversation on social media in order to predict the best times for publishing content to capture peak attention from target audiences. Some major publishers use Social Flow which includes National Geographic, Mashable, The Economist and The Washington Post to name a few. Social Flow offers a full suite of services that looks to expand audience engagement and increase revenue per customer. In addition to its Cadence and Crescendo precision products, SocialFlow conducts an analysis of social signals to help identify where marketers should spend money on Promoted Tweets, Promoted Posts and Sponsored Stories, extending the reach and engagement for Twitter and Facebook paid strategies.

My notes: This tool is too advanced and commercial for an entry-level social media group such as LRS.

Sproutsocial is a powerful management and engagement platform for social business. Sprout Social offers a single stream inbox designed to help you never miss a message, and tools to seamlessly post, collaborate and schedule messages to Twitter, Facebook and LinkedIn. The platform also has monitoring tools and rich analytics to help you visualize important metrics.

My notes: shareware app (one month), $59/m for the cheapest (up to 20 profiles)

By far the most expensive, but also the most promising-looking

SocialBro helps businesses learn how to better target and engage with their audience on Twitter. It provides tools to browse your community and identify key influencers, determine when the best time to tweet is, track engagement and analyze your competitors. Socialbro analyzes the timelines of your followers to generate a report showing you when the optimal time to tweet is that would reach the maximum amount of followers for more retweets and replies.

Crowdbooster offers a set of no-nonsense social media analytics with suggestions and resources to boost your online engagement. The platform provides at-a-glance analytics, recommendations for engagement and timing, audience insights and content scheduling to optimize delivery.

My notes: free version available.

CB vs HS: http://allisonw16.wordpress.com/2012/11/26/crowdbooster-and-hootsuite/

- Much simpler to use and understand: +

- Free version only allows for one Twitter account and one Facebook account: –

- Upgrades allow for more accounts, but still only Twitter and Facebook (no other social media types): –

- No social media feed: –

- Provides suggestions on when to post content based on when followers and friends are most active: +

Ricky here from Crowdbooster. I am a big fan of your entrepreneurial career. We are positioned a little bit differently from Hootsuite, and as far as doing the required daily management, you may still need to use Hootsuite. What we do well is making sense of the analytics, and giving you real-time feedback about how you can improve your content, timing, and engagement. We also do some of the listening for you so you don’t have to always stare at the firehose that Hootsuite brings to you, that way we can help give you some slack as far as knowing when influencers decide to follow you, etc. We work with bit.ly, not ow.ly just yet, but using bit.ly can help us look into your click data to suggest, for example, best places to curate your content.

https://plus.google.com/+PaulAllen/posts/idKkZRdA5gX

10. ArgyleSocial

Identify and engage with more prospects, qualify and quantify better leads, and build and maintain stronger relationships by linking social media actions to the marketing platforms you’re already using.

My notes: More on the sales side.

11. Sendible

http://sendible.com/tour/social-media-reporting

My notes:

Startup $39.99/m, Business $70/m, Corp $100/m, Premium $500/m

Solo plan, $10 with 8 services: http://sendible.com/pricing?filter=allplans

12. Cyfe

http://www.cyfe.com/

My notes:

$19 per month ($14 per month if paid annually). Unlimited everything: accounts, data exports, view data past 30 days, custom logo.

13. GrabinBox

http://www.grabinbox.com/

Not sure which social media tool you should choose? If you want an advanced platform with advanced features that can handle most of your accounts, you might want to opt for a paid membership to HootSuite or Crowdbooster. If you’d be fine with more basic features (which might be better for beginners with only a couple accounts to manage) GrabinBox might be a better fit for you.

My notes:

14. Google Reader

discontinued

My notes: App.Net and Plurk

Also, looking at the SMM tools, one can get a clear picture of which social media tools are trending (just by seeing which ones they are able to handle): Twitter, FB, LinkedIn.

Topsy (http://www.topsy.com)

http://manageflitter.com

Thursday, April 11, 11AM-1PM, Miller Center B-37

and/or

http://media4.stcloudstate.edu/scsu

We invite the campus community to a presentation by three vendors of Classroom Response System (CRS), AKA “clickers”:

11:00-11:30AM Poll Everywhere, Mr. Alec Nuñez

11:30-12:00PM iClicker, Mr. Jeff Howard

12:00-12:30PM Top Hat Monocle, Mr. Steve Popovich

12:30-1:00PM Turning Technologies, Mr. Jordan Ferns

links to documentation from the vendors:

http://web.stcloudstate.edu/informedia/crs/ClickerSummaryReport_NDSU.docx

http://web.stcloudstate.edu/informedia/crs/Poll%20Everywhere.docx

http://web.stcloudstate.edu/informedia/crs/tophat1.pdf

http://web.stcloudstate.edu/informedia/crs/tophat2.pdf

http://web.stcloudstate.edu/informedia/crs/turning.pdf

Top Hat Monocle docs:

http://web.stcloudstate.edu/informedia/crs/thm/FERPA.pdf

http://web.stcloudstate.edu/informedia/crs/thm/proposal.pdf

http://web.stcloudstate.edu/informedia/crs/thm/THM_CaseStudy_Eng.pdf

http://web.stcloudstate.edu/informedia/crs/thm/thm_vsCRS.pdf

iClicker docs:

http://web.stcloudstate.edu/informedia/crs/iclicker/iclicker.pdf

http://web.stcloudstate.edu/informedia/crs/iclicker/iclicker2VPAT.pdf

http://web.stcloudstate.edu/informedia/crs/iclicker/responses.doc

| Questions to vendor: alec@polleverywhere.com |

- 1. Is your system proprietary as far as the handheld device and the operating system software?

The site and the service are the property of Poll Everywhere. We do not provide handheld devices. Participants use their own device be it a smart phone, cell phone, laptop, tablet, etc. |

- 2. Describe the scalability of your system, from small classes (20-30) to large auditorium classes. (500+).

Poll Everywhere is used daily by thousands of users. Audience sizes upwards of 500+ are not uncommon. We’ve been used for events with 30,000 simultaneous participants in the past. |

- 3. Is your system receiver/transmitter based, wi-fi based, or other?

N/A |

- 4. What is the usual process for students to register a “CRS”(or other device) for a course? List all of the possible ways a student could register their device. Could a campus offer this service rather than through your system? If so, how?

Student participants may register by filling out a form. Or, student information can be uploaded via a CSV. |

- 5. Once a “CRS” is purchased can it be used for as long as the student is enrolled in classes? Could “CRS” purchases be made available through the campus bookstore? Once a student purchases a “clicker” are they able to transfer ownership when finished with it?

N/A. Poll Everywhere sells service licenses; the length and the number of students supported would be outlined in a services agreement. |

- 6. Will your operating software integrate with other standard database formats? If so, list which ones.

Need more information to answer. |

- 7. Describe the support levels you provide. If you offer maintenance agreements, describe what is covered.

8am to 8pm EST native English speaking phone support and email support. |

- 8. What is your company’s history in providing this type of technology? Provide a list of higher education clients.

Company pioneered and invented the use of this technology for audience and classroom response. http://en.wikipedia.org/wiki/Poll_Everywhere. Clients: University of Notre Dame (South Bend, Indiana); University of North Carolina-Chapel Hill (Raleigh, North Carolina); University of Southern California (Los Angeles, California); San Diego State University (San Diego, California); Auburn University (Auburn, Alabama); King’s College London (London, United Kingdom); Raffles Institution (Singapore); Fayetteville State University (Fayetteville, North Carolina); Rutgers University (New Brunswick, New Jersey); Pepperdine University (Malibu, California); Texas A&M University (College Station, Texas); University of Illinois (Champaign, Illinois) |

- 9. What measures does your company take to ensure student data privacy? Is your system in compliance with FERPA and the Minnesota Data Practices Act? (https://www.revisor.leg.state.mn.us/statutes/?id=13&view=chapter)

Our Privacy Policy can be found here: http://www.polleverywhere.com/privacy-policy. We take privacy very seriously. |

- 10. What personal data does your company collect on students and for what purpose? Is it shared or sold to others? How is it protected?

Name. Phone Number. Email. For the purposes of voting and identification (Graded quizzes, attendance, polls, etc.). It is never shared or sold to others. |

- 11. Do any of your business partners collect personal information about students that use your technology?

No. |

- 12. With what formats can test/quiz questions be imported/exported?

Import via text. Export via CSV. |

- 13. List compatible operating systems (e.g., Windows, Macintosh, Palm, Android)?

Works via standard web technology including Safari, Chrome, Firefox, and Internet Explorer. Participant web voting fully supported on Android and iOS devices. Text message participation supported via both shortcode and longcode formats. |

- 14. What are the total costs to students including device costs and periodic or one-time operation costs?

Depends on negotiated service level agreement. We offer a student pays model at $14 per year or Institutional Licensing. |

- 15. Describe your costs to the institution.

Depends on negotiated service level agreement. We offer a student pays model at $14 per year or Institutional Licensing. |

- 16. Describe how your software integrates with PowerPoint or other presentation systems.

Downloadable slides from the website for Windows PowerPoint and downloadable app for PowerPoint and Keynote integration on a Mac. |

- 17. State your level of integration with Desire2Learn (D2L)? Does the integration require a server or other additional equipment the campus must purchase?

Export results from site via CSV for import into D2L. |

- 17. How does your company address disability accommodation for your product?

We follow the latest web standards best practices to make our website widely accessible by all. To make sure we live up to this, we test our website in a text-based browser called Lynx that makes sure we’re structuring our content correctly for screen readers and other assistive technologies. |

- 18. Does your software limit the number of answers per question in tests or quizzes? If so, what is the max question limit?

No. |

- 19. Does your software provide for integrating multimedia files? If so, list the file format types supported.

Supports image formats (.PNG, .GIF, .JPG). |

- 20. What has been your historic schedule for software releases and what pricing mechanism do you make available to your clients for upgrading?

We ship new code daily. New features are released several times a year depending on when we finish them. New features are released to the website for use by all subscribers. |

- 21. Describe your “CRS”(s).

Poll Everywhere is a web based classroom response system that allows students to participate from their existing devices. No expensive hardware “clickers” are required. More information can be found at http://www.polleverywhere.com/classroom-response-system. |

- 22. If applicable, what is the average life span of a battery in your device and what battery type does it take?

N/A. Battery manufacturers hate us. Thirty percent of their annual profits can be attributed to their use in clickers (we made that up). |

- 23. Does your system automatically save upon shutdown?

Ours is a “cloud based” system. User data is stored there even when your computer is not on. |

- 24. What is your company’s projection/vision for this technology in the near and far term?

We want to take clicker companies out of business. We think it’s ridiculous to charge students and institutions a premium for outdated technology when existing devices and standard web technology can be used instead for less than a tenth of the price. |

- 25. Does any of your software/apps require administrator permission to install?

No. |

- 26. If your system is radio frequency based, what frequency spectrum does it operate in? If the system operates in the 2.4-2.5 GHz spectrum, have you tested to ensure that smart phones, wireless tablets and laptops, and 2.4 GHz wireless phones do not affect your system? If so, what are the results of those tests?

No. |

- 27. What impact to the wireless network does the solution have?

Depends on a variety of factors. Most university wireless networks are capable of supporting Poll Everywhere. Poll Everywhere can also make use of cell phone carrier infrastructure through SMS and data networks on the students’ phones. |

- 28. Can the audience response system be used spontaneously for polling?

Yes. |

- 29. Can quiz questions and response distributions be imported and exported from and to plaintext or a portable format? (motivated by assessment & accreditation requirements).

Yes. |

- 30. Is there a requirement that a portion of the course grade be based on the audience response system?

No. |

Gloria Sheldon

MSU Moorhead

Fall 2011 Student Response System Pilot

Summary Report

NDSU has been standardized on a single student response (i.e., “clicker”) system for over a decade, with the intent to provide a reliable system for students and faculty that can be effectively and efficiently supported by ITS. In April 2011, Instructional Services made the decision to explore other response options and to identify a suitable replacement product for the previously used e-Instruction Personal Response System (PRS). At the time, PRS was laden with technical problems that rendered the system ineffective and unsupportable. That system also had a steep learning curve, was difficult to navigate, and was unnecessarily time-consuming to use. In fact, many universities across the U.S. experienced similar problems with PRS and have since then adopted alternative systems.

A pilot to explore alternative response systems was initiated at NDSU in fall 2011. The pilot was aimed at further investigating two systems—Turning Technologies and iClicker—in realistic classroom environments. As part of this pilot program, each company agreed to supply required hardware and software at no cost to faculty or students. Each vendor also visited campus to demonstrate their product to faculty, students and staff.

An open invitation to participate in the pilot was extended to all NDSU faculty on a first-come, first-served basis. Of those who indicated interest, 12 were included as participants in this pilot.

Pilot Faculty Participants:

- Angela Hodgson (Biological Sciences)

- Ed Deckard (AES Plant Science)

- Mary Wright (Nursing)

- Larry Peterson (History, Philosophy & Religious Studies)

- Ronald Degges (Statistics)

- Julia Bowsher (Biological Sciences)

- Sanku Mallik (Pharmaceutical Sciences)

- Adnan Akyuz (AES School of Natural Resource Sciences)

- Lonnie Hass (Mathematics)

- Nancy Lilleberg (ITS/Communications)

- Lisa Montplaisir (Biological Sciences)

- Lioudmila Kryjevskaia (Physics)

Pilot Overview

The pilot included three components: 1) Vendor demonstrations, 2) in-class testing of the two systems, and 3) side-by-side faculty demonstrations of the two systems.

After exploring several systems, Instructional Services narrowed down to two viable options: Turning Technologies and iClicker. Both of these systems met initial criteria that were assembled based on faculty input and previous usage of the existing response system. These criteria included durability, reliability, ease of use, radio frequency transmission, integration with Blackboard LMS, cross-platform compatibility (Mac, PC), stand-alone software (i.e., no longer tied to PowerPoint or other programs), multiple answer formats (including multiple choice, true/false, numeric), potential to migrate to mobile/Web solutions at some point in the future, and cost to students and the university.

In the first stage of the pilot, both vendors were invited to campus to demonstrate their respective technologies. These presentations took place during spring semester 2011 and were attended by faculty, staff and students. The purpose of these presentations was to introduce both systems and provide faculty, staff, and students with an opportunity to take a more hands-on look at the systems and provide their initial feedback.

In the second stage of the pilot, faculty were invited to test the technologies in their classes during fall semester 2011. Both vendors supplied required hardware and software at no cost to faculty and students, and both provided online training to orient faculty to their respective system. Additionally, Instructional Services staff provided follow-up support and training throughout the pilot program. Both vendors were requested to ensure system integration with Blackboard. Both vendors indicated that they would provide the number of clickers necessary to test the systems equally across campus. Both clickers were allocated to courses of varying sizes, ranging from 9 to 400+ students, to test viability in various facilities with differing numbers of users. Participating faculty agreed to offer personal feedback and collect feedback from students regarding experiences with the systems at the end of the pilot.

In the final stage of the pilot, Instructional Services facilitated a side-by-side demonstration led by two faculty members. Each faculty member showcased each product on a function-by-function basis so that attendees were able to easily compare and contrast the two systems. Feedback was collected from attendees.

Results of Pilot

In stage one, we established that both systems were viable, appeared to offer similar features and functions, and were compatible with existing IT systems at NDSU. The determination was made to include both products in a larger classroom trial.

In stage two, we discovered that both systems largely functioned as intended; however, several differences between the technologies in terms of advantages and disadvantages were discovered that influenced our final recommendation. (See Appendix A for a list of these advantages, disadvantages, and potential workarounds.) We also encountered two significant issues that altered the course of the pilot. Initially, it was intended that both systems would be tested in equal number in terms of courses and students. Unfortunately, at the time of the pilot, iClicker was not able to provide more than 675 clickers, which was far fewer than anticipated. Turning Technologies was able to provide 1,395 clickers. As a result, Turning Technologies was used by a larger number of faculty and students across campus.

At the beginning of the pilot, Blackboard integration with iClicker at NDSU was not functional. The iClicker vendor provided troubleshooting assistance immediately, but the problem was not resolved until mid-November. As a result, iClicker users had to use alternative solutions for registering clickers and uploading points to Blackboard for student viewing. Turning Technologies was functional and fully integrated with Blackboard throughout the pilot.

During the span of the pilot, additional minor issues were discovered with both systems. A faulty iClicker receiver slightly delayed the effective start date of clicker use in one course. The vendor responded by sending a new receiver; however, it was an incorrect model. Instructional Services temporarily exchanged receivers with another member of the pilot group until a functional replacement arrived. Similarly, a Turning Technologies receiver was received with outdated firmware. Turning Technologies support staff identified the problem and assisted in updating the firmware with an update tool located on their website. A faculty participant discovered a software flaw in the iClicker software that hides the software toolbar when disconnecting a laptop from a second monitor. iClicker technical support assisted in identifying the problem and stated the problem would be addressed in a future software update. A workaround was identified that mitigated this problem for the remainder of the pilot. It is important to note that these issues were not widespread and did not affect all pilot users; however, they attest to the need for timely, reliable, and effective vendor support.

Students and faculty reported positive experiences with both technologies throughout the semester. Based on feedback, users of both systems found the new technologies to be much improved over the previous PRS system, indicating that adopting either technology would be perceived as an upgrade among students and faculty. Faculty pilot testers met several times during the semester to discuss their experiences with each system; feedback was sent to each vendor for their comments, suggestions, and solutions.

During the stage three demonstrations, feedback from attendees focused on the inability of iClicker to integrate with Blackboard at that time and the substantial differences between the two systems in terms of entering numeric values (i.e., Turning Technologies has numeric buttons, while iClicker requires the use of a directional key pad to scroll through numeric characters). Feedback indicated that attendees perceived Turning Technologies’ clickers to be much more efficient for submitting numeric responses. Feedback regarding other functionalities indicated relative equality between both systems.

Recommendation

Based on the findings of this pilot, Instructional Services recommends that NDSU IT adopt Turning Technologies as the replacement for the existing PRS system. While both pilot-tested systems are viable solutions, Turning Technologies appears to meet the needs of a larger user base. Additionally, the support offered by Turning Technologies was more timely and effective throughout the pilot. With the limited resources of IT, vendor support is critical and was a major reason for exploring alternative student response technologies.

From Instructional Services’ standpoint, standardizing to one solution is imperative for two major reasons: cost efficiency for students (i.e., preventing students from having to purchase duplicate technologies) and efficient utilization of IT resources (i.e., support and training). It is important to note that this recommendation is based on the opinion of the Instructional Services staff and the majority of pilot testers, but is not based on consensus among all participating faculty and staff. It is possible that individual faculty members may elect to use other options that best meet their individual teaching needs, including (but not limited to) iClicker. As an IT organization, we continue to support technology that serves faculty, student and staff needs across various colleges, disciplines, and courses. We feel that this pilot was effective in determining the student response technology—Turning Technologies—that will best serve NDSU faculty, students and staff for the foreseeable future.

Once a final decision concerning standardization is made, contract negotiations should begin in earnest with the goal of completion by January 1, 2012, in order to accommodate those wishing to use clickers during the spring session.

Appendix A: Clicker Comparisons

Turning Technologies and iClicker

Areas where both products have comparable functionality:

- Setting up the receiver and software

- Student registration of clickers

- Software interface floats above other software

- Can use with anything – PowerPoint, Websites, Word, etc.

- Asking questions on the fly

- Can create questions / answers files

- Managing scores and data

- Allow participation points, points for correct answer, change correct answer

- Reporting – Summary and Detailed

- Uploading scores and data to Blackboard (but there was a big delay with the iClicker product)

- Durability of the receivers and clickers

- Free software

- Offer mobile web device product to go “clickerless”

Areas where the products differ:

Main Shortcomings of Turning Technologies Product:

- Costs $5 more – no workaround

- Doesn’t have instructor readout window on receiver base –

- This is a handy function in iClicker that lets the instructor see the %’s of votes as they come in, allowing the instructor to plan how he/she will proceed.

- Workaround: As the time winds down to answer the question, the question and answers are displayed on the screen. Intermittently, the instructor would push a button to mute the projector, push a button to view graph results quickly, then push a button to hide graph and push a button to unmute the projector. In summary, push four buttons quickly each time you want to see the feedback, and the students will see a black screen momentarily.

- Processing multiple sessions when uploading grading –

- Turning Technologies uses their own file structure types, but iClicker uses comma-separated-value text files which work easily with Excel

- Workaround: When uploading grades into Blackboard, upload them one session at a time, and use a calculated total column in Bb to combine them. Ideally, instructors would upload the grades daily or weekly to avoid backlog of sessions.
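The session-by-session workaround amounts to summing each student's points across sessions, which is what the calculated total column in Bb does. As a rough offline illustration only, the sketch below assumes each session has already been exported to CSV text with one row per student; the column names `student_id` and `points` and the function name `total_points` are hypothetical and do not reflect either vendor's actual file layout.

```python
import csv
import io
from collections import defaultdict


def total_points(session_csv_texts, id_column="student_id", points_column="points"):
    """Sum each student's points across several per-session CSV exports.

    session_csv_texts: list of strings, each the full text of one session's CSV
    Returns a dict mapping student id to total points over all sessions.
    Students missing from a session simply get no points for it.
    """
    totals = defaultdict(float)
    for text in session_csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            totals[row[id_column]] += float(row[points_column])
    return dict(totals)
```

Running this weekly over the new session files mirrors the advice to upload regularly rather than letting sessions pile up.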

Main Shortcomings of iClicker Product:

- Entering numeric answers –

- Questions that use numeric answers are widely used in Math and the sciences. Instead of choosing a multiple-choice answer, students solve the problem and enter the actual numeric answer, which can include numbers and symbols.

- Workaround: Students push mode button and use directional pad to scroll up and down through a list of numbers, letters and symbols to choose each character individually from left to right. Then they must submit the answer.

- Number of multiple choice answers –

- iClicker has 5 buttons on the transmitter for direct answer choices and Turning Technologies has 10.

- Workaround: Similar to numeric answer workaround. Once again the simpler transmitter becomes complex for the students.

- Potential Vendor Support Problems –

- It took iClicker over 3 months to get their grade upload interface working with NDSU’s Blackboard system. The Turning Technologies interface worked right away. No workaround.