
Our first Library 2.022 mini-conference, “Virtual Reality and Learning: Leading the Way,” will be held online (and for free) on Tuesday, March 29th, 2022.

Virtual Reality was identified by the American Library Association as one of the top 10 library technology trends for the future. The technology is gaining similar traction in education, museums, and professional learning. Virtual Reality is a social and digital technology that uniquely promises to transform learning, build empathy, and make personal and professional training more effective and economical.

Through the leadership of the state libraries in California, Nevada, and Washington, Virtual Reality projects have been deployed in more than 120 libraries across the three states, in service areas that are both economically and geographically diverse. This example, along with other effective approaches, can help us begin a national conversation about the use of XR/immersive learning technology in libraries, schools, and museums, and about making content available to all users by creating spaces where digital inclusion and digital literacy serve those who need them most.

This is a free event, being held live online and also recorded.

REGISTER HERE

to attend live and/or to receive the recording links afterward.

Please also join this Library 2.0 network to be kept updated on this and future events.

Everyone is invited to participate in our Library 2.0 conference events, which are designed to foster collaboration and knowledge sharing among information professionals worldwide. Each three-hour event consists of a keynote panel, 10-15 crowd-sourced thirty-minute presentations, and a closing keynote.

Participants are encouraged to use #library2022 and #virtualrealitylearning on their social media posts about the event.

CALL FOR PROPOSALS: The call for proposals is now open. We encourage proposals that showcase effective uses of Virtual Reality in libraries, schools, and museums, as well as proposals that address visions or examples of Virtual Reality impacting adult education, STEM learning, the acquisition of marketable skills, workforce development, and unique learning environments. Proposals can be submitted HERE.

KEYNOTE SPEAKERS, SPECIAL GUESTS, AND ORGANIZERS:

Sara Jones

State Librarian, Washington State Library

Before becoming State Librarian, Sara Jones served as director of the Marin County Free Library beginning in July 2013. Prior to her time in California, Jones held positions in Nevada libraries for 25 years, including Carson City Library Director, Elko-Lander-Eureka County Library System Director and Youth Services Coordinator, and Nevada State Librarian and Administrator of the State Library and Archives from 2000 to 2007. Jones was named the Nevada Library Association’s Librarian of the Year in 2012; served as Nevada’s American Library Association (ALA) Council Delegate for four years; coordinated ALA National Library Legislative Day for Nevada for 12 years; served as Nevada Library Association president; was an active member of the Western Council of State Libraries, serving as both vice president and president; and served on the University of North Texas Department of Library and Information Sciences Board of Advisors for more than 10 years. She received the ALA Sullivan Award for services to children in 2018. She is a member and past president of CALIFA, a nonprofit library membership consortium.

Tammy Westergard

Senior Workforce Development Leader, Project Coordinator – U.S. Department of Education Reimagine Workforce Preparation Grant Program – Supporting and Advancing Nevada’s Dislocated Individuals – Project SANDI

As Nevada State Librarian (2020 – 2021), Tammy Douglass Westergard was a leader in envisioning the dynamic roles of libraries in the future of learning and democracy in America. She was also named the Nevada Library Association’s 2020 Librarian of the Year. She deployed the first certification program within any public library in America where individuals can earn a Manufacturing Technician 1 (MT1), a nationally recognized industry credential required for many high-paying careers in advanced manufacturing. In parallel with California public libraries, Westergard launched in Nevada the first statewide learning program in American public libraries to deliver augmented reality and virtual reality STEM content and equipment, creating immersive learning experiences for thousands of learners. Westergard conceived and then led the design of the first-ever initiative deploying 3D learning tools for the College of Southern Nevada’s (CSN) allied health programs. As a result, CSN became the first dialysis technician training program in the world to use a virtual reality simulation for instruction, and it was able to accept remote, online learners who previously could not access the program. Tammy received her bachelor’s degree from the University of Nevada, Reno, and a Master of Library Science from the University of North Texas, and she is a member of Beta Phi Mu, the international library and information studies honor society. She is a member of the International Advisory Board of the Vaclav Havel Library Foundation. Library Journal named Westergard an “Agent of Change Mover and Shaker.” Tammy’s great passion is advancing educational opportunities through the library. She believes there is dignity in work, which is why she is expanding the first-in-the-country programs she created to help displaced workers reskill and upskill so they can step into living-wage jobs.

Greg Lucas

California State Librarian

Greg Lucas was appointed California’s 25th State Librarian by Governor Jerry Brown on March 25, 2014. Prior to his appointment, Greg was the Capitol Bureau Chief for the San Francisco Chronicle where he covered politics and policy at the State Capitol for nearly 20 years. During Greg’s tenure as State Librarian, the State Library’s priorities have been to improve reading skills throughout the state, put library cards into the hands of every school kid and provide all Californians the information they need – no matter what community they live in. The State Library invests $10 million annually in local libraries to help them develop more innovative and efficient ways to serve their communities. Since 2015, the State Library has improved access for millions of Californians by helping connect more than half of the state’s 1,100 libraries to a high-speed Internet network that links universities, colleges, schools, and libraries around the world. Greg holds a Master’s in Library and Information Science from California State University San Jose, a Master’s in Professional Writing from the University of Southern California, and a degree in communications from Stanford University.

Milton Chen

Independent Speaker, Author, Board Member

Milton says that he has had a very fortunate and fulfilling career on both coasts, working with passionate innovators to transform education in creative ways. His first job out of college was at Sesame Workshop in New York, working with founder Joan Cooney and some amazingly talented colleagues in TV production and educational research. From 1976 to 1980, he worked in the research department, creating science curricula for Sesame Street and testing segments for The Electric Company, the reading series. He then served as director of research for the development of 3-2-1 Contact, a science series for 8- to 12-year-olds. Eventually, Sesame Street circled the globe, with broadcasts in more than 100 countries and versions in Spanish, Chinese, Arabic, and many other languages. He then came to the Bay Area to pursue doctoral studies in communication at Stanford. His dissertation looked at gender differences in high school computer use, including the new desktop computers then called “microcomputers.” After two years as an assistant professor at the Harvard Graduate School of Education, he joined KQED-San Francisco (PBS) in 1987 as director of education, where he and his colleagues worked with teachers to incorporate video into their lessons, using VCRs! He wrote his first book, The Smart Parent’s Guide to Kids’ TV (1994), and hosted a program on the topic with special guest First Lady Hillary Clinton. In 1998, he joined The George Lucas Educational Foundation as executive director. During his 12 years there, the foundation produced documentaries and other media on schools embracing innovations such as project-based learning, social/emotional learning, digital technologies, and community engagement. It also created the Edutopia brand to represent more ideal environments for learning. Today, the Edutopia.org website attracts more than 5 million monthly users.

Karsten Heise

Director of Strategic Programs, Nevada Governor’s Office of Economic Development (GOED)

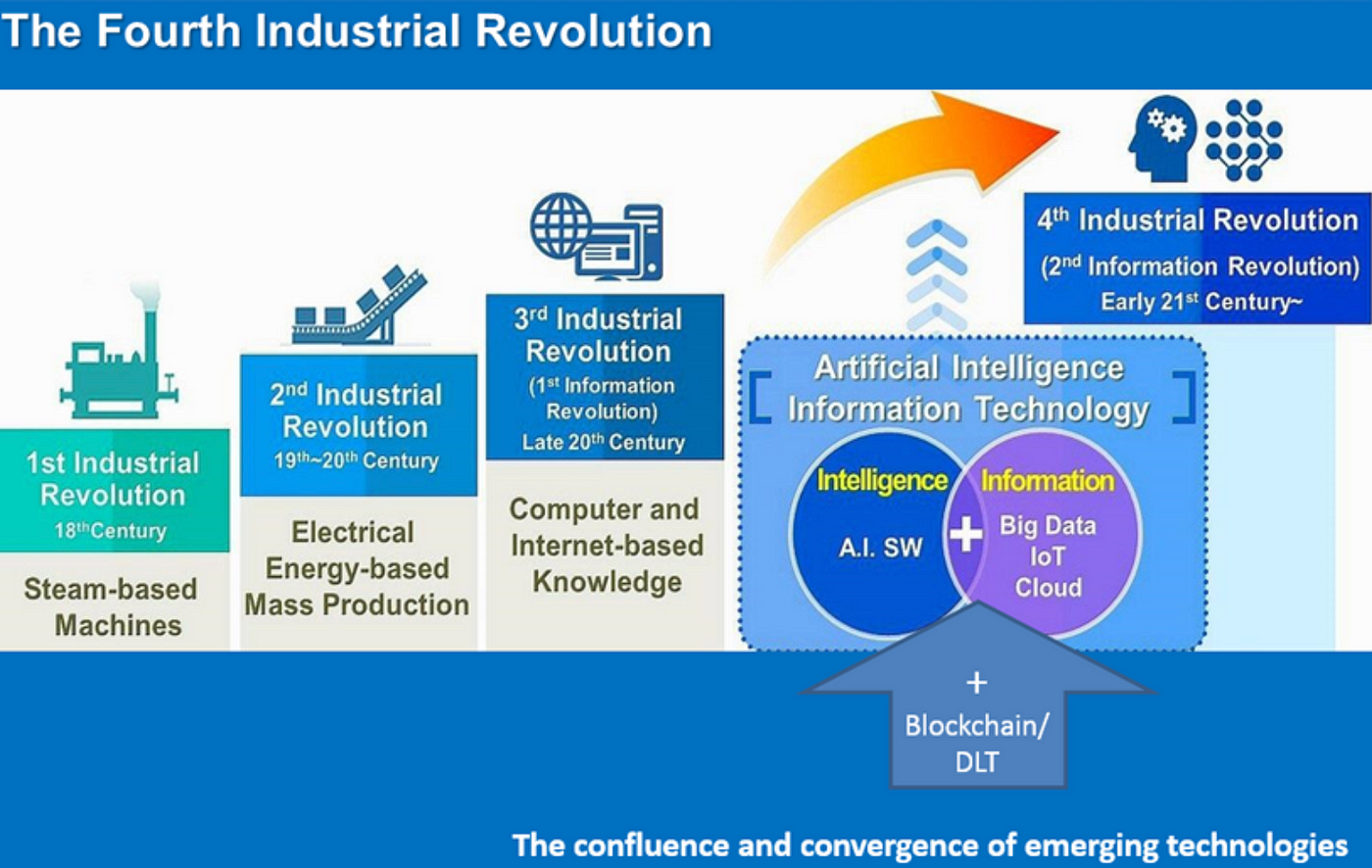

Karsten Heise joined the Nevada Governor’s Office of Economic Development (GOED) in April 2012, initially as Technology Commercialization Director and later as Director of Strategic Programs. He leads Innovation Based Economic Development (IBED) in Nevada. As part of IBED, he created and manages Nevada’s State Small Business Credit Initiative (SSBCI) Venture Capital Program. He also leads and oversees the ‘Nevada Knowledge Fund’, which spurs commercialization at the state’s research institutions, fosters Research & Development engagements with the private sector, and supports local entrepreneurial ecosystems and individual startups. In addition, Karsten is deeply familiar with the European vocational training system, having completed his banking apprenticeship in Germany. This experience inspired the development of the ‘Learn and Earn Advanced career Pathway’ (LEAP) framework in Nevada, which became the standard template for developing career pathway models in the state. He is deeply passionate about continuously developing new workforce development approaches that address the consequences of the Fourth Industrial Revolution. Prior to joining GOED, Karsten spent five years in China working as an external consultant to Baron Group Beijing and as a member of the senior management team at Asia Assets Limited, Beijing. Before relocating to Beijing, Karsten worked for 10 years in the London-based international equity divisions of leading Wall Street investment banks Morgan Stanley, Donaldson, Lufkin & Jenrette (DLJ), and most recently Credit Suisse First Boston (CSFB). As Vice President at CSFB, he specialized in alternative investments, structured products, and international equities. His clients included entrepreneurs, ultra-high-net-worth individuals, and family offices, as well as insurance companies, pension funds, asset managers, and banks. Karsten speaks German and Mandarin Chinese.
Karsten completed his university education in the United Kingdom with a Bachelor of Science with First Class Honours in Economics from the University of Buckingham, a Master of Science with Distinction in International Business & Finance from the University of Reading, and a Master of Philosophy with Merit in Modern Chinese Studies, Chinese Economy from the University of Cambridge – Wolfson College. He is also an alumnus of the Investment Management Evening Program at London Business School and completed graduate research studies at Peking University, China.

Dana Ryan, PhD

Special Assistant to the President, Truckee Meadows Community College

With a doctorate in educational leadership from the University of Nevada, Reno, Dana has spent decades advancing education and training solutions that meaningfully link, scale, enhance, and further develop digital components in healthcare, advanced manufacturing, logistics, IT, and the construction trades. She understands the WIOA one-stop operating system programs and processes and can explain how services are delivered to clients through local offices, regional centers, and libraries. Her skill in analyzing labor market and other demographic data enables her to clearly explain the relevance of labor market information and of local, state, and national labor market trends. Dana works with labor and management groups and leaders, among others.


The School of Information at San José State University is the founding conference sponsor. Please register as a member of the Library 2.0 network to be kept informed of future events. Recordings from previous years are available under the Archives tab at Library 2.0 and at the Library 2.0 YouTube channel.