
Information Overload Helps Fake News Spread, and Social Media Knows It

Understanding how algorithm manipulators exploit our cognitive vulnerabilities empowers us to fight back

https://www.scientificamerican.com/article/information-overload-helps-fake-news-spread-and-social-media-knows-it/

a minefield of cognitive biases.

People who behaved in accordance with them—for example, by staying away from the overgrown pond bank where someone said there was a viper—were more likely to survive than those who did not.

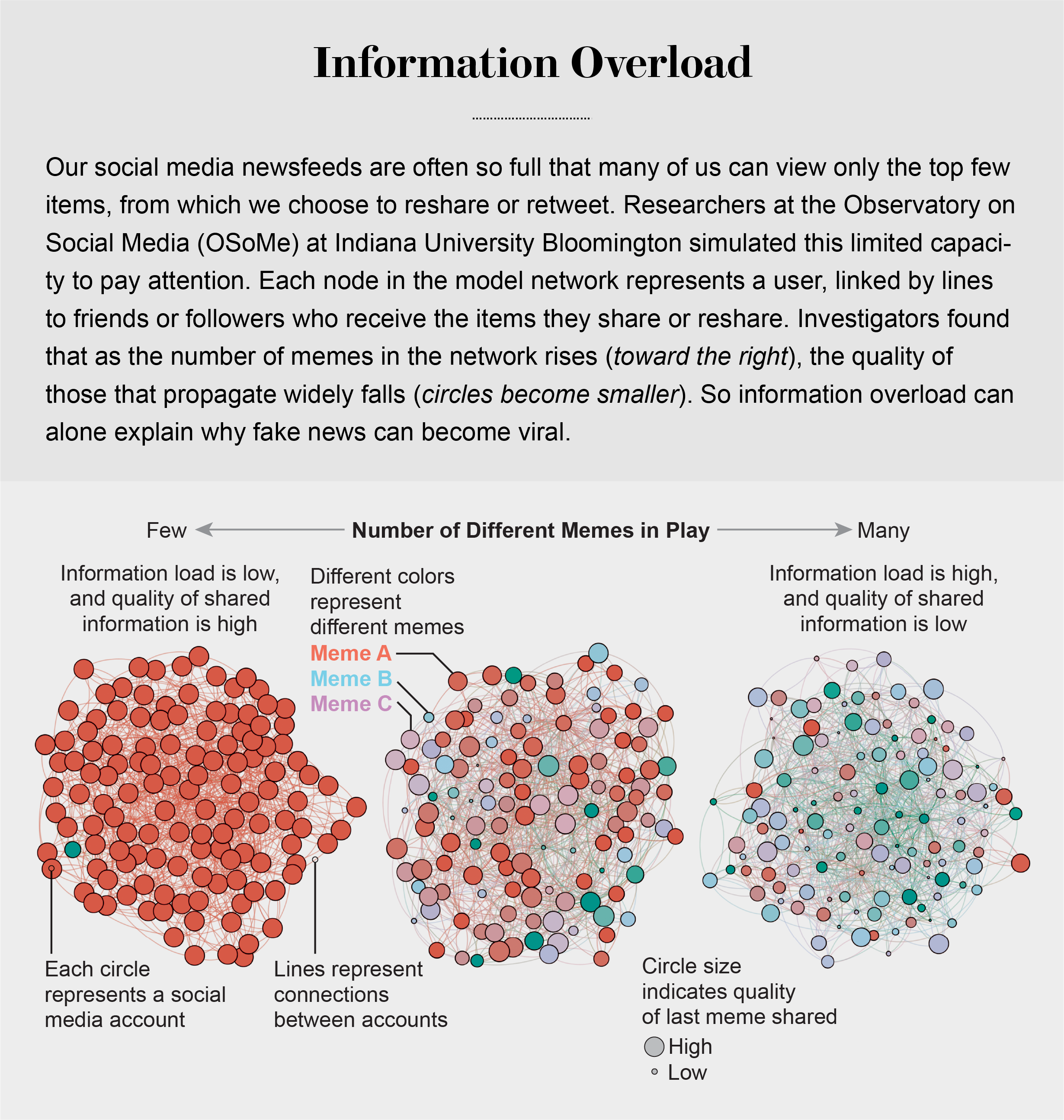

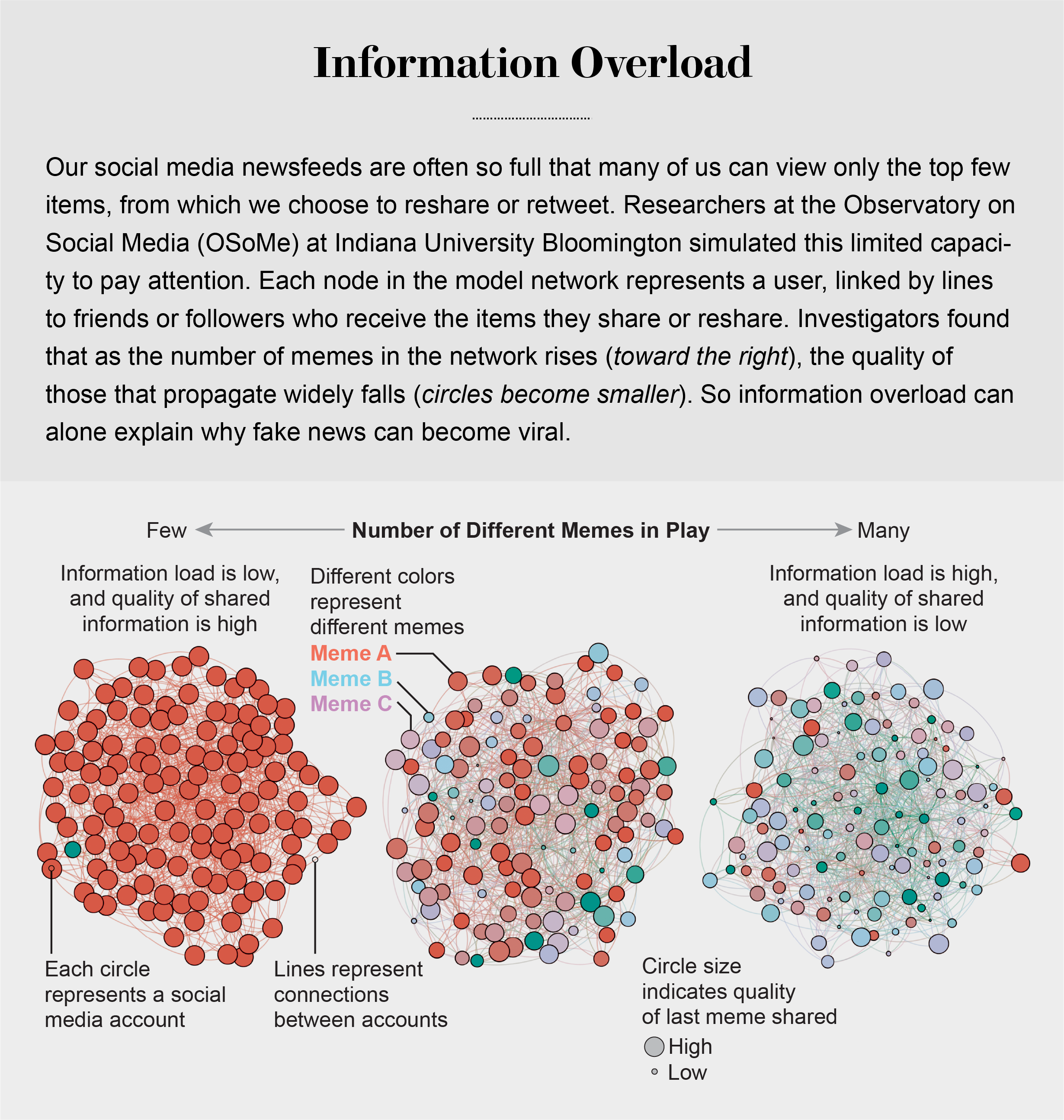

Compounding the problem is the proliferation of online information. Viewing and producing blogs, videos, tweets and other units of information called memes has become so cheap and easy that the information marketplace is inundated. My note: folksonomy at its worst.

At the University of Warwick in England and at Indiana University Bloomington’s Observatory on Social Media (OSoMe, pronounced “awesome”), our teams are using cognitive experiments, simulations, data mining and artificial intelligence to comprehend the cognitive vulnerabilities of social media users.

Both teams are also developing analytical and machine-learning aids to fight social media manipulation.

As Nobel Prize–winning economist and psychologist Herbert A. Simon noted, “What information consumes is rather obvious: it consumes the attention of its recipients.”

attention economy

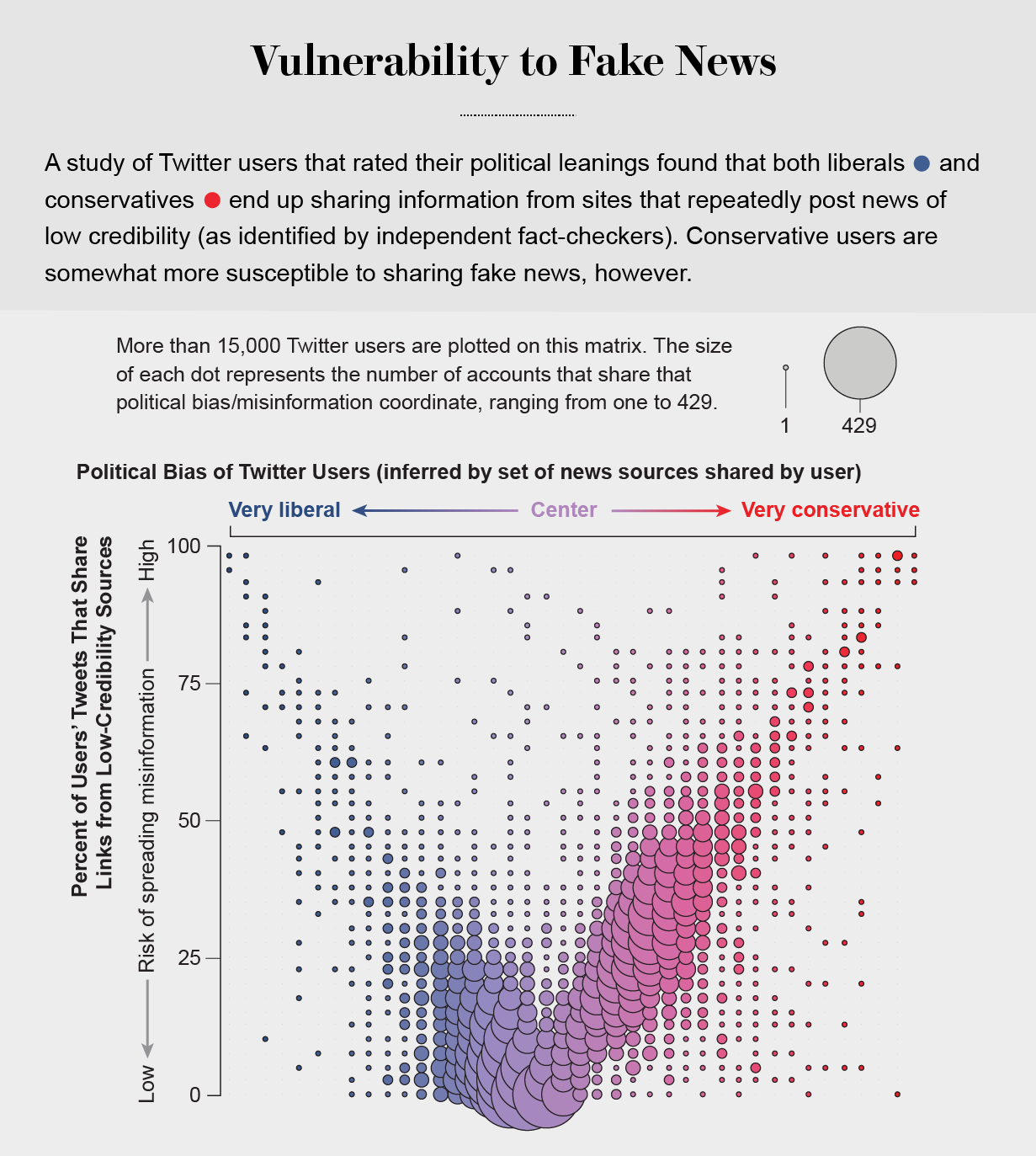

Our models revealed that even when we want to see and share high-quality information, our inability to view everything in our news feeds inevitably leads us to share things that are partly or completely untrue.
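The limited-attention argument can be explored with a toy agent-based simulation. This sketch is not the authors' model. The follower network, feed size, posting probability and quality-weighted resharing rule are all hypothetical choices. Each agent sees only the last `feed_size` items in its feed and reshares one of them in proportion to its hidden quality. The function then reports the correlation between each meme's quality and how often it was shared.

```python
import random
from collections import Counter, deque

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def quality_popularity_correlation(feed_size, n_agents=100, n_followers=10,
                                   steps=20_000, new_meme_prob=0.3, seed=0):
    """Toy limited-attention model; every parameter here is hypothetical."""
    rng = random.Random(seed)
    # Each agent is followed by a fixed random set of other agents.
    followers = [rng.sample([a for a in range(n_agents) if a != i], n_followers)
                 for i in range(n_agents)]
    # Limited attention: a feed keeps only its newest `feed_size` items.
    feeds = [deque(maxlen=feed_size) for _ in range(n_agents)]
    quality = []          # quality[m] is meme m's quality, in (0, 1]
    shares = Counter()    # meme id -> times it was posted or reshared
    for _ in range(steps):
        agent = rng.randrange(n_agents)
        visible = list(feeds[agent])
        if not visible or rng.random() < new_meme_prob:
            meme = len(quality)                 # post a brand-new meme
            quality.append(1.0 - rng.random())
        else:
            # Reshare something visible, favoring higher quality.
            meme = rng.choices(visible, weights=[quality[m] for m in visible])[0]
        shares[meme] += 1
        for f in followers[agent]:
            feeds[f].appendleft(meme)           # oldest item falls off the far end
    ids = range(len(quality))
    return pearson([quality[m] for m in ids], [shares[m] for m in ids])

for size in (2, 10, 50):
    print(size, round(quality_popularity_correlation(size), 3))
```

Comparing small and large `feed_size` values is one way to probe the article's claim that limited attention weakens the link between quality and popularity. The toy is uncalibrated, so its numbers are illustrative only.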

Frederic Bartlett

Cognitive biases greatly worsen the problem.

We now know that our minds do this all the time: they adjust our understanding of new information so that it fits in with what we already know. One consequence of this so-called confirmation bias is that people often seek out, recall and understand information that best confirms what they already believe.

This tendency is extremely difficult to correct.

Making matters worse, search engines and social media platforms provide personalized recommendations based on the vast amounts of data they have about users’ past preferences.
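Here is a minimal sketch of preference-based recommendation. It assumes each item is described by a hypothetical topic vector and that the user's profile is the average of items they engaged with before. Ranking by cosine similarity to that profile shows how recommendations drift toward what the user already favors. Real platforms use far richer signals than this.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def recommend(history, catalog, n=2):
    """Return the n catalog items most similar to the average of `history`.

    history: topic vectors of items the user engaged with before.
    catalog: dict mapping item name -> topic vector (hypothetical features).
    """
    dims = len(history[0])
    profile = [sum(vec[i] for vec in history) / len(history) for i in range(dims)]
    ranked = sorted(catalog, key=lambda name: cosine(profile, catalog[name]), reverse=True)
    return ranked[:n]

# Hypothetical topic axes: (viewpoint A, viewpoint B, neutral reporting)
catalog = {
    "A-side op-ed": (1.0, 0.0, 0.0),
    "A-side meme": (0.9, 0.0, 0.1),
    "B-side op-ed": (0.0, 1.0, 0.0),
    "wire report": (0.2, 0.2, 0.6),
}
history = [(1.0, 0.0, 0.0), (0.8, 0.0, 0.2)]
print(recommend(history, catalog))  # ['A-side meme', 'A-side op-ed']
```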

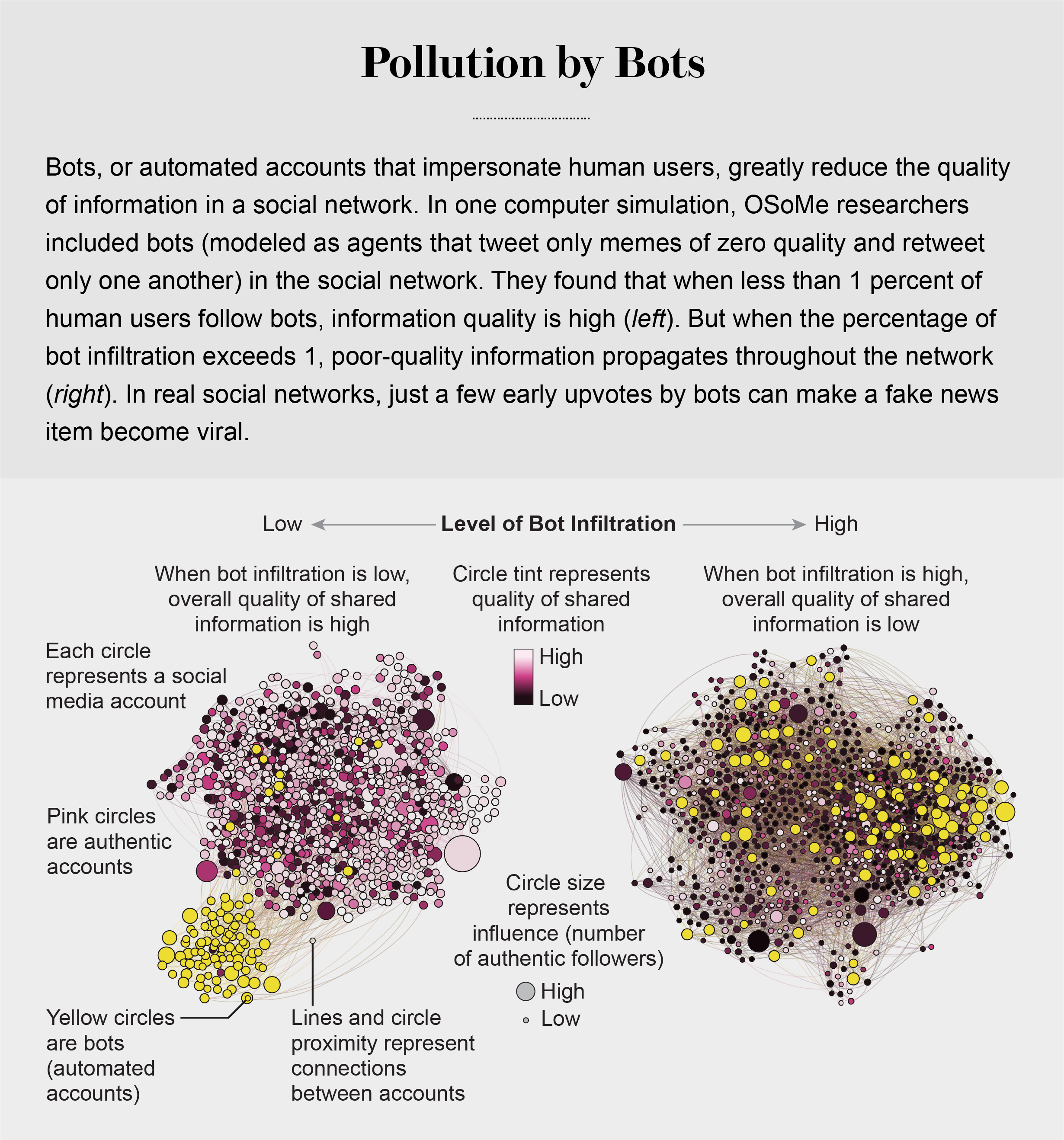

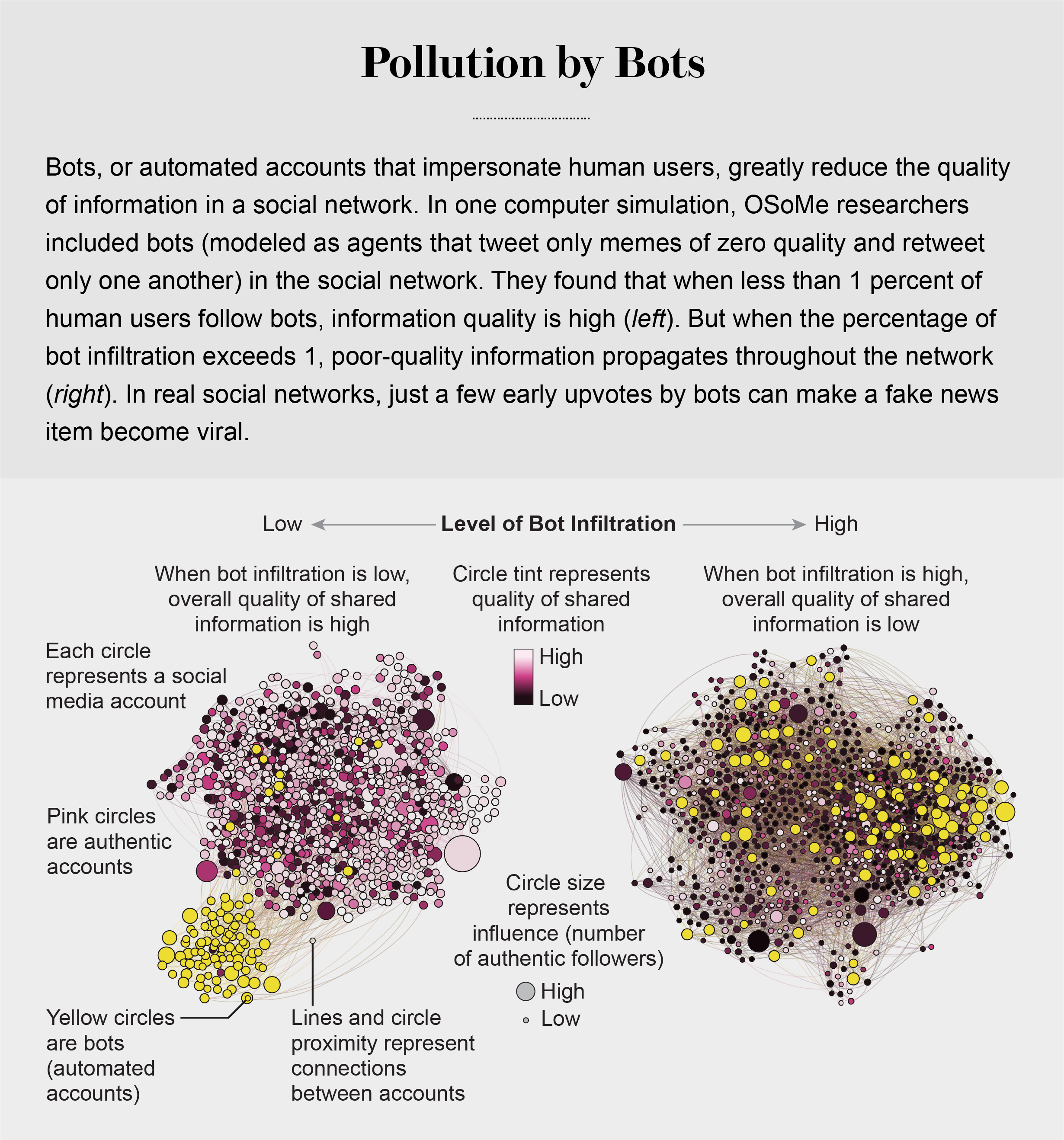

pollution by bots

Social Herding

Social groups create a pressure toward conformity so powerful that it can overcome individual preferences, and by amplifying random early differences, this pressure can cause segregated groups to diverge to extremes.

Social media follows a similar dynamic. We confuse popularity with quality and end up copying the behavior we observe.

Information is transmitted via “complex contagion”: when we are repeatedly exposed to an idea, typically from many sources, we are more likely to adopt and reshare it.
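Complex contagion is often modeled with a threshold rule. A node adopts an idea only once at least `threshold` of its neighbors have adopted it, whereas simple contagion needs just one exposure. This sketch is a generic illustration on a made-up seven-account network, not a model taken from the article.

```python
def spread(neighbors, seeds, threshold):
    """Threshold contagion: repeat passes until no new node adopts.

    neighbors: dict mapping node -> list of neighboring nodes.
    seeds: nodes that have adopted the idea at the start.
    threshold: number of adopting neighbors a node needs before it adopts.
    """
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, nbrs in neighbors.items():
            if node not in adopted and sum(n in adopted for n in nbrs) >= threshold:
                adopted.add(node)
                changed = True
    return adopted

# Hypothetical network: a tightly knit cluster (A-D) loosely tied to a chain (E-G).
network = {
    "A": ["B", "C", "D"], "B": ["A", "C", "D"],
    "C": ["A", "B", "D", "E"], "D": ["A", "B", "C", "E"],
    "E": ["C", "D", "F"], "F": ["E", "G"], "G": ["F"],
}
print(sorted(spread(network, {"A", "B"}, threshold=1)))  # all seven nodes
print(sorted(spread(network, {"A", "B"}, threshold=2)))  # ['A', 'B', 'C', 'D', 'E']
print(sorted(spread(network, {"A"}, threshold=2)))       # ['A']
```

With a threshold of two, the idea saturates the dense cluster but stalls at F, which has only one adopting neighbor. A single seed never gets it going at all.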

In addition to showing us items that conform with our views, social media platforms such as Facebook, Twitter, YouTube and Instagram place popular content at the top of our screens and show us how many people have liked and shared something. Few of us realize that these cues do not provide independent assessments of quality.

Programmers who design the algorithms for ranking memes on social media assume that the “wisdom of crowds” will quickly identify high-quality items; they use popularity as a proxy for quality. My note: again, ill-conceived folksonomy.
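This hedged sketch shows why popularity is a poor proxy for quality once ranking feeds back into engagement. The ranker sorts only by likes. Each round, the top slots collect more new likes than lower ones, following a hypothetical position bias of 3, 2 and 1 extra likes. The items and their quality scores are invented.

```python
from dataclasses import dataclass

@dataclass
class Item:
    title: str
    quality: float  # hidden "true" quality; the ranker never looks at it
    likes: int

def rank_by_popularity(items):
    """Popularity as a proxy for quality: sort by likes alone."""
    return sorted(items, key=lambda item: item.likes, reverse=True)

def run_feed(items, rounds, position_bias=(3, 2, 1)):
    """Each round, slot i (top first) gains position_bias[i] new likes."""
    for _ in range(rounds):
        for item, extra in zip(rank_by_popularity(items), position_bias):
            item.likes += extra
    return rank_by_popularity(items)

items = [
    Item("viral rumor", quality=0.2, likes=12),
    Item("careful report", quality=0.9, likes=10),
    Item("explainer", quality=0.7, likes=9),
]
for item in run_feed(items, rounds=5):
    print(item.title, item.likes)
# viral rumor 27
# careful report 20
# explainer 14
```

The low-quality item's small early lead of 12 vs. 10 likes grows to 27 vs. 20, because position, not quality, decides who gets the next likes.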

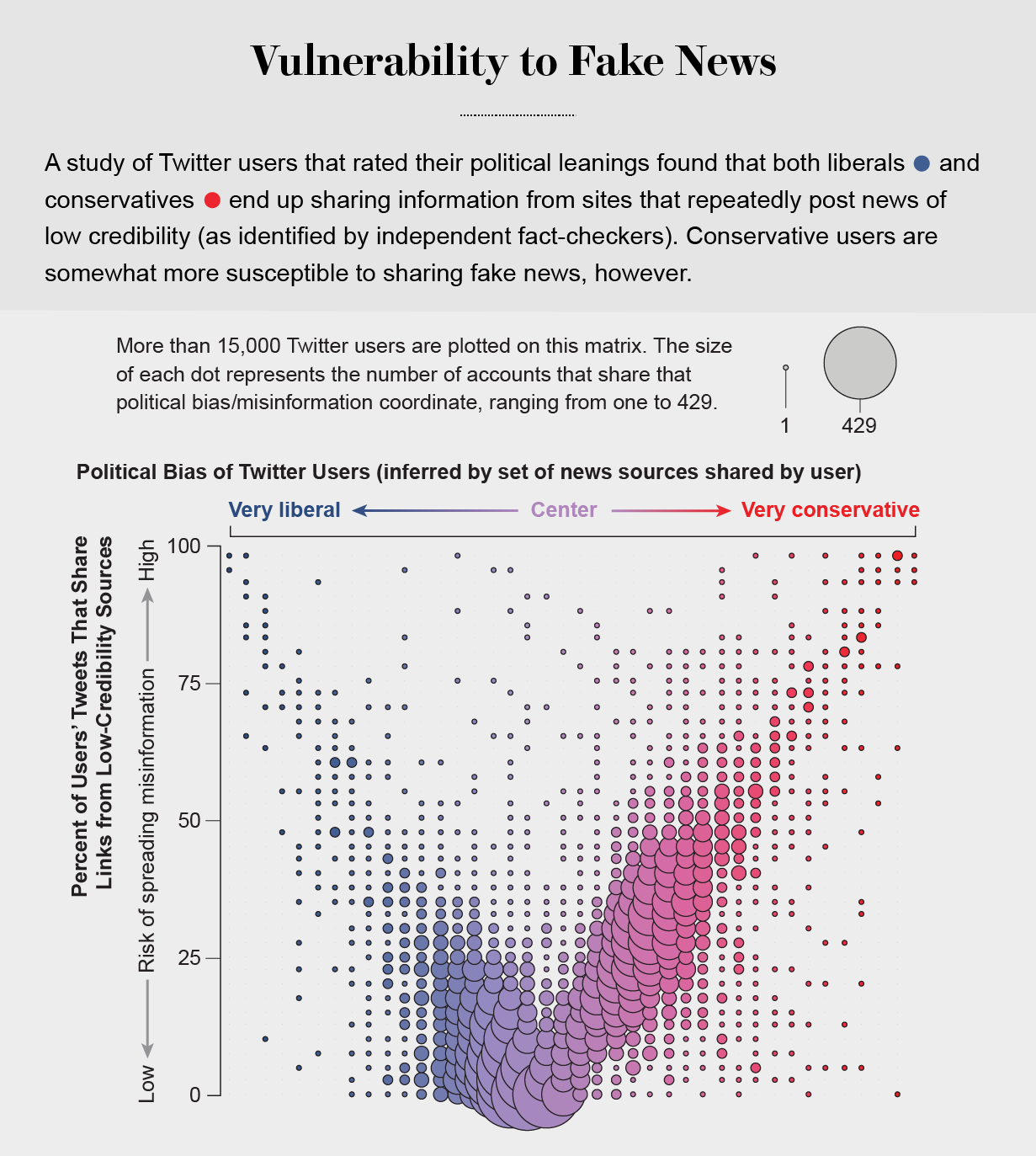

Echo Chambers

The political echo chambers on Twitter are so extreme that individual users’ political leanings can be predicted with high accuracy: you have the same opinions as the majority of your connections. This chambered structure efficiently spreads information within a community while insulating that community from other groups.
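Here is a minimal sketch of the "majority of your connections" predictor. A user's leaning is guessed as the most common known leaning among their connections, and accuracy is the share of labeled users the guess gets right. The two-cluster network and its labels are hypothetical. Real studies use much larger networks and often more elaborate classifiers.

```python
from collections import Counter

def predict_leaning(user, neighbors, known):
    """Most common known leaning among the user's connections (None if none known)."""
    votes = Counter(known[n] for n in neighbors[user] if n in known)
    return votes.most_common(1)[0][0] if votes else None

def accuracy(neighbors, known):
    """Share of labeled users whose label matches the neighbor-majority guess.

    The user's own label is never used, because a user is not their own neighbor.
    """
    guesses = {u: predict_leaning(u, neighbors, known) for u in known}
    scored = [u for u, g in guesses.items() if g is not None]
    return sum(guesses[u] == known[u] for u in scored) / len(scored)

# Hypothetical network: two clusters joined by a single u1-u4 tie.
connections = {
    "u1": ["u2", "u3", "u4"], "u2": ["u1", "u3"], "u3": ["u1", "u2"],
    "u4": ["u1", "u5", "u6"], "u5": ["u4", "u6"], "u6": ["u4", "u5"],
}
leanings = {"u1": "left", "u2": "left", "u3": "left",
            "u4": "right", "u5": "right", "u6": "right"}
print(predict_leaning("u4", connections, leanings))  # right
print(accuracy(connections, leanings))               # 1.0
```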

Socially shared information not only bolsters our biases but also becomes more resilient to correction.

The OSoMe researchers have developed machine-learning algorithms to detect social bots. One of these, Botometer, is a public tool that extracts 1,200 features from a given Twitter account to characterize its profile, friends, social network structure, temporal activity patterns, language and other attributes. The program compares these characteristics with those of tens of thousands of previously identified bots to give the Twitter account a score for its likely use of automation.
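Botometer's actual model and its 1,200 features are not reproduced here. The sketch below illustrates the general idea of scoring an account by how closely it resembles previously identified bots. It uses k-nearest neighbors over three pre-scaled features. The feature names and labeled examples are invented for illustration.

```python
import math

# Hypothetical pre-scaled features in [0, 1]:
# (posting rate, fraction of posts that are retweets, uses default profile image)
LABELED = [
    ((0.90, 0.95, 1.0), True),
    ((0.80, 0.90, 1.0), True),
    ((0.95, 0.85, 0.0), True),
    ((0.10, 0.30, 0.0), False),
    ((0.20, 0.10, 0.0), False),
    ((0.05, 0.40, 1.0), False),
]

def bot_score(features, labeled=LABELED, k=3):
    """Fraction of the k labeled accounts nearest to `features` that are bots."""
    nearest = sorted(labeled, key=lambda example: math.dist(features, example[0]))[:k]
    return sum(is_bot for _, is_bot in nearest) / len(nearest)

print(round(bot_score((0.85, 0.90, 1.0)), 2))  # 0.67
print(bot_score((0.10, 0.20, 0.0)))            # 0.0
```

Nearest-neighbor scoring is only one simple way to "compare with known bots". A production detector would typically train a supervised classifier on many more labeled accounts.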

Some manipulators play both sides of a divide through separate fake news sites and bots, driving political polarization or monetization by ads.

The researchers recently uncovered a network of inauthentic accounts on Twitter that were all coordinated by the same entity. Some pretended to be pro-Trump supporters of the Make America Great Again campaign, whereas others posed as Trump “resisters”; all asked for political donations.

The OSoMe team also built a mobile app called Fakey that helps users learn how to spot misinformation. The game simulates a social media news feed, showing actual articles from low- and high-credibility sources. Users must decide what to share, what not to share and what to fact-check. Analysis of data from Fakey confirms the prevalence of online social herding: users are more likely to share low-credibility articles when they believe that many other people have shared them.

Another OSoMe tool, Hoaxy, shows how any extant meme spreads through Twitter. In this visualization, nodes represent actual Twitter accounts, and links depict how retweets, quotes, mentions and replies propagate the meme from account to account.
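The kind of diffusion network Hoaxy visualizes can be represented as a directed graph whose edges point in the direction a meme travelled. This sketch assumes a hypothetical list of (from_account, to_account, kind) records, not Hoaxy's real data format. It uses breadth-first search to list every account the meme reached from a given origin.

```python
from collections import defaultdict, deque

def build_graph(events):
    """events: (from_account, to_account, kind) records, e.g. kind='retweet'."""
    graph = defaultdict(list)
    for src, dst, kind in events:
        graph[src].append((dst, kind))
    return graph

def reached_from(graph, origin):
    """Breadth-first search: every account the meme reached starting at origin."""
    seen = {origin}
    queue = deque([origin])
    while queue:
        account = queue.popleft()
        for nxt, _kind in graph.get(account, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

events = [
    ("origin", "alice", "retweet"),
    ("origin", "bob", "quote"),
    ("alice", "carol", "retweet"),
    ("bob", "dave", "reply"),
    ("erin", "frank", "mention"),  # an unrelated cascade
]
graph = build_graph(events)
print(sorted(reached_from(graph, "origin")))  # ['alice', 'bob', 'carol', 'dave', 'origin']
spreaders = sorted(graph, key=lambda a: len(graph[a]), reverse=True)
print(spreaders[0])  # origin
```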

Free communication is not free. By decreasing the cost of information, we have decreased its value and invited its adulteration.

https://www.axios.com/social-media-companies-look-same-tiktok-stories-snapchat-spotlight-2dd1da51-cf45-4f6b-a0ab-0db79f6b7793.html

Tech platforms used to focus on ways to create wildly different products to attract audiences. Today, they all have similar features, and instead differentiate themselves with their philosophies, values and use cases.

++++++++++++

more on SM in this IMS blog

https://blog.stcloudstate.edu/ims?s=social+media

https://www.npr.org/2020/08/13/901419012/with-more-transparency-on-election-security-a-question-looms-what-dont-we-know

a historic report last week from the nation’s top boss of counterintelligence.

the need for the United States to order the closure of the Chinese government’s consulate in Houston.

metaphor for this aspect of the spy game: a layer cake.

There’s a layer of activity that is visible to all — the actions or comments of public figures, or statements made via official channels.

Then there’s a clandestine layer that is usually visible only to another clandestine service: the work of spies being watched by other spies.

Counterintelligence officials watching Chinese intelligence activities in Houston, for example, knew the consulate was a base for efforts to steal intellectual property or recruit potential agents.

And there’s at least a third layer about which the official statements raised questions: the work of spies who are operating without being detected.

The challenges of election security include its incredible breadth — every county in the United States is a potential target — and vast depth, from the prospect of cyberattacks on voter systems, to the theft of information that can then be released to embarrass a target, to the ongoing and messy war on social media over disinformation and political agitation.

Witnesses have told Congress that when Facebook and Twitter made it more difficult to create and use fake accounts to spread disinformation and amplify controversy, Russia and China began to rely more on open channels.

In 2016, Russian influencemongers posed as fake Americans and engaged with them as though they were responding to the same election alongside one another. Russian operatives even used Facebook to organize real-world campaign events across the United States.

But RT’s account on Twitter or China’s foreign ministry representatives aren’t pretending to do anything but serve as voices for Moscow or Beijing.

the offer of a $10 million bounty for information about threats to the election.

+++++++++++++++++++

more on trolls in this IMS blog

https://blog.stcloudstate.edu/ims?s=troll

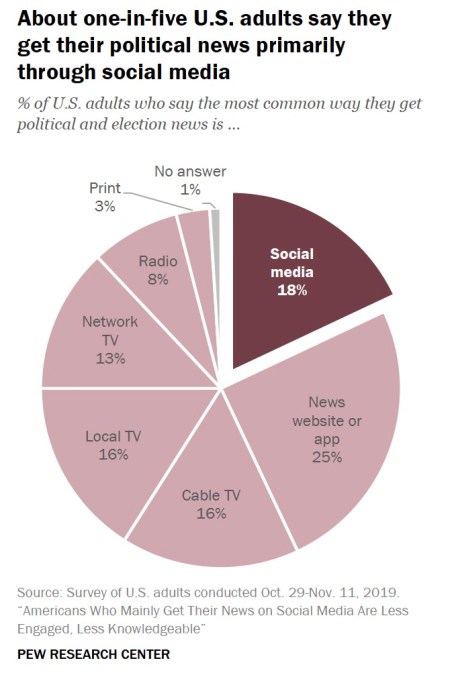

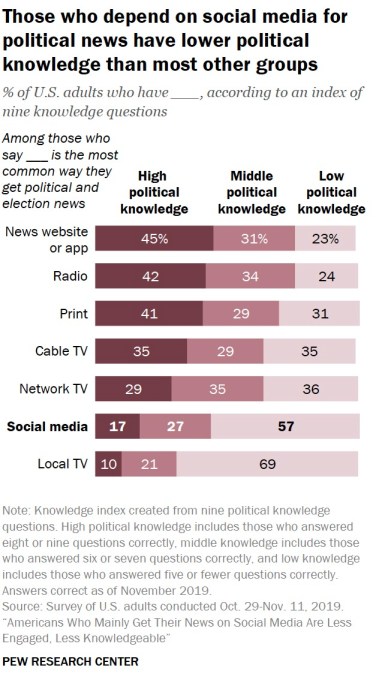

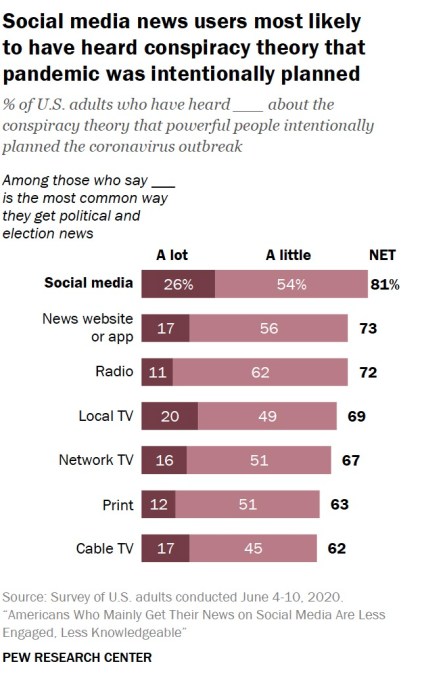

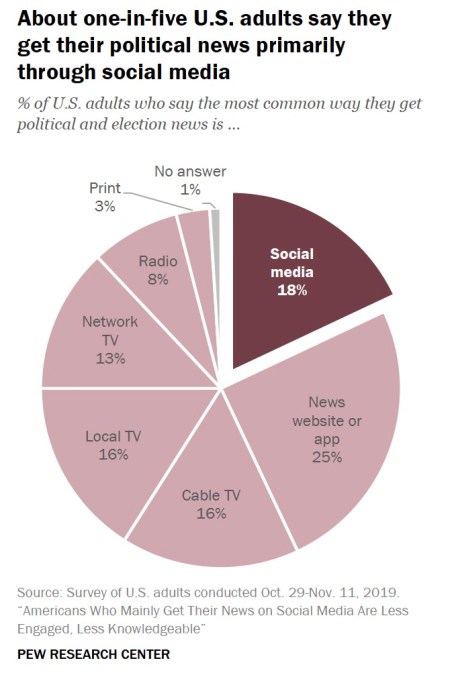

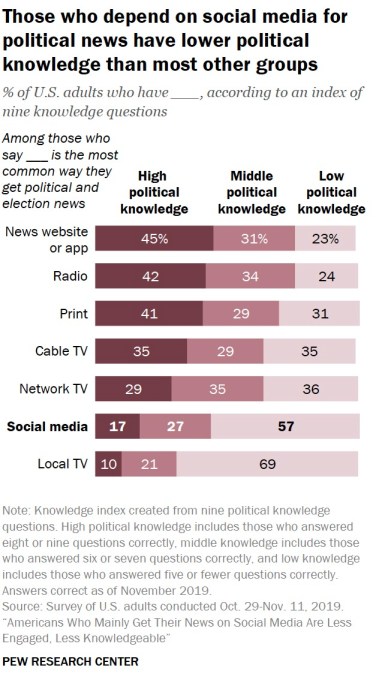

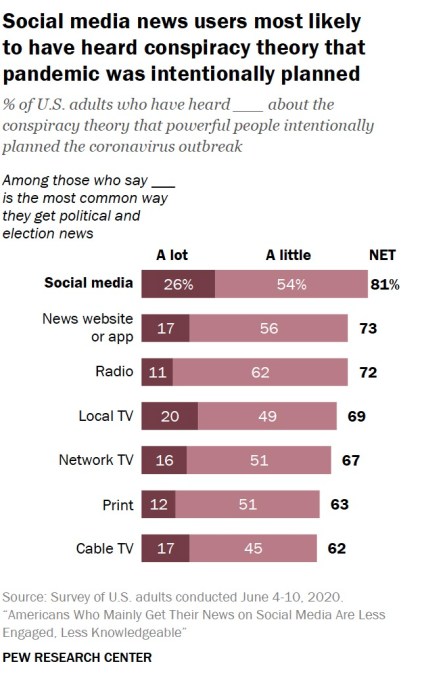

Study: US adults who mostly rely on social media for news are less informed, exposed to more conspiracies

A new report from Pew Research attempts to better understand U.S. adults who get their news largely from social media platforms and to compare their understanding of current events and their political knowledge with those of people who use other sources, such as TV, radio and news publications.

Many Tech Experts Say Digital Disruption Will Hurt Democracy

The years of almost unfettered enthusiasm about the benefits of the internet have been followed by a period of techlash as users worry about the actors who exploit the speed, reach and complexity of the internet for harmful purposes. Over the past four years – a time of the Brexit decision in the United Kingdom, the American presidential election and a variety of other elections – the digital disruption of democracy has been a leading concern.

Some think the information and trust environment will worsen by 2030 thanks to the rise of video deepfakes, cheapfakes and other misinformation tactics.

Power Imbalance: Democracy is at risk because those with power will seek to maintain it by building systems that serve them, not the masses. Too few in the general public possess enough knowledge to resist this assertion of power.

EXPLOITING DIGITAL ILLITERACY

danah boyd, principal researcher at Microsoft Research and founder of Data & Society, wrote, “The problem is that technology mirrors and magnifies the good, bad AND ugly in everyday life. And right now, we do not have the safeguards, security or policies in place to prevent manipulators from doing significant harm with the technologies designed to connect people and help spread information.”

+++++++++++++++

more on social media in this IMS blog

https://blog.stcloudstate.edu/ims?s=social+media

Leaked documents show how police used social media and private Slack channels to track George Floyd protesters

https://www.businessinsider.com/blueleaks-how-cops-tracked-george-floyd-protesters-on-social-media-2020-6

Police monitored RSVP lists on Facebook events, shared information about Slack channels protesters were using, and cited protesters’ posts in encrypted messaging apps like Telegram.

How police used social media to track protesters

In a warning sent to police departments on June 6, the FBI says it’s been tracking “individuals using Facebook, Snapchat, and Instagram” who post about organizing protests.

+++++++++++++++++++

more on surveillance in this IMS blog

https://blog.stcloudstate.edu/ims?s=surveillance

https://www.edsurge.com/news/2019-01-22-educators-share-how-video-games-can-help-kids-build-sel-skills/

social-emotional learning (SEL) skills

the intersection of teacher education, learning technologies and game-based learning. He thinks educators shouldn’t ignore video games if they want students to be media-literate, because they are the “storytelling medium of the 21st century.”

Gaming can help build other SEL skills, such as empathy.

Video games are good for teaching kids problem-solving and ethical decision-making

Some experts have expressed concern about how video games affect children. According to the Washington Post, the World Health Organization has recognized “gaming disorder”—characterized as a lasting addiction to video games—as a condition. Yet, not all experts agree that “game addiction” should be pathologized.

+++++++++++

more on video games in this IMS blog

https://blog.stcloudstate.edu/ims?s=video+games

9 ways real students use social media for good

Michael Niehoff October 2, 2019

https://www.iste.org/explore/Digital-citizenship/9-ways-real-students-use-social-media-for-good

1. Sharing tools and resources.

2. Gathering survey data.

3. Collaborating with peers.

4. Participating in group work.

5. Communicating with teachers.

6. Researching careers.

7. Meeting with mentors and experts.

8. Showcasing student work.

9. Creating digital portfolios.

+++++++++++++

more about social media in education in this IMS blog

https://blog.stcloudstate.edu/ims?s=social+media+education

Digital Media Has a Misinformation Problem—but It’s an Opportunity for Teaching.

Jennifer Sparrow Dec 13, 2018

https://www.edsurge.com/news/2018-12-13-digital-media-has-a-misinformation-problem-but-it-s-an-opportunity-for-teaching

Research has shown that 50 percent of college students spend a minimum of five hours each week on social media. These social channels feed information from news outlets, private bloggers, friends and family, and myriad other sources that are often curated based on the user’s interests. But what really makes social media a tricky resource for students and educators alike is that most companies don’t view themselves as content publishers. This position essentially absolves social media platforms of the responsibility to monitor what their users share, and that can allow false, even harmful, information to circulate.

“How do we help students become better consumers of information, data, and communication?” Fluency in each of these areas is integral to 21st-century citizenship, for which we must prepare students.

In English 202C, a technical writing course, students use our Invention Studio and littleBits to practice inventing their own electronic devices, write instructions for how to construct the device, and have classmates reproduce the invention.

The proliferation of mobile devices and high-speed Wi-Fi has made videos a common outlet for information-sharing. To keep up with the changing means of communication, Penn State campuses are equipped with One Button Studio, where students can learn to produce professional-quality video. With this, students must learn how to take information and translate it into a visual medium in a way that will best benefit the intended audience. They can also use the studios to hone their presentation or interview skills by recording practice sessions and then reviewing the footage.

++++++++++++++

more on digital media in this IMS blog

https://blog.stcloudstate.edu/ims?s=digital+media

https://www.edsurge.com/news/2019-08-28-colleges-face-investigations-over-whether-their-use-of-social-media-follows-accessibility-regulations

Nearly 200 colleges face federal civil rights investigations, opened in 2019, into whether they are accessible to and communicate effectively with people with disabilities.

As a result, colleges are rolling out social media accessibility standards and training communications staff members to take advantage of built-in accessibility tools in platforms including YouTube, Facebook and Twitter.

For example, last fall, a blind man filed 50 lawsuits against colleges whose websites he said didn’t work with his screen reader. And on August 21, in Payan v. Los Angeles Community College District, the Federal District Court for the Central District of California ruled that Los Angeles Community College failed to provide a blind student with “meaningful access to his course materials” via MyMathLab, software developed by Pearson, in a timely manner.

YouTube and Facebook have options to automatically generate captions on videos posted there, while Twitter users with access to its still-developing Media Studio can upload videos with captions. Users can provide alt text, a short written description of an image, through Facebook, Twitter, Instagram and Hootsuite.

California State University at Long Beach, for instance, advises posting main information first and hashtags last to make messages clear for people using screen readers. The University of Minnesota calls for indicating whether hyperlinks point to [AUDIO], [PIC], or [VIDEO]. This summer, leaders at the College of William & Mary held a training workshop for the institution’s communications staff in response to an Office for Civil Rights investigation.

an online website accessibility center.

+++++++++

more on SM in education

https://blog.stcloudstate.edu/ims?s=social+media+education