Tag: artificial intelligence

China electric cars

+++++++++++

more on ethics and AI in this IMS blog

https://blog.stcloudstate.edu/ims?s=ethics

deep learning revolution

How deep learning, from Google Translate to driverless cars to personal cognitive assistants, is changing our lives and transforming every sector of the economy.

The deep learning revolution has brought us driverless cars, the greatly improved Google Translate, fluent conversations with Siri and Alexa, and enormous profits from automated trading on the New York Stock Exchange. Deep learning networks can play poker better than professional poker players and defeat a world champion at Go. In this book, Terry Sejnowski explains how deep learning went from being an arcane academic field to a disruptive technology in the information economy.

Sejnowski played an important role in the founding of deep learning, as one of a small group of researchers in the 1980s who challenged the prevailing logic-and-symbol based version of AI. The new version of AI Sejnowski and others developed, which became deep learning, is fueled instead by data. Deep networks learn from data in the same way that babies experience the world, starting with fresh eyes and gradually acquiring the skills needed to navigate novel environments. Learning algorithms extract information from raw data; information can be used to create knowledge; knowledge underlies understanding; understanding leads to wisdom. Someday a driverless car will know the road better than you do and drive with more skill; a deep learning network will diagnose your illness; a personal cognitive assistant will augment your puny human brain. It took nature many millions of years to evolve human intelligence; AI is on a trajectory measured in decades. Sejnowski prepares us for a deep learning future.

A pioneering scientist explains ‘deep learning’

Artificial intelligence meets human intelligence

Buzzwords like “deep learning” and “neural networks” are everywhere, but so much of the popular understanding is misguided, says Terrence Sejnowski, a computational neuroscientist at the Salk Institute for Biological Studies.

Sejnowski, a pioneer in the study of learning algorithms, is the author of The Deep Learning Revolution (out next week from MIT Press). He argues that the hype about killer AI or robots making us obsolete ignores exciting possibilities happening in the fields of computer science and neuroscience, and what can happen when artificial intelligence meets human intelligence.

Machine learning is a very large field and goes way back. Originally, people were calling it “pattern recognition,” but the algorithms became much broader and much more sophisticated mathematically. Within machine learning are neural networks inspired by the brain, and then deep learning. Deep learning algorithms have a particular architecture, with many layers through which information flows. So basically, deep learning is one part of machine learning and machine learning is one part of AI.
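The idea of "many layers" can be made concrete with a minimal sketch, assuming the simplest kind of network: each layer computes weighted sums of its inputs followed by a nonlinearity, and the output of one layer becomes the input of the next. The weights are arbitrary illustrative values, not a trained model, and the helper names (`dense_layer`, `forward`, `LAYERS`) are hypothetical. For brevity the ReLU nonlinearity is applied at every layer, including the last.

```python
def relu(x: float) -> float:
    """Rectified linear unit: a common nonlinearity between layers."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each unit takes a weighted sum of all
    inputs, adds its bias, and applies ReLU."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the input through each layer in turn; a 'deep' network is
    simply one with many such layers stacked."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Illustrative, untrained weights: 2 inputs -> 3 units -> 2 units -> 1 output.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2]),
    ([[1.0, -1.0, 0.5], [0.2, 0.4, -0.3]], [0.0, 0.0]),
    ([[0.7, 1.2]], [0.05]),
]

if __name__ == "__main__":
    print(forward([1.0, 2.0], LAYERS))  # a single output value, about 1.034
```

Training (adjusting the weights from data) is what turns such a stack into a useful model; the sketch only shows how a signal flows through the layers.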

The breakthrough came in December 2012 at the NIPS meeting, which is the biggest AI conference. There, [computer scientist] Geoff Hinton and two of his graduate students showed you could take a very large dataset called ImageNet, with 10,000 categories and 10 million images, and reduce the classification error by 20 percent using deep learning. Traditionally, on that dataset, error decreases by less than 1 percent in one year. In one year, 20 years of research was bypassed. That really opened the floodgates.
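The "20 years" figure is simple arithmetic on the two rates quoted above: the one-time improvement divided by the typical yearly gain.

$$\frac{20\ \text{percent (improvement in one year)}}{<1\ \text{percent per year (typical gain)}} > 20\ \text{years of typical progress}$$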

The inspiration for deep learning really comes from neuroscience.

AlphaGo, the program that beat the Go champion, included not just a model of the cortex but also a model of a part of the brain called the basal ganglia, which is important for making a sequence of decisions to meet a goal. There’s an algorithm there called temporal difference learning, developed back in the ’80s by Richard Sutton, that, when coupled with deep learning, is capable of very sophisticated plays that no human has ever seen before.
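Temporal difference learning can be sketched in a few lines. The example below is a minimal TD(0) value estimator on a five-state random walk, a standard textbook toy problem and not AlphaGo's actual setup: after each step, the estimate for the current state is nudged toward the observed reward plus the current estimate for the next state. The step size `alpha`, discount `gamma`, episode count and function name are illustrative assumptions.

```python
import random


def td0_random_walk(episodes=5000, alpha=0.01, gamma=1.0, seed=0):
    """Estimate state values with TD(0) on a 5-state random walk.

    States 1..5 are non-terminal; 0 and 6 are terminal. Each step moves
    left or right with equal probability. Reaching state 6 pays reward 1;
    every other transition pays 0.
    """
    rng = random.Random(seed)
    values = [0.0] * 7          # values[0] and values[6] stay 0 (terminal states)
    for _ in range(episodes):
        state = 3               # every episode starts in the middle state
        while state not in (0, 6):
            next_state = state + rng.choice((-1, 1))
            reward = 1.0 if next_state == 6 else 0.0
            # TD error: how much better or worse things turned out than predicted
            td_error = reward + gamma * values[next_state] - values[state]
            values[state] += alpha * td_error
            state = next_state
    return values[1:6]


if __name__ == "__main__":
    # The true values for this walk are 1/6, 2/6, 3/6, 4/6, 5/6.
    print([round(v, 2) for v in td0_random_walk()])
```

The key design point is that the program learns from its own successive predictions rather than waiting for the final outcome of each episode, which is what makes the method suited to long sequences of decisions.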

There’s a convergence occurring between AI and human intelligence. As we learn more and more about how the brain works, that’s going to reflect back in AI. But at the same time, AI researchers are creating a whole theory of learning that can be applied to understanding the brain, allowing us to analyze thousands of neurons and how their activity unfolds. So there’s this feedback loop between neuroscience and AI.

Inclusive Design of Artificial Intelligence

EASI Free Webinar: Inclusive Design of Artificial Intelligence Thursday

+++++++++++

more on AI in this IMS blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence

AI and ethics

Live Facebook discussion at SCSU VizLab on ethics and technology:

Heard on Marketplace this morning (Oct. 22, 2018): ethics of artificial intelligence with John Havens of the Institute of Electrical and Electronics Engineers, which has developed a new ethics certification process for AI: https://standards.ieee.org/content/dam/ieee-standards/standards/web/documents/other/ec_bios.pdf

Ethics and AI

*** The student club, the Philosophical Society, has now been recognized by SCSU as a student organization ***

https://ed.ted.com/lessons/the-ethical-dilemma-of-self-driving-cars-patrick-lin

Could it be the case that a random decision is still better than a predetermined one designed to minimize harm?

Similar ethical considerations are also raised in this sitcom:

https://www.youtube.com/watch?v=JWb_svTrcOg

https://www.theatlantic.com/sponsored/hpe-2018/the-ethics-of-ai/1865/ (full movie)

https://youtu.be/2xCkFUJSZ8Y

This TED talk:

https://blog.stcloudstate.edu/ims/2017/09/19/social-media-algorithms/

https://blog.stcloudstate.edu/ims/2018/10/02/social-media-monopoly/

+++++++++++++++++++

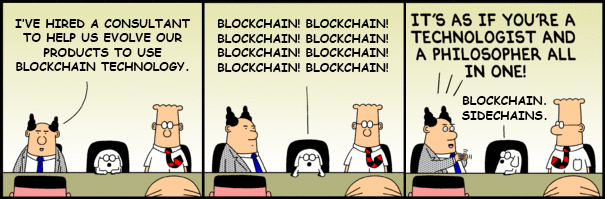

IoT (Internet of Things), Industry 4.0, Big Data, BlockChain

+++++++++++++++++++

IoT (Internet of Things), Industry 4.0, Big Data, BlockChain, Privacy, Security, Surveillance

https://blog.stcloudstate.edu/ims?s=internet+of+things

Peer-reviewed literature:

Keyword search: ethic* + Internet of Things = 31

Baldini, G., Botterman, M., Neisse, R., & Tallacchini, M. (2018). Ethical Design in the Internet of Things. Science & Engineering Ethics, 24(3), 905–925. https://doi-org.libproxy.stcloudstate.edu/10.1007/s11948-016-9754-5

Berman, F., & Cerf, V. G. (2017). Social and Ethical Behavior in the Internet of Things. Communications of the ACM, 60(2), 6–7. https://doi-org.libproxy.stcloudstate.edu/10.1145/3036698

Murdock, G. (2018). Media Materialities: For A Moral Economy of Machines. Journal of Communication, 68(2), 359–368. https://doi-org.libproxy.stcloudstate.edu/10.1093/joc/jqx023

Carrier, J. G. (2018). Moral economy: What’s in a name. Anthropological Theory, 18(1), 18–35. https://doi-org.libproxy.stcloudstate.edu/10.1177/1463499617735259

Kernaghan, K. (2014). Digital dilemmas: Values, ethics and information technology. Canadian Public Administration, 57(2), 295–317. https://doi-org.libproxy.stcloudstate.edu/10.1111/capa.12069

Koucheryavy, Y., Kirichek, R., Glushakov, R., & Pirmagomedov, R. (2017). Quo vadis, humanity? Ethics on the last mile toward cybernetic organism. Russian Journal of Communication, 9(3), 287–293. https://doi-org.libproxy.stcloudstate.edu/10.1080/19409419.2017.1376561

Keyword search: ethic* + autonomous vehicles = 46

Cerf, V. G. (2017). A Brittle and Fragile Future. Communications of the ACM, 60(7), 7. https://doi-org.libproxy.stcloudstate.edu/10.1145/3102112

Fleetwood, J. (2017). Public Health, Ethics, and Autonomous Vehicles. American Journal of Public Health, 107(4), 532–537. https://doi-org.libproxy.stcloudstate.edu/10.2105/AJPH.2016.303628

Harris, J. (2018). Who Owns My Autonomous Vehicle? Ethics and Responsibility in Artificial and Human Intelligence. Cambridge Quarterly of Healthcare Ethics, 27(4), 599–609. https://doi-org.libproxy.stcloudstate.edu/10.1017/S0963180118000038

Keeling, G. (2018). Legal Necessity, Pareto Efficiency & Justified Killing in Autonomous Vehicle Collisions. Ethical Theory & Moral Practice, 21(2), 413–427. https://doi-org.libproxy.stcloudstate.edu/10.1007/s10677-018-9887-5

Hevelke, A., & Nida-Rümelin, J. (2015). Responsibility for Crashes of Autonomous Vehicles: An Ethical Analysis. Science & Engineering Ethics, 21(3), 619–630. https://doi-org.libproxy.stcloudstate.edu/10.1007/s11948-014-9565-5

Getha-Taylor, H. (2017). The Problem with Automated Ethics. Public Integrity, 19(4), 299–300. https://doi-org.libproxy.stcloudstate.edu/10.1080/10999922.2016.1250575

Keyword search: ethic* + artificial intelligence = 349

Etzioni, A., & Etzioni, O. (2017). Incorporating Ethics into Artificial Intelligence. Journal of Ethics, 21(4), 403–418. https://doi-org.libproxy.stcloudstate.edu/10.1007/s10892-017-9252-2

Köse, U. (2018). Are We Safe Enough in the Future of Artificial Intelligence? A Discussion on Machine Ethics and Artificial Intelligence Safety. BRAIN: Broad Research in Artificial Intelligence & Neuroscience, 9(2), 184–197. Retrieved from http://login.libproxy.stcloudstate.edu/login?qurl=http%3a%2f%2fsearch.ebscohost.com%2flogin.aspx%3fdirect%3dtrue%26db%3daph%26AN%3d129943455%26site%3dehost-live%26scope%3dsite
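The keyword searches above rely on truncation: `ethic*` is meant to match any word beginning with "ethic" (ethic, ethics, ethical, ethically). A minimal sketch of that matching rule, assuming simple single-word prefix semantics and using Python's standard `fnmatch` module; real database engines such as EBSCOhost apply their own rules for stemming, phrases and field searching, and the function and word list here are hypothetical.

```python
from fnmatch import fnmatchcase


def truncation_matches(pattern: str, words):
    """Return the words matched by a truncation pattern such as 'ethic*'.

    Matching is case-insensitive and applies to whole single words;
    '*' stands for zero or more trailing characters.
    """
    pattern = pattern.lower()
    return [w for w in words if fnmatchcase(w.lower(), pattern)]


TITLE_WORDS = ["Ethical", "Design", "Ethics", "ethic", "aesthetic", "Internet"]

if __name__ == "__main__":
    print(truncation_matches("ethic*", TITLE_WORDS))  # ['Ethical', 'Ethics', 'ethic']
```

Because the whole word must match the pattern, "aesthetic" is not retrieved even though it contains "ethic".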

++++++++++++++++

http://www.cts.umn.edu/events/conference/2018

2018 CTS Transportation Research Conference

Keynote presentations will explore the future of driving and the evolution and potential of automated vehicle technologies.

+++++++++++++++++++

https://blog.stcloudstate.edu/ims/2016/02/26/philosophy-and-technology/

+++++++++++++++++++

more on AI in this IMS blog

https://blog.stcloudstate.edu/ims/2018/09/07/limbic-thought-artificial-intelligence/

AI and autonomous cars as ALA discussion topic

https://blog.stcloudstate.edu/ims/2018/01/11/ai-autonomous-cars-libraries/

and privacy concerns

https://blog.stcloudstate.edu/ims/2018/09/14/ai-for-education/

the call of the German scientists on ethics and AI

https://blog.stcloudstate.edu/ims/2018/09/01/ethics-and-ai/

AI in the race for world dominance

https://blog.stcloudstate.edu/ims/2018/04/21/ai-china-education/

AI for Education

The Promise (and Pitfalls) of AI for Education

Artificial intelligence could have a profound impact on learning, but it also raises key questions.

By Dennis Pierce, Alice Hathaway 08/29/18

https://thejournal.com/articles/2018/08/29/the-promise-of-ai-for-education.aspx

Artificial intelligence (AI) and machine learning are no longer fantastical prospects seen only in science fiction. Products like Amazon Echo and Siri have brought AI into many homes.

Kelly Calhoun Williams, an education analyst for the technology research firm Gartner Inc., cautions there is a clear gap between the promise of AI and the reality of AI.

Artificial intelligence is a broad term used to describe any technology that emulates human intelligence, such as by understanding complex information, drawing its own conclusions and engaging in natural dialog with people.

Machine learning is a subset of AI in which the software can learn or adapt like a human can. Essentially, it analyzes huge amounts of data and looks for patterns in order to classify information or make predictions. The addition of a feedback loop allows the software to “learn” as it goes by modifying its approach based on whether the conclusions it draws are right or wrong.
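The feedback loop described above can be illustrated with the classic perceptron, one of the simplest error-driven learners, chosen here only for brevity (the article does not say which algorithms these products use). The model makes a prediction, and only when the prediction is wrong does it adjust its weights toward the correct answer. The toy task (learning logical AND), the learning rate and the function names are illustrative assumptions.

```python
def predict(weights, bias, x):
    """Classify x as 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=0.1):
    """Perceptron learning: weights change only when a prediction is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = label - predict(weights, bias, x)  # 0 if right, +1 or -1 if wrong
            if error:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


# Toy task: learn logical AND from labelled examples.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_SAMPLES)
    print([predict(w, b, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

"Right or wrong" feedback is all the learner receives; modern systems use far richer models, but the loop of predict, compare, adjust is the same idea.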

AI can process far more information than a human can, and it can perform tasks much faster and with more accuracy. Some curriculum software developers have begun harnessing these capabilities to create programs that can adapt to each student’s unique circumstances.

For instance, a Seattle-based nonprofit company called Enlearn has developed an adaptive learning platform that uses machine learning technology to create highly individualized learning paths that can accelerate learning for every student. (My note: on learning and technology, see Alfie Kohn in https://blog.stcloudstate.edu/ims/2018/09/11/educational-technology/)

GoGuardian, a Los Angeles company, uses machine learning technology to improve the accuracy of its cloud-based Internet filtering and monitoring software for Chromebooks. (My note: that smells of Big Brother.) Instead of blocking students’ access to questionable material based on a website’s address or domain name, GoGuardian’s software uses AI to analyze the actual content of a page in real time to determine whether it’s appropriate for students. (My note: privacy)
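The difference between address-based blocking and content analysis can be sketched as follows. This is a deliberately naive illustration with a hypothetical blocklist, a hypothetical list of flagged terms and a made-up threshold; it is not GoGuardian's method, which the article describes only as using AI on page content, and a real system would use a trained classifier rather than a fixed word list.

```python
from urllib.parse import urlparse

# Hypothetical blocklist: the traditional approach judges only the address.
BLOCKED_DOMAINS = {"games.example.com"}

# Hypothetical flagged terms, standing in for a trained content classifier.
FLAGGED_TERMS = {"gambling", "casino", "betting"}


def blocked_by_address(url: str) -> bool:
    """Block if the page's host name is on the list, whatever the page says."""
    return urlparse(url).hostname in BLOCKED_DOMAINS


def blocked_by_content(page_text: str, threshold: float = 0.05) -> bool:
    """Block if flagged terms make up more than `threshold` of the page's words."""
    words = [w.strip(".,!?").lower() for w in page_text.split()]
    if not words:
        return False
    flagged = sum(1 for w in words if w in FLAGGED_TERMS)
    return flagged / len(words) > threshold


if __name__ == "__main__":
    url = "https://news.example.org/story"
    text = "Online casino betting ads target teens"
    print(blocked_by_address(url), blocked_by_content(text))  # False True
```

The content-based check only works because it reads every page a student opens, which is exactly where the privacy concern noted above comes from.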

AI in schools also raises serious privacy concerns. It requires an increased focus not only on data quality and accuracy, but also on the responsible stewardship of this information. “School leaders need to get ready for AI from a policy standpoint,” Calhoun Williams said. For instance: What steps will administrators take to secure student data and ensure the privacy of this information?

++++++++++++

more on AI in education in this IMS blog

https://blog.stcloudstate.edu/ims?s=artifical+intelligence

Limbic thought and artificial intelligence

September 5, 2018 Siddharth (Sid) Pai

https://www.linkedin.com/pulse/limbic-thought-artificial-intelligence-siddharth-sid-pai/

Stephen Hawking warns artificial intelligence could end mankind

An AI Wake-Up Call From Ancient Greece

++++++++++++++++++++

more on AI in this IMS blog

https://blog.stcloudstate.edu/ims?s=artifical+intelligence

coding ethics unpredictability

Franken-algorithms: the deadly consequences of unpredictable code

by Andrew Smith Thu 30 Aug 2018 01.00 EDT

https://www.theguardian.com/technology/2018/aug/29/coding-algorithms-frankenalgos-program-danger

Between the “dumb” fixed algorithms and true AI lies the problematic halfway house we’ve already entered with scarcely a thought and almost no debate, much less agreement as to aims, ethics, safety, best practice. If the algorithms around us are not yet intelligent, meaning able to independently say “that calculation/course of action doesn’t look right: I’ll do it again”, they are nonetheless starting to learn from their environments. And once an algorithm is learning, we no longer know to any degree of certainty what its rules and parameters are. At which point we can’t be certain of how it will interact with other algorithms, the physical world, or us. Where the “dumb” fixed algorithms – complex, opaque and inured to real time monitoring as they can be – are in principle predictable and interrogable, these ones are not. After a time in the wild, we no longer know what they are: they have the potential to become erratic. We might be tempted to call these “frankenalgos” – though Mary Shelley couldn’t have made this up.
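The point that a learning algorithm's rules are no longer fixed in advance can be illustrated with a minimal online learner, here the least-mean-squares update, chosen only as a simple example. The code is identical in both runs below and fully deterministic; only the data stream differs, yet the learned parameter, and therefore the program's answer for the same input, ends up different. The streams, learning rate and names are made-up assumptions.

```python
def lms_train(stream, lr=0.05, w=0.0):
    """Online least-mean-squares: after each (x, y) pair, nudge w so that
    w * x moves closer to y."""
    for x, y in stream:
        error = y - w * x
        w += lr * error * x
    return w


# Two hypothetical environments that generate data from different relationships.
STREAM_A = [(x, 2.0 * x) for x in (1.0, 0.5, -1.0, 2.0)] * 50   # y = 2x
STREAM_B = [(x, -1.0 * x) for x in (1.0, 0.5, -1.0, 2.0)] * 50  # y = -x

if __name__ == "__main__":
    w_a = lms_train(STREAM_A)
    w_b = lms_train(STREAM_B)
    # Same code, same query input, different behaviour after "time in the wild".
    print(round(w_a * 3.0, 3), round(w_b * 3.0, 3))  # 6.0 -3.0
```

Inspecting the source code alone tells you nothing about which of these two behaviours a deployed copy has; you would also need its full data history.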

Twenty years ago, George Dyson anticipated much of what is happening today in his classic book Darwin Among the Machines. The problem, he tells me, is that we’re building systems that are beyond our intellectual means to control. We believe that if a system is deterministic (acting according to fixed rules, this being the definition of an algorithm) it is predictable – and that what is predictable can be controlled. Both assumptions turn out to be wrong. “It’s proceeding on its own, in little bits and pieces,” he says. “What I was obsessed with 20 years ago that has completely taken over the world today are multicellular, metazoan digital organisms, the same way we see in biology, where you have all these pieces of code running on people’s iPhones, and collectively it acts like one multicellular organism.

“There’s this old law called Ashby’s law that says a control system has to be as complex as the system it’s controlling, and we’re running into that at full speed now, with this huge push to build self-driving cars where the software has to have a complete model of everything, and almost by definition we’re not going to understand it. Because any model that we understand is gonna do the thing like run into a fire truck ’cause we forgot to put in the fire truck.”
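Ashby's law (the law of requisite variety) has a compact counting form: if a regulator can choose among R responses and faces D distinct disturbances, and each response maps different disturbances to different outcomes, then the best it can do is hold the outcomes to at least D/R distinct values. The brute-force sketch below checks this on a small made-up game in which the outcome is `(disturbance + response) mod 6`; the game and the function name are illustrative assumptions.

```python
from itertools import product


def fewest_outcomes(num_disturbances: int, num_responses: int) -> int:
    """Try every regulator policy (one response chosen per disturbance) and
    return the smallest number of distinct outcomes any policy achieves.

    Outcome table: (disturbance + response) mod num_disturbances, so each
    response maps different disturbances to different outcomes.
    """
    best = num_disturbances
    for policy in product(range(num_responses), repeat=num_disturbances):
        outcomes = {(d + r) % num_disturbances for d, r in enumerate(policy)}
        best = min(best, len(outcomes))
    return best


if __name__ == "__main__":
    for responses in (1, 2, 3, 6):
        # Ashby's bound: at least ceil(6 / responses) distinct outcomes remain.
        print(responses, fewest_outcomes(6, responses))
```

Only a regulator with as many responses as there are disturbances can pin the outcome to a single value, which is the sense in which "a control system has to be as complex as the system it's controlling."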

AI professor Toby Walsh believes this makes it more, not less, important that the public learn about programming, because the more alienated we become from it, the more it seems like magic beyond our ability to affect. When shown the definition of “algorithm” given earlier in this piece, he found it incomplete, commenting: “I would suggest the problem is that algorithm now means any large, complex decision making software system and the larger environment in which it is embedded, which makes them even more unpredictable.” A chilling thought indeed. Accordingly, he believes ethics to be the new frontier in tech, foreseeing “a golden age for philosophy” – a view with which Eugene Spafford of Purdue University, a cybersecurity expert, concurs. Where there are choices to be made, that’s where ethics comes in.

Our existing system of tort law, which requires proof of intention or negligence, will need to be rethought. A dog is not held legally responsible for biting you; its owner might be, but only if the dog’s action is thought foreseeable.

One proposed way forward is model-based programming, in which machines do most of the coding work and are able to test as they go.

As we wait for a technological answer to the problem of soaring algorithmic entanglement, there are precautions we can take. Paul Wilmott, a British expert in quantitative analysis and vocal critic of high frequency trading on the stock market, wryly suggests “learning to shoot, make jam and knit”.

The venerable Association for Computing Machinery has updated its code of ethics along the lines of medicine’s Hippocratic oath, to instruct computing professionals to do no harm and consider the wider impacts of their work.

+++++++++++

more on coding in this IMS blog

https://blog.stcloudstate.edu/ims?s=coding

Google AI Voice Kit

Google AI Voice Kit: Big Brother in a Box (Big Brother im Karton)

https://www.elektormagazine.de/news/google-ai-voice-kit-big-brother-im-karton

++++++++++++

more on Artificial Intelligence in this IMS blog

https://blog.stcloudstate.edu/ims?s=artifical+intelligence

Geoffrey Hinton AR

https://torontolife.com/tech/ai-superstars-google-facebook-apple-studied-guy/