Searching for "tech companies"

Google, Meta, and others will have to explain their algorithms under new EU legislation

The Digital Services Act will reshape the online world

https://www.theverge.com/2022/4/23/23036976/eu-digital-services-act-finalized-algorithms-targeted-advertising

The EU has agreed on another ambitious piece of legislation to police the online world.

- Targeted advertising based on an individual’s religion, sexual orientation, or ethnicity is banned. Minors cannot be subject to targeted advertising either.

- “Dark patterns” — confusing or deceptive user interfaces designed to steer users into making certain choices — will be prohibited. The EU says that, as a rule, canceling subscriptions should be as easy as signing up for them.

- Large online platforms like Facebook will have to make the working of their recommender algorithms (used for sorting content on the News Feed or suggesting TV shows on Netflix) transparent to users. Users should also be offered a recommender system “not based on profiling.” In the case of Instagram, for example, this would mean a chronological feed (as it introduced recently).

- Hosting services and online platforms will have to explain clearly why they have removed illegal content as well as give users the ability to appeal such takedowns. The DSA itself does not define what content is illegal, though, and leaves this up to individual countries.

- The largest online platforms will have to provide key data to researchers to “provide more insight into how online risks evolve.”

- Online marketplaces must keep basic information about traders on their platform to track down individuals selling illegal goods or services.

- Large platforms will also have to introduce new strategies for dealing with misinformation during crises (a provision inspired by the recent invasion of Ukraine).

These tech companies have lobbied hard to water down the requirements in the DSA, particularly those concerning targeted advertising and handing over data to outside researchers.

HaptX raises another $12M for high-tech gloves, relocates HQ back to Seattle

https://www.geekwire.com/2021/haptx-raises-another-12m-high-tech-gloves-relocates-hq-back-seattle/

Founded in 2012 and previously known as AxonVR, the company’s tech promises to deliver realistic touch feedback to users reaching out for objects in VR, thanks to microfluidics in the glove system that physically and precisely displace the skin on a user’s hands and fingers.

Virtual and augmented reality have not yet reached mainstream consumers as some predicted but enterprise-focused startups such as HaptX have found traction. Large tech companies such as Facebook and Apple also continue investing in the technology, and investors keep making bets.

++++++++++++++++++++

more on HaptX in this IMS blog

https://blog.stcloudstate.edu/ims?s=haptx

https://www.npr.org/2021/02/23/970300911/block-party-aims-to-be-a-spam-folder-for-social-media-harassment

Chou is a Stanford-educated software engineer who worked at tech companies including Pinterest and Quora before setting out on her own. Along the way, she’s become a leading advocate for diversity, pushing Silicon Valley to open up about how few women work in tech — a field long dominated by men.

Block Party is also designed to help deal with the worst abuse, by letting people collect evidence of threats or stalking, even if they are deleted by the users who post them or by the platforms. That documentation is critical for filing police reports, getting restraining orders and pursuing legal cases.

https://www.vice.com/en/article/n7wxvd/students-are-rebelling-against-eye-tracking-exam-surveillance-tools

Algorithmic proctoring software has been around for several years, but its use exploded as the COVID-19 pandemic forced schools to quickly transition to remote learning. Proctoring companies cite studies estimating that between 50 and 70 percent of college students will attempt some form of cheating, and warn that cheating will be rampant if students are left unmonitored in their own homes.

Like many other tech companies, they also balk at the suggestion that they are responsible for how their software is used. While their algorithms flag behavior that the designers have deemed suspicious, these companies argue that the ultimate determination of whether cheating occurred rests in the hands of the class instructor.

As more evidence emerges about how the programs work, and fail to work, critics say the tools are bound to hurt low-income students, students with disabilities, students with children or other dependents, and other groups who already face barriers in higher education.

“Each academic department has almost complete agency to design their curriculum as far as I know, and each professor has the freedom to design their own exams and use whatever monitoring they see fit,” Rohan Singh, a computer engineering student at Michigan State University, told Motherboard.

After students approached faculty members at the University of California Santa Barbara, the faculty association sent a letter to the school’s administration raising concerns about whether ProctorU would share student data with third parties.

In response, a ProctorU attorney threatened to sue the faculty association for defamation and violating copyright law (because the association had used the company’s name and linked to its website). He also accused the faculty association of “directly impacting efforts to mitigate civil disruption across the United States” by interfering with education during a national emergency, and said he was sending his complaint to the state’s Attorney General.

here is a link to a community discussion regarding this and similar software use:

https://www.facebook.com/groups/RemakingtheUniversity/permalink/1430416163818409/

+++++++++++++

more on Proctorio in this IMS blog

https://blog.stcloudstate.edu/ims?s=proctorio


Alternative Credentials on the Rise

Interest is growing in short-term, online credentials amid the pandemic. Will they become viable alternative pathways to well-paying jobs?

Paul Fain August 27, 2020

https://www.insidehighered.com/news/2020/08/27/interest-spikes-short-term-online-credentials-will-it-be-sustained

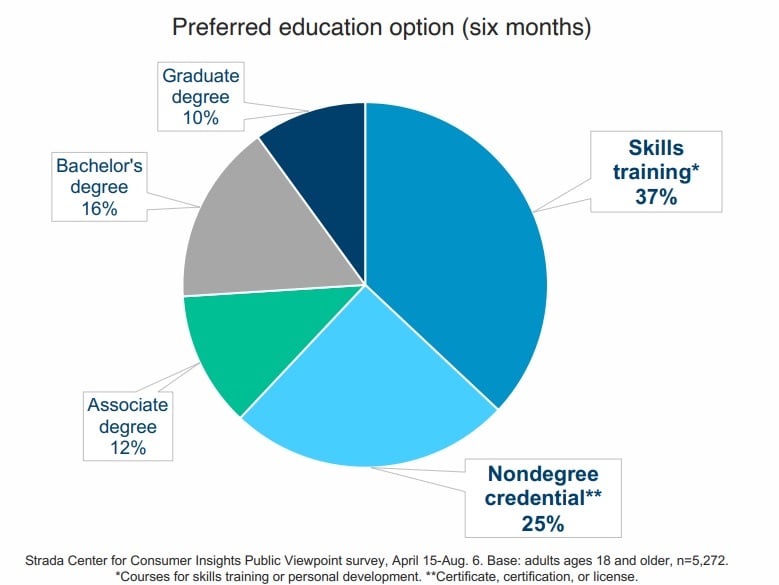

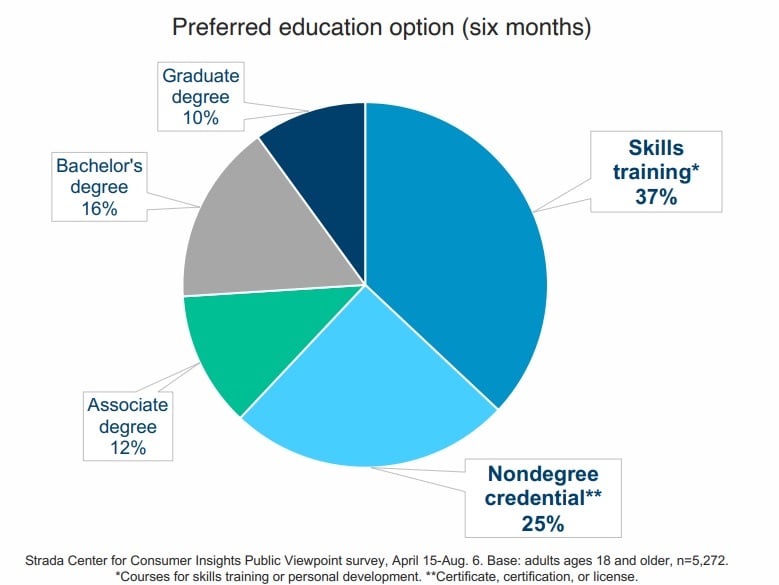

A growing body of evidence has found strong consumer interest in recent months in skills-based, online credentials that are clearly tied to careers, particularly among adult learners from diverse and lower-income backgrounds, whom four-year colleges often have struggled to attract and graduate.

For years the demographics of higher education have been shifting away from traditional-age, full-paying college students while online education has become more sophisticated and accepted.

That has amplified interest in recent months among employers, students, workers and policy makers in online certificates, industry certifications, apprenticeships, microcredentials, boot camps and even lower-cost online master’s degrees.

Moody’s, the credit ratings firm, on Wednesday said online and nondegree programs are growing at a rapid pace.

Google will fund 100,000 need-based scholarships for the certificates, and said it will consider them the “equivalent of a four-year degree” for related roles.

Google isn’t alone in this push. IBM, Facebook, Salesforce and Microsoft are creating their own short-term, skills-based credentials. Several tech companies also are dropping degree requirements for some jobs, as is the federal government, while the White House, employers and some higher education groups have collaborated on an Ad Council campaign to tout alternatives to the college degree.

One of the most consistent findings in a nationally representative poll conducted by the Strada Education Network’s Center for Consumer Insights over the last five months has been a preference for nondegree and skills training options.

Despite growing skepticism about the value of a college degree, it remains the best ticket to a well-paying job and career. And data have shown that college degrees have been a cushion amid the pandemic and recession.

Experts had long speculated that employer interest in alternative credential pathways would wither when low unemployment rates went away … Yet some big employers, including Amazon, are paying to retrain workers for jobs outside the company as it restructures.

++++++++++++

more on badges, microcredentialing in this IMS blog

https://blog.stcloudstate.edu/ims?s=microcredential

New anti-encryption bill worse than EARN IT. Act now to stop both. from r/technology

https://tutanota.com/blog/posts/lawful-access-encrypted-data-act-backdoor/

Once a surveillance law such as an encryption backdoor for the “good guys” is available, it’s just a matter of time until the “good guys” turn bad or abuse their power.

By stressing the fact that tech companies must decrypt sensitive information only after a court issues a warrant, the three Senators believe they can swing public opinion in favor of this encryption backdoor law.

Our Bodies Encoded: Algorithmic Test Proctoring in Higher Education

https://hybridpedagogy.org/our-bodies-encoded-algorithmic-test-proctoring-in-higher-education/

While in-person test proctoring has been used to combat test-based cheating, this can be difficult to translate to online courses. Ed-tech companies have sought to address this concern by offering to watch students take online tests, in real time, through their webcams.

Some of the more prominent companies offering these services include Proctorio, Respondus, ProctorU, HonorLock, Kryterion Global Testing Solutions, and Examity.

Algorithmic test proctoring’s settings have discriminatory consequences across multiple identities and serious privacy implications.

While racist technology calibrated for white skin isn’t new (everything from photography to soap dispensers do this), we see it deployed through face detection and facial recognition used by algorithmic proctoring systems.

While some test proctoring companies develop their own facial recognition software, most purchase software developed by other companies, but these technologies generally function similarly and have shown a consistent inability to identify people with darker skin or even tell the difference between Chinese people. Facial recognition literally encodes the invisibility of Black people and the racist stereotype that all Asian people look the same.

As Os Keyes has demonstrated, facial recognition has a terrible history with gender. This means that a software asking students to verify their identity is compromising for students who identify as trans, non-binary, or express their gender in ways counter to cis/heteronormativity.

These features and settings create a system of asymmetric surveillance and lack of accountability, things which have always created a risk for abuse and sexual harassment. Technologies like these have a long history of being abused, largely by heterosexual men at the expense of women’s bodies, privacy, and dignity.

Their promotional messaging functions similarly to dog whistle politics, which is commonly used in anti-immigration rhetoric. It’s also not a coincidence that these technologies are being used to exclude people not wanted by an institution; biometrics and facial recognition have been connected to anti-immigration policies, supported by both Republican and Democratic administrations, going back to the 1990s.

Borrowing from Henry A. Giroux, Kevin Seeber describes the pedagogy of punishment and some of its consequences in regards to higher education’s approach to plagiarism in his book chapter “The Failed Pedagogy of Punishment: Moving Discussions of Plagiarism beyond Detection and Discipline.”

my note: I have been repeating this for years

Sean Michael Morris and Jesse Stommel’s ongoing critique of Turnitin, a plagiarism detection software, outlines exactly how this logic operates in ed-tech and higher education: 1) don’t trust students, 2) surveil them, 3) ignore the complexity of writing and citation, and 4) monetize the data.

Technological Solutionism

Cheating is not a technological problem, but a social and pedagogical problem.

Our habit of believing that technology will solve pedagogical problems is endemic to narratives produced by the ed-tech community and, as Audrey Watters writes, is tied to the Silicon Valley culture that often funds it. Scholars have been dismantling the narrative of technological solutionism and neutrality for some time now. In her book “Algorithms of Oppression,” Safiya Umoja Noble demonstrates how the algorithms that are responsible for Google Search amplify and “reinforce oppressive social relationships and enact new modes of racial profiling.”

Anna Lauren Hoffmann coined the term “data violence” to describe the impact harmful technological systems have on people and how these systems retain the appearance of objectivity despite the disproportionate harm they inflict on marginalized communities.

This system of measuring bodies and behaviors, associating certain bodies and behaviors with desirability and others with inferiority, engages in what Lennard J. Davis calls the Eugenic Gaze.

Higher education is deeply complicit in the eugenics movement. Nazism borrowed many of its ideas about racial purity from the American school of eugenics, and universities were instrumental in supporting eugenics research by publishing copious literature on it, establishing endowed professorships, institutes, and scholarly societies that spearheaded eugenic research and propaganda.

+++++++++++++++++

more on privacy in this IMS blog

https://blog.stcloudstate.edu/ims?s=privacy

The Secretive Company That Might End Privacy as We Know It: It’s taken 3 billion images from the internet to build an AI-driven database that allows US law enforcement agencies to identify any stranger. from r/Futurology

Until now, technology that readily identifies everyone based on his or her face has been taboo because of its radical erosion of privacy. Tech companies capable of releasing such a tool have refrained from doing so; in 2011, Google’s chairman at the time said it was the one technology the company had held back because it could be used “in a very bad way.” Some large cities, including San Francisco, have barred police from using facial recognition technology.

But without public scrutiny, more than 600 law enforcement agencies have started using Clearview in the past year, according to the company, which declined to provide a list.

Facial recognition technology has always been controversial. It makes people nervous about Big Brother. It has a tendency to deliver false matches for certain groups, like people of color. And some facial recognition products used by the police — including Clearview’s — haven’t been vetted by independent experts.

Clearview deployed current and former Republican officials to approach police forces, offering free trials and annual licenses for as little as $2,000. Mr. Schwartz tapped his political connections to help make government officials aware of the tool, according to Mr. Ton-That.

“We have no data to suggest this tool is accurate,” said Clare Garvie, a researcher at Georgetown University’s Center on Privacy and Technology, who has studied the government’s use of facial recognition. “The larger the database, the larger the risk of misidentification because of the doppelgänger effect. They’re talking about a massive database of random people they’ve found on the internet.”
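Garvie’s point about database size can be illustrated with a small calculation. The sketch below is not taken from the article and says nothing about Clearview’s actual accuracy: it assumes every comparison against a stored face has the same small, independent chance of a false match (the `false_match_rate` value is purely hypothetical), and shows how the chance of at least one wrong match grows as the database grows.

```python
import math


def chance_of_false_match(database_size: int, false_match_rate: float) -> float:
    """Probability of at least one false match in a one-to-many search.

    Simplifying assumption (not from the article): each of the
    `database_size` comparisons is independent and has the same
    probability `false_match_rate` of wrongly matching.
    """
    if database_size < 0:
        raise ValueError("database_size must be non-negative")
    if not 0.0 <= false_match_rate < 1.0:
        raise ValueError("false_match_rate must be in [0, 1)")
    # 1 - (1 - p)^N, computed with log1p/expm1 so tiny rates keep precision.
    return -math.expm1(database_size * math.log1p(-false_match_rate))


if __name__ == "__main__":
    hypothetical_rate = 1e-6  # illustrative only, not a measured figure
    for size in (1_000, 1_000_000, 3_000_000_000):
        chance = chance_of_false_match(size, hypothetical_rate)
        print(f"{size:>13,} faces: {chance:.4f}")
```

Under these assumptions the probability only rises with database size, which is the effect the quote describes; real systems have error rates that vary by image quality and demographic group, so this is a rough intuition aid rather than a model of any product.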

Law enforcement is using a facial recognition app with huge privacy issues: Clearview AI’s software can find matches in billions of internet images. from r/technology

Part of the problem stems from a lack of oversight. There has been no real public input into adoption of Clearview’s software, and the company’s ability to safeguard data hasn’t been tested in practice. Clearview itself remained highly secretive until late 2019.

The software also appears to explicitly violate policies at Facebook and elsewhere against collecting users’ images en masse.

While there’s underlying code that could theoretically be used for augmented reality glasses that could identify people on the street, Ton-That said there were no plans for such a design.

Banning Facial Recognition Isn’t Enough from r/technology

In May of last year, San Francisco banned facial recognition; the neighboring city of Oakland soon followed, as did Somerville and Brookline in Massachusetts (a statewide ban may follow). In December, San Diego suspended a facial recognition program in advance of a new statewide law, which declared it illegal, coming into effect. Forty major music festivals pledged not to use the technology, and activists are calling for a nationwide ban. Many Democratic presidential candidates support at least a partial ban on the technology.

Facial recognition bans are the wrong way to fight against modern surveillance. Focusing on one particular identification method misconstrues the nature of the surveillance society we’re in the process of building. Ubiquitous mass surveillance is increasingly the norm. In countries like China, a surveillance infrastructure is being built by the government for social control. In countries like the United States, it’s being built by corporations in order to influence our buying behavior, and is incidentally used by the government.

People can be identified at a distance by their heart beat or by their gait, using a laser-based system. Cameras are so good that they can read fingerprints and iris patterns from meters away. And even without any of these technologies, we can always be identified because our smartphones broadcast unique numbers called MAC addresses.

China, for example, uses multiple identification technologies to support its surveillance state.

There is a huge — and almost entirely unregulated — data broker industry in the United States that trades on our information.

This is why many companies buy license plate data from states. It’s also why companies like Google are buying health records, and part of the reason Google bought the company Fitbit, along with all of its data.

The data broker industry is almost entirely unregulated; there’s only one law — passed in Vermont in 2018 — that requires data brokers to register and explain in broad terms what kind of data they collect.

The Secretive Company That Might End Privacy as We Know It from r/technews

Until now, technology that readily identifies everyone based on his or her face has been taboo because of its radical erosion of privacy. Tech companies capable of releasing such a tool have refrained from doing so; in 2011, Google’s chairman at the time said it was the one technology the company had held back because it could be used “in a very bad way.” Some large cities, including San Francisco, have barred police from using facial recognition technology.

+++++++++++++

more on social credit system in this IMS blog

https://blog.stcloudstate.edu/ims?s=social+credit

https://www.edsurge.com/news/2019-10-22-high-tech-surveillance-comes-at-high-cost-to-students-is-it-worth-it

The phrase “school-to-prison pipeline” has long been used to describe how schools respond to disciplinary problems with excessively stringent policies that create prison-like environments and funnel children who don’t fall in line into the criminal justice system. Now, schools are investing in surveillance systems that will likely exacerbate existing disparities.

A number of tech companies are capitalizing on the growing market for student surveillance measures as various districts and school leaders commit themselves to preventing acts of violence. Rekor Systems, for instance, recently announced the launch of OnGuard, a program that claims to “advance student safety” by implementing countless surveillance and “threat assessment” mechanisms in and around schools.

While none of these methods have been proven to be effective in deterring violence, similar systems have resulted in diverting resources away from enrichment opportunities, policing school communities to a point where students feel afraid to express themselves, and placing especially dangerous targets on students of color who are already disproportionately mislabeled and punished.

++++++++++++++++

more on surveillance in this IMS blog

https://blog.stcloudstate.edu/ims?s=surveillance

4 Ways AI Education and Ethics Will Disrupt Society in 2019

https://www.edsurge.com/news/2019-01-28-4-ways-ai-education-and-ethics-will-disrupt-society-in-2019

In 2018 we witnessed a clash of titans as government and tech companies collided on privacy issues around collecting, culling and using personal data. From GDPR to Facebook scandals, many tech CEOs were defending big data, its use, and how they’re safeguarding the public.

Meanwhile, the public was amazed at technological advances like Boston Dynamics’ Atlas robot doing parkour, while simultaneously being outraged at the thought of our data no longer being ours and Alexa listening in on all our conversations.

1. Companies will face increased pressure about the data AI-embedded services use.

2. Public concern will lead to AI regulations. But we must understand this tech too.

In 2018, the National Science Foundation invested $100 million in AI research, with special support in 2019 for developing principles for safe, robust and trustworthy AI; addressing issues of bias, fairness and transparency of algorithmic intelligence; developing deeper understanding of human-AI interaction and user education; and developing insights about the influences of AI on people and society.

This investment was dwarfed by DARPA (an agency of the Department of Defense) and its multi-year investment of more than $2 billion in new and existing programs under the “AI Next” campaign. A key area of the campaign includes pioneering the next generation of AI algorithms and applications, such as “explainability” and common sense reasoning.

Federally funded initiatives, as well as corporate efforts (such as Google’s “What If” tool), will lead to the rise of explainable AI and interpretable AI, whereby the AI actually explains the logic behind its decision making to humans. But the next step from there would be for the AI regulators and policymakers themselves to learn about how these technologies actually work. This is an overlooked step right now that Richard Danzig, former Secretary of the U.S. Navy, advises us to consider as we create “humans-in-the-loop” systems, which require people to sign off on important AI decisions.

3. More companies will make AI a strategic initiative in corporate social responsibility.

Google invested $25 million in AI for Good and Microsoft added an AI for Humanitarian Action to its prior commitment. While these are positive steps, the tech industry continues to have a diversity problem.

4. Funding for AI literacy and public education will skyrocket.

Ryan Calo from the University of Washington explains that it matters how we talk about technologies that we don’t fully understand.