It’s time to accept that disinformation is a cyber security issue

https://www.computerweekly.com/opinion/Its-time-to-accept-that-disinformation-is-a-cyber-security-issue

Misinformation and disinformation are rife, but so far they have been seen as a challenge for policy-makers and big tech, including social media platforms.

The sheer volume of data being created makes it hard to tell what’s real and what’s not. From destroying 5G towers to conspiracies like QAnon and unfounded concern about election fraud, distrust is becoming the default – and this can have incredibly damaging effects on society.

So far, the tech sector – primarily social media companies, given that their platforms enable fake news to spread exponentially – has tried to implement some measures, with varying levels of success. For example, WhatsApp has placed a stricter limit on its message-forwarding capability and Twitter has begun to flag misleading posts.

the rise of tech startups that are exploring ways to detect and stem the flow of disinformation, such as Right of Reply, Astroscreen and Logically.

disinformation has the potential to undermine national security

Data breaches result in the loss of value, but so can data manipulation

This Will Change Your Life

Why the grandiose promises of multilevel marketing and QAnon conspiracy theories go hand in hand

https://www.theatlantic.com/technology/archive/2020/10/why-multilevel-marketing-and-qanon-go-hand-hand/616885/

The Concordia University researcher Marc-André Argentino has a name for people like Schrandt: “Pastel QAnon.” These women—they are almost universally women—are doing the work of sanitizing QAnon, often pairing its least objectionable elements (Save the children!) with equally inoffensive imagery: Millennial-pink-and-gold color schemes, a winning smile. And many of them are members of multilevel-marketing organizations—a massive, under-examined sector of the American retail economy that is uniquely fertile ground for conspiracism. These are organizations built on foundational myths (that the establishment is keeping secrets from you, that you are on a hero’s journey to enlightenment and wealth), charismatic leadership, and shameless, constant posting. The people at the top of them are enviable, rich, and gifted at wrapping everything that happens—in their personal lives, or in the world around them—into a grand narrative about how to become as happy as they are. In 2020, what’s happening to them is dark and dangerous, but it looks gorgeous.

+++++++++++++++

more on QAnon in this IMS blog

https://blog.stcloudstate.edu/ims?s=qanon

The Smoking Gun in the Facebook Antitrust Case

The government wants to break up the world’s biggest social network. Internal company emails show why.

https://www.wired.com/story/facebook-ftc-antitrust-case-smoking-gun/

At first blush, privacy and antitrust might seem like separate issues—two different chapters in a textbook about big tech. But the decline in Facebook’s privacy protections plays a central role in the states’ case. Antitrust is a complicated field built on a simple premise: When a company doesn’t face real competition, it will be free to do bad things.

a conceptual breakthrough on that front. In a paper titled “The Antitrust Case Against Facebook,” the legal scholar Dina Srinivasan argued that Facebook’s takeover of the social networking market has inflicted a very specific harm on consumers: It has forced them to accept ever worse privacy settings. Facebook, Srinivasan pointed out, began its existence in 2004 by differentiating itself on privacy. Unlike then-dominant MySpace, for example, where profiles were visible to anyone by default, Facebook profiles could be seen only by your friends or people at the same school.

+++++++++++++++++

Facebook hit with antitrust probe for tying Oculus use to Facebook accounts

https://techcrunch.com/2020/12/10/facebook-hit-with-antitrust-probe-for-tying-oculus-use-to-facebook-accounts

In recent years Facebook has been pushing to add a ‘social layer’ to the VR platform — but the heavy-handed requirement for Oculus users to have a Facebook account has not proved popular with gamers.

+++++++++++++++++

more on Facebook in this IMS blog

https://blog.stcloudstate.edu/ims?s=facebook

Chris Hughes

https://blog.stcloudstate.edu/ims/2019/05/09/break-up-facebook/

Information Overload Helps Fake News Spread, and Social Media Knows It

Understanding how algorithm manipulators exploit our cognitive vulnerabilities empowers us to fight back

https://www.scientificamerican.com/article/information-overload-helps-fake-news-spread-and-social-media-knows-it/

a minefield of cognitive biases.

People who behaved in accordance with them—for example, by staying away from the overgrown pond bank where someone said there was a viper—were more likely to survive than those who did not.

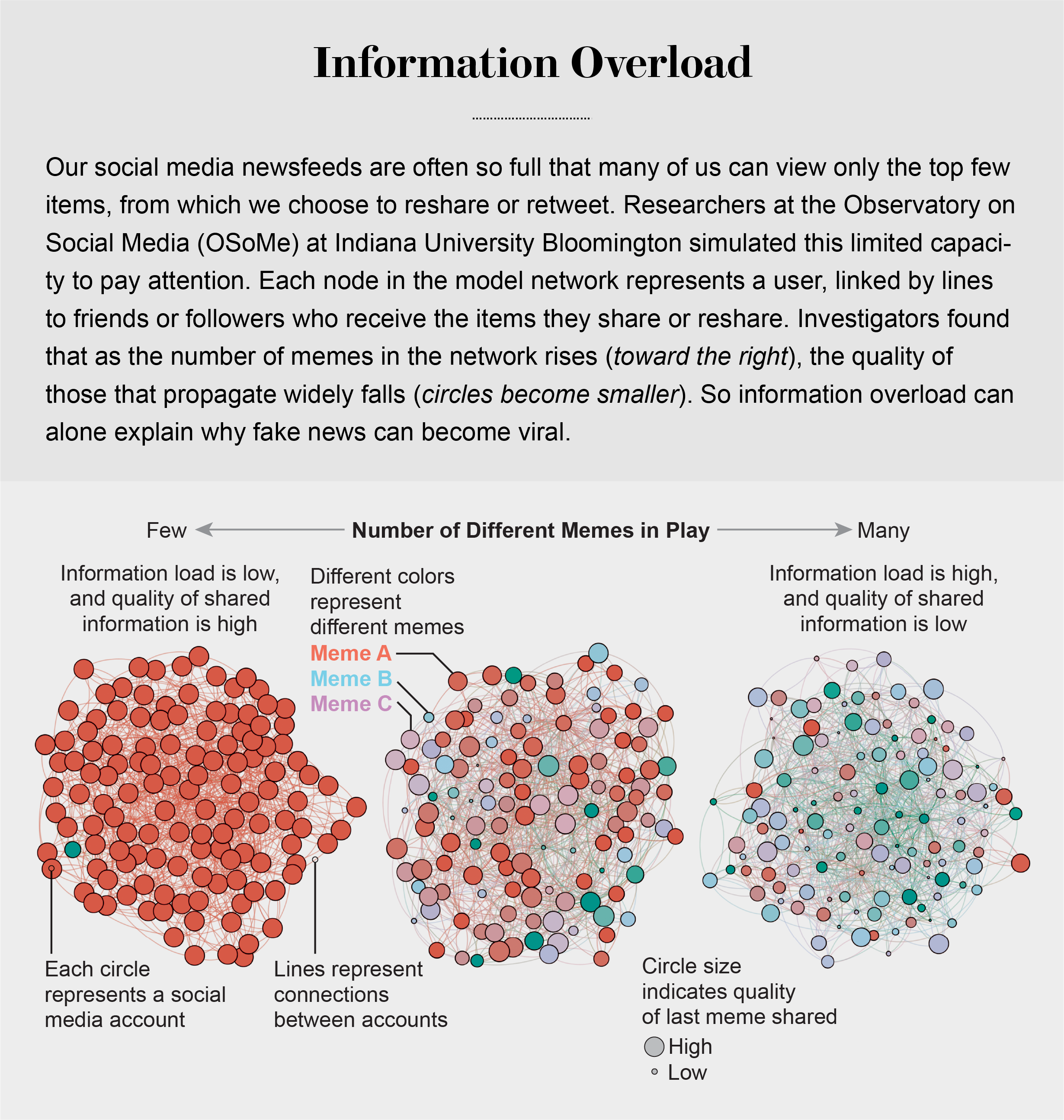

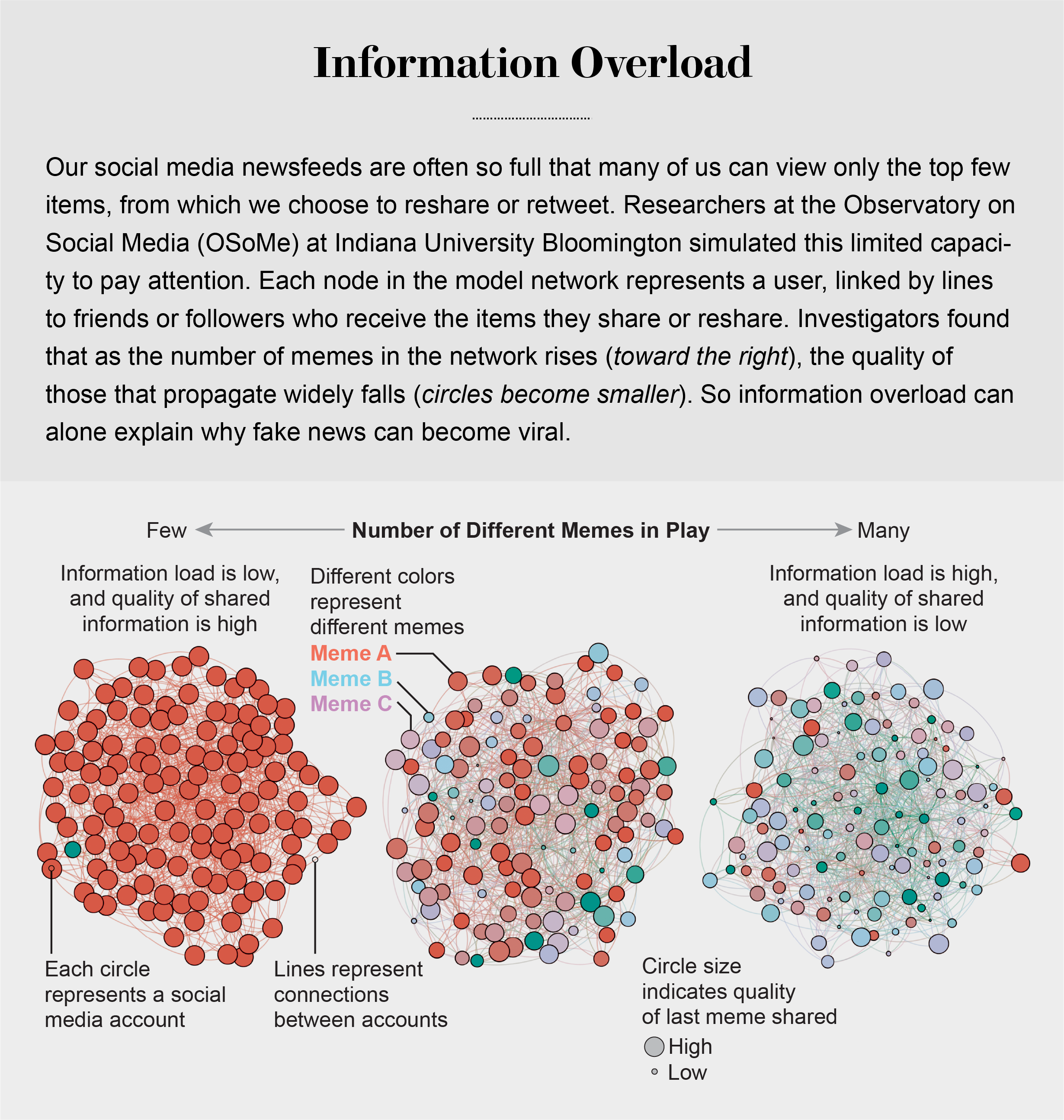

Compounding the problem is the proliferation of online information. Viewing and producing blogs, videos, tweets and other units of information called memes has become so cheap and easy that the information marketplace is inundated. My note: folksonomy at its worst.

At the University of Warwick in England and at Indiana University Bloomington’s Observatory on Social Media (OSoMe, pronounced “awesome”), our teams are using cognitive experiments, simulations, data mining and artificial intelligence to comprehend the cognitive vulnerabilities of social media users.

developing analytical and machine-learning aids to fight social media manipulation.

As Nobel Prize–winning economist and psychologist Herbert A. Simon noted, “What information consumes is rather obvious: it consumes the attention of its recipients.”

attention economy

Our models revealed that even when we want to see and share high-quality information, our inability to view everything in our news feeds inevitably leads us to share things that are partly or completely untrue.
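The limited-attention effect can be illustrated with a toy example. This is only a sketch, not the researchers' model: the `Item` class, the `quality` scores and the `share_best_seen` helper are all hypothetical. It assumes a well-meaning user who always shares the best item they actually see, but who only views the top of the feed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    quality: float  # hypothetical score in [0, 1]; higher means more accurate


def share_best_seen(feed: list[Item], attention: int) -> Item:
    """Return the highest-quality item among the first `attention` feed entries."""
    if attention < 1 or not feed:
        raise ValueError("need a non-empty feed and attention >= 1")
    seen = feed[:attention]  # everything past this point is never viewed
    return max(seen, key=lambda item: item.quality)


# Made-up feed, ordered as the platform presents it.
feed = [
    Item("viral rumor", 0.2),
    Item("misleading chart", 0.4),
    Item("careful report", 0.9),
]

limited = share_best_seen(feed, attention=2)
unlimited = share_best_seen(feed, attention=len(feed))
print(limited.title, "|", unlimited.title)
```

Even with the best intentions, the user with an attention span of two items shares the misleading chart, because the careful report never reaches their eyes.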

Frederic Bartlett

Cognitive biases greatly worsen the problem.

We now know that our minds do this all the time: they adjust our understanding of new information so that it fits in with what we already know. One consequence of this so-called confirmation bias is that people often seek out, recall and understand information that best confirms what they already believe.

This tendency is extremely difficult to correct.

Making matters worse, search engines and social media platforms provide personalized recommendations based on the vast amounts of data they have about users’ past preferences.

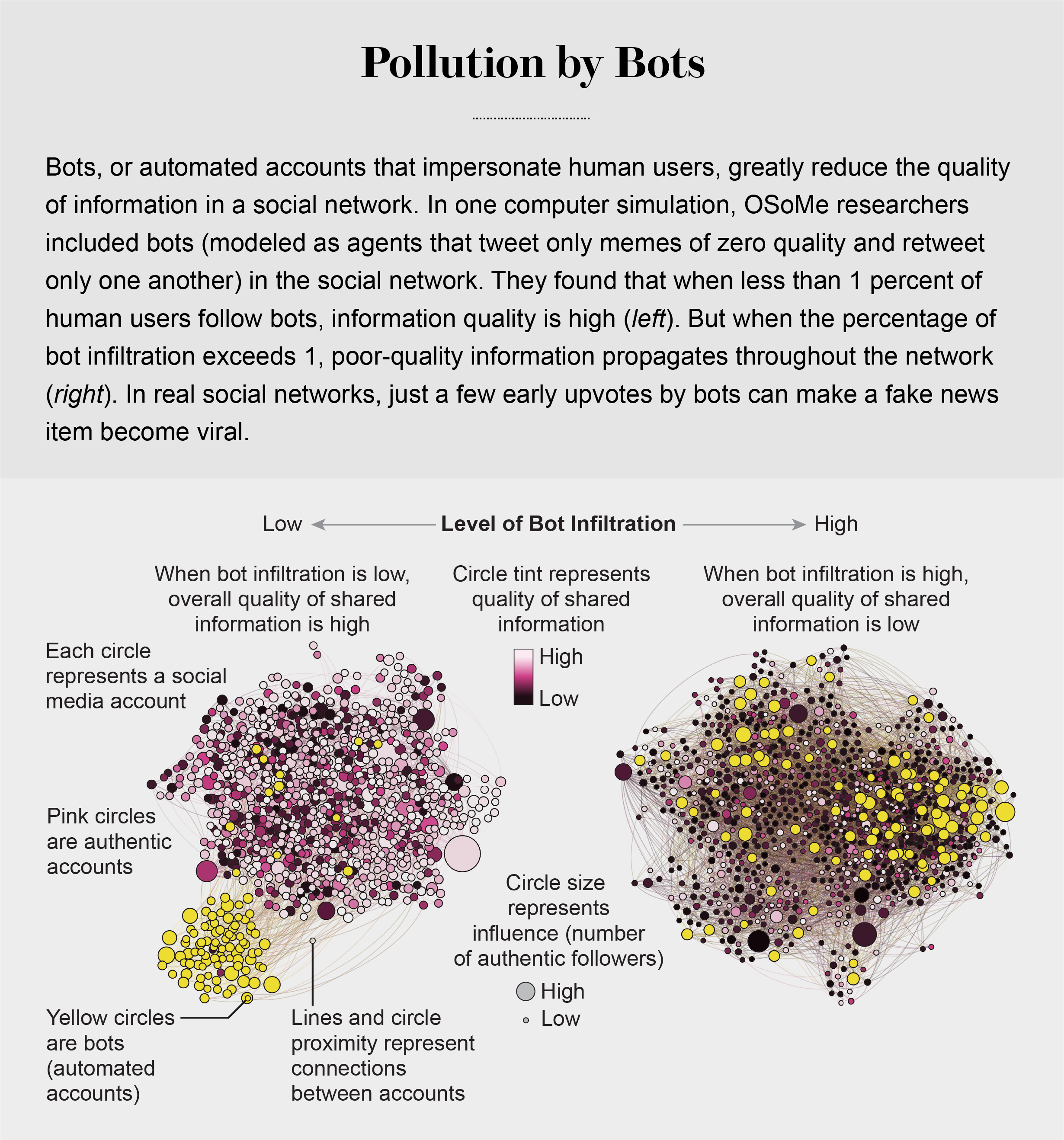

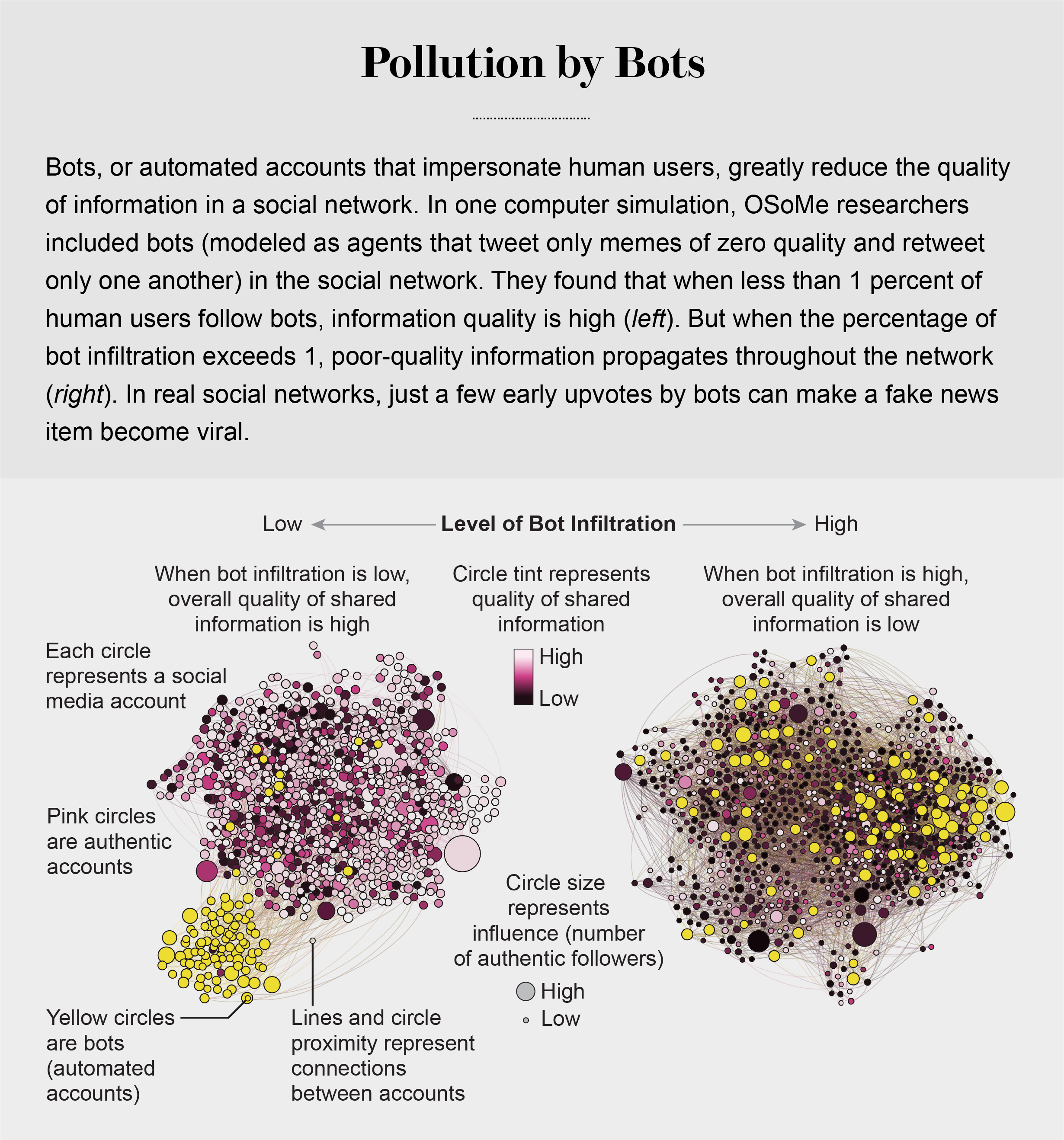

pollution by bots

Social Herding

social groups create a pressure toward conformity so powerful that it can overcome individual preferences, and by amplifying random early differences, it can cause segregated groups to diverge to extremes.

Social media follows a similar dynamic. We confuse popularity with quality and end up copying the behavior we observe.

information is transmitted via “complex contagion”: when we are repeatedly exposed to an idea, typically from many sources, we are more likely to adopt and reshare it.
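One common way to formalize "complex contagion" is a threshold model: an account adopts an idea only after enough of its contacts already have. The sketch below assumes that reading; the `spread` function, the `threshold` parameter and the five-account network are illustrative inventions, not something taken from the article.

```python
def spread(neighbors, seeds, threshold):
    """Run a threshold contagion until no further account adopts the idea.

    neighbors: dict mapping each account to the accounts it listens to.
    seeds:     accounts that hold the idea at the start.
    threshold: number of adopting contacts needed before an account adopts.
    """
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, contacts in neighbors.items():
            if node in adopted:
                continue
            exposures = sum(1 for c in contacts if c in adopted)
            if exposures >= threshold:
                adopted.add(node)
                changed = True
    return adopted


# Hypothetical network: a, b, c, d are tightly linked; e hangs off d.
graph = {
    "a": ["b", "c"],
    "b": ["a", "c", "d"],
    "c": ["a", "b", "d"],
    "d": ["b", "c", "e"],
    "e": ["d"],
}

print(sorted(spread(graph, {"a"}, threshold=1)))       # simple contagion
print(sorted(spread(graph, {"a"}, threshold=2)))       # one source is not enough
print(sorted(spread(graph, {"a", "b"}, threshold=2)))  # two sources reinforce each other
```

With a threshold of one, a single seed reaches everyone. With a threshold of two, the same seed goes nowhere, while two seeds spread through the tightly linked cluster and stop at the account that has only one contact.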

In addition to showing us items that conform with our views, social media platforms such as Facebook, Twitter, YouTube and Instagram place popular content at the top of our screens and show us how many people have liked and shared something. Few of us realize that these cues do not provide independent assessments of quality.

programmers who design the algorithms for ranking memes on social media assume that the “wisdom of crowds” will quickly identify high-quality items; they use popularity as a proxy for quality. My note: again, ill-conceived folksonomy.
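A small sketch of what "popularity as a proxy for quality" means in practice. The posts, their `shares` counts and their `quality` scores are invented for illustration, and real ranking algorithms are far more involved; the point is only that sorting by one signal can invert the order given by the other.

```python
# Made-up posts: "quality" is a hypothetical accuracy score, "shares" a share count.
posts = [
    {"title": "fact-checked explainer", "quality": 0.9, "shares": 120},
    {"title": "outrage bait", "quality": 0.2, "shares": 4500},
    {"title": "local report", "quality": 0.7, "shares": 300},
]


def rank(posts, key):
    """Return post titles sorted from highest to lowest value of `key`."""
    ordered = sorted(posts, key=lambda post: post[key], reverse=True)
    return [post["title"] for post in ordered]


by_popularity = rank(posts, "shares")
by_quality = rank(posts, "quality")

print("feed order (popularity):", by_popularity)
print("order by quality:       ", by_quality)
```

In this toy data the feed ordered by shares puts the lowest-quality post on top, which is exactly the ordering a user would see first.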

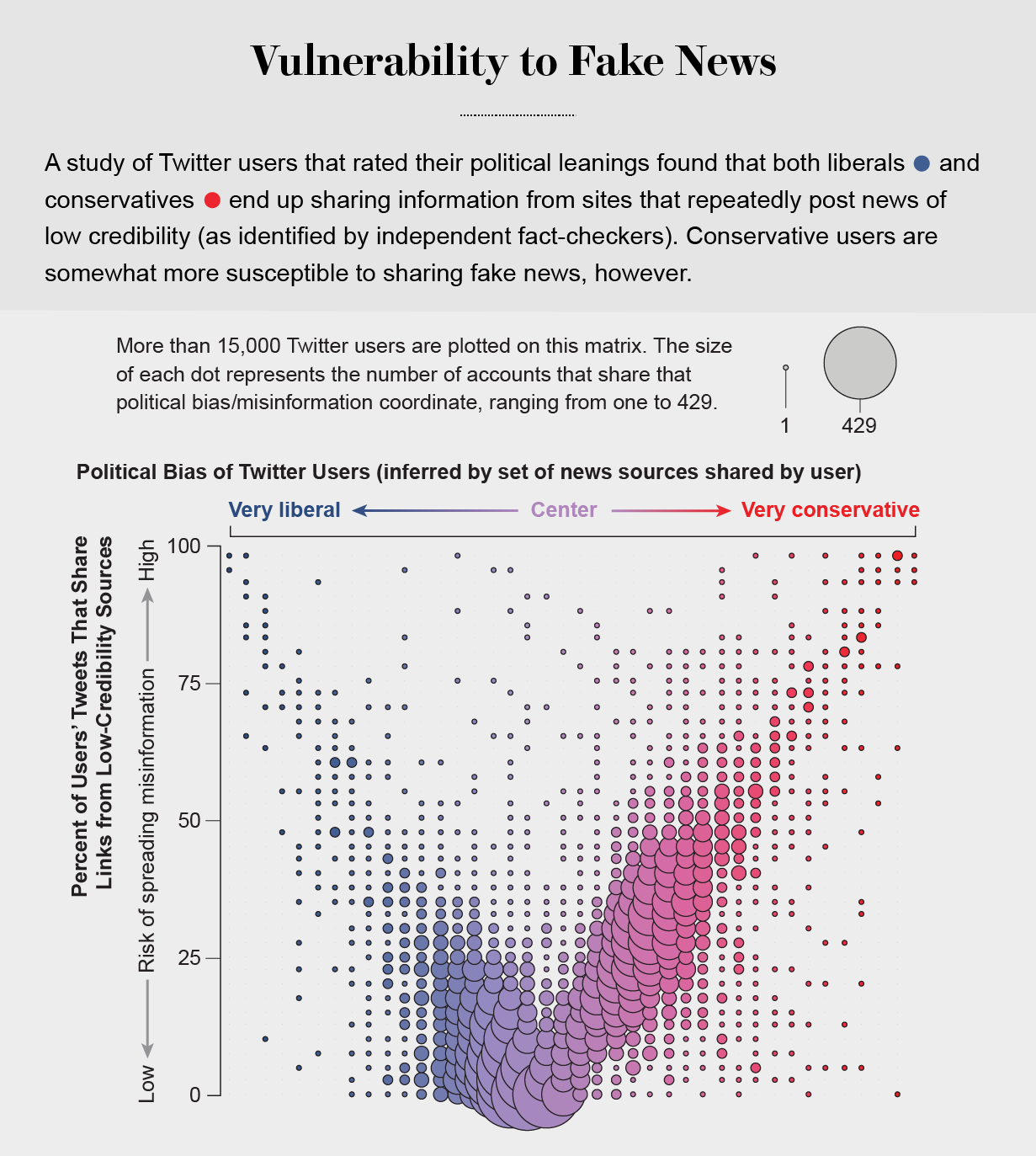

Echo Chambers

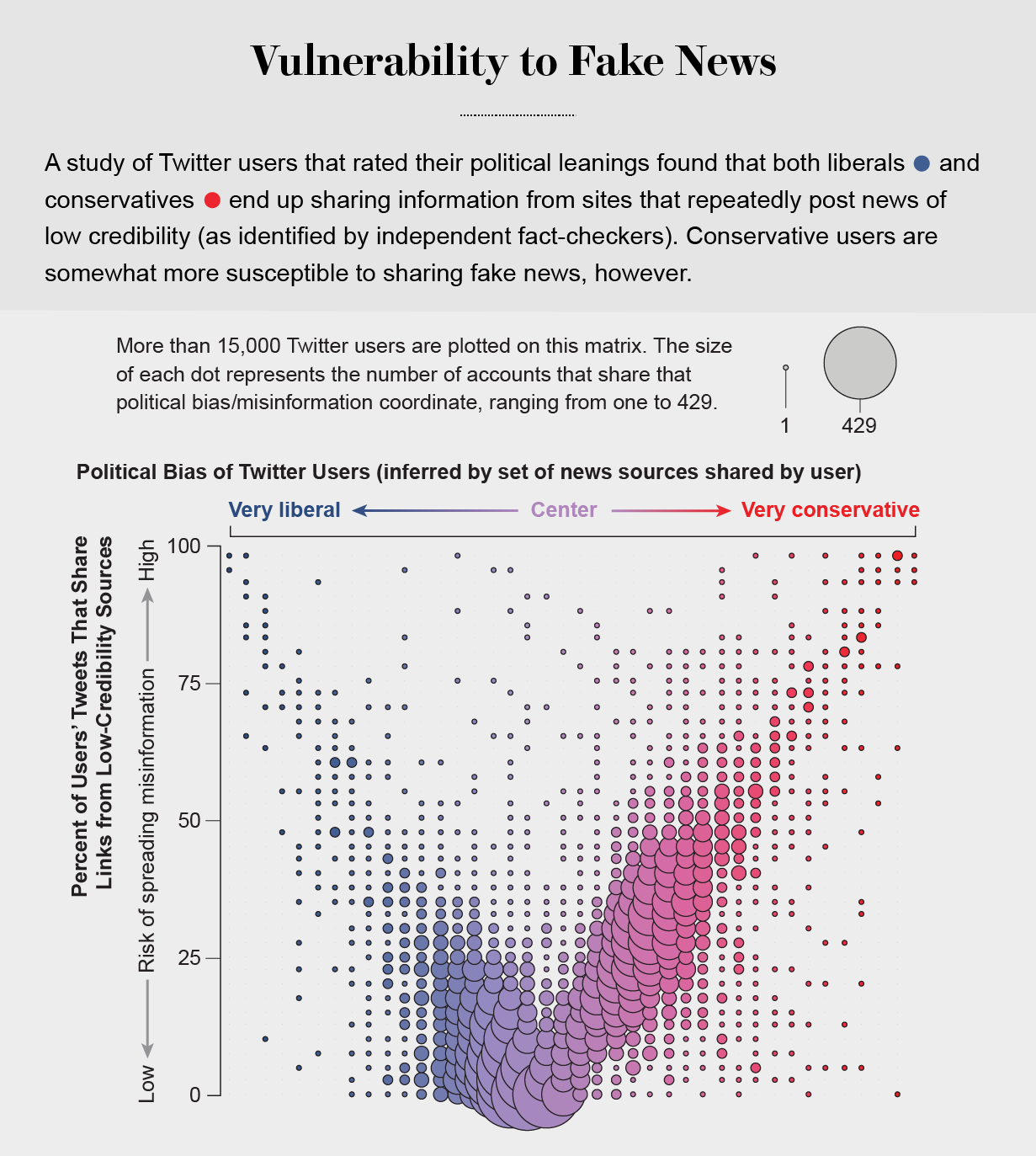

the political echo chambers on Twitter are so extreme that individual users’ political leanings can be predicted with high accuracy: you have the same opinions as the majority of your connections. This chambered structure efficiently spreads information within a community while insulating that community from other groups.
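The "same opinions as the majority of your connections" idea amounts to a majority vote over an account's neighbors. The following is a minimal sketch under that assumption; the account names, the `known_leaning` labels and the `predict_leaning` helper are hypothetical and do not reproduce the researchers' method.

```python
from collections import Counter


def predict_leaning(account, connections, known_leaning):
    """Guess an account's leaning as the most common leaning among its connections.

    Returns None when none of the account's connections has a known leaning.
    """
    labels = [
        known_leaning[contact]
        for contact in connections.get(account, [])
        if contact in known_leaning
    ]
    if not labels:
        return None
    return Counter(labels).most_common(1)[0][0]


# Hypothetical data: who each account is connected to, and a few labeled accounts.
connections = {
    "new_user": ["u1", "u2", "u3", "u4"],
    "lurker": [],
}
known_leaning = {"u1": "left", "u2": "left", "u3": "right", "u4": "left"}

print(predict_leaning("new_user", connections, known_leaning))
print(predict_leaning("lurker", connections, known_leaning))
```

The stronger the echo chamber, the more lopsided the vote, and the more accurate such a simple guess becomes.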

socially shared information not only bolsters our biases but also becomes more resilient to correction.

machine-learning algorithms to detect social bots. One of these, Botometer, is a public tool that extracts 1,200 features from a given Twitter account to characterize its profile, friends, social network structure, temporal activity patterns, language and other features. The program compares these characteristics with those of tens of thousands of previously identified bots to give the Twitter account a score for its likely use of automation.
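To make the "compare with previously identified bots" step concrete, here is a toy nearest-neighbor score. It is not Botometer's algorithm or API, which the excerpt does not describe: the three features (posting rate, retweet fraction, profile completeness, each assumed to be pre-scaled to the range 0 to 1), the labeled examples and the `bot_score` function are all assumptions made for illustration.

```python
import math


def bot_score(features, labeled, k=3):
    """Return the fraction of the k nearest labeled accounts that are bots.

    features: tuple of numeric features for the account being scored.
    labeled:  list of (feature_tuple, is_bot) pairs for known accounts.
    """
    if not labeled:
        raise ValueError("need at least one labeled account")
    nearest = sorted(labeled, key=lambda example: math.dist(features, example[0]))[:k]
    return sum(1 for _, is_bot in nearest if is_bot) / len(nearest)


# Hypothetical labeled accounts:
# (posting rate, retweet fraction, profile completeness), is_bot
labeled = [
    ((0.95, 0.90, 0.05), True),
    ((0.90, 0.85, 0.10), True),
    ((0.85, 0.95, 0.00), True),
    ((0.10, 0.20, 0.80), False),
    ((0.15, 0.30, 0.70), False),
    ((0.05, 0.10, 0.90), False),
]

suspect = (0.92, 0.88, 0.04)
ordinary = (0.12, 0.25, 0.75)

print(bot_score(suspect, labeled))
print(bot_score(ordinary, labeled))
```

A real tool would use many more features and a trained classifier, but the output has the same shape: a score between 0 and 1 for likely automation rather than a yes-or-no verdict.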

Some manipulators play both sides of a divide through separate fake news sites and bots, driving political polarization or monetization by ads.

recently uncovered a network of inauthentic accounts on Twitter that were all coordinated by the same entity. Some pretended to be pro-Trump supporters of the Make America Great Again campaign, whereas others posed as Trump “resisters”; all asked for political donations.

a mobile app called Fakey that helps users learn how to spot misinformation. The game simulates a social media news feed, showing actual articles from low- and high-credibility sources. Users must decide what they can or should not share and what to fact-check. Analysis of data from Fakey confirms the prevalence of online social herding: users are more likely to share low-credibility articles when they believe that many other people have shared them.

Hoaxy shows how any extant meme spreads through Twitter. In this visualization, nodes represent actual Twitter accounts, and links depict how retweets, quotes, mentions and replies propagate the meme from account to account.
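The node-and-link structure described for Hoaxy can be sketched as a small directed graph. This does not use Hoaxy's data or API; the interaction records, the account names and both helper functions are hypothetical. Each record is assumed to point from the account whose post was amplified to the account that amplified it.

```python
from collections import defaultdict

# Made-up records: (source account, amplifying account, interaction type)
interactions = [
    ("alice", "bob", "retweet"),
    ("alice", "carol", "retweet"),
    ("bob", "dave", "quote"),
    ("carol", "dave", "reply"),
    ("dave", "erin", "mention"),
]


def build_diffusion_graph(interactions):
    """Map each account to the (account, interaction type) links leaving it."""
    graph = defaultdict(list)
    for source, target, kind in interactions:
        graph[source].append((target, kind))
        graph.setdefault(target, [])  # make sure leaf accounts appear as nodes
    return dict(graph)


def reached_from(graph, start):
    """Return every account the meme can reach starting from `start`."""
    seen, frontier = {start}, [start]
    while frontier:
        node = frontier.pop()
        for target, _kind in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return seen


graph = build_diffusion_graph(interactions)
print(sorted(reached_from(graph, "alice")))
print(sorted(reached_from(graph, "carol")))
```

Counting how many accounts are reachable from a given node is one simple way to see which accounts do most of the spreading.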

Free communication is not free. By decreasing the cost of information, we have decreased its value and invited its adulteration.