
WHY TECHNOLOGY FAVORS TYRANNY

https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Artificial intelligence could erase many practical advantages of democracy, and erode the ideals of liberty and equality. It will further concentrate power among a small elite if we don’t take steps to stop it.

liberal democracy and free-market economics might become obsolete.

The Russian, Chinese, and Cuban revolutions were made by people who were vital to the economy but lacked political power; in 2016, Trump and Brexit were supported by many people who still enjoyed political power but feared they were losing their economic worth.

artificial intelligence is different from the old machines. In the past, machines competed with humans mainly in manual skills. Now they are beginning to compete with us in cognitive skills. And we don’t know of any third kind of skill—beyond the manual and the cognitive—in which humans will always have an edge.

Israel is a leader in the field of surveillance technology, and has created in the occupied West Bank a working prototype for a total-surveillance regime.

The conflict between democracy and dictatorship is actually a conflict between two different data-processing systems. AI may swing the advantage toward the latter.

As we rely more on Google for answers, our ability to locate information independently diminishes. Already today, “truth” is defined by the top results of a Google search.

The race to accumulate data is already on, and is currently headed by giants such as Google and Facebook and, in China, Baidu and Tencent. So far, many of these companies have acted as “attention merchants”—they capture our attention by providing us with free information, services, and entertainment, and then they resell our attention to advertisers.

We aren’t their customers—we are their product.

Nationalization of data by governments could offer one solution; it would certainly curb the power of big corporations. But history suggests that we are not necessarily better off in the hands of overmighty governments.

https://www.ecampusnews.com/2021/11/11/viewpoint-can-ai-tutors-help-students-learn/

the Kyowon Group, an education company in Korea, recently developed a life-like tutor using artificial intelligence for the very first time in the Korean education industry.

Kyowon created its AI tutors for two-way communication–teacher to student and student to teacher–by exchanging questions and answers between the two about the lesson plan as if they were having an interactive conversation. These AI tutors were able to provide real time feedback related to the learning progress and were also able to identify, manage, and customize interactions with students through learning habits management. In addition, to help motivate student learning, the AI Tutors captured students’ emotions through analysis of their strengths and challenges.

While AI is being used in various industries, including education, the technology comes under scrutiny as many ask whether they can trust AI and its legitimacy.

Although there are some meaningful use cases for deepfake, such as using technology to bring historical figures of the past to life, deepfake technology is mostly exploited. However, the good news is that groups are working to detect and minimize the damage caused by deepfake videos and other AI technology abuses, including credible standards organizations who are working to ensure trust in AI.

For education, AI tutors will be adopted and accepted only with innovative real-time AI conversational technology that includes accurate lip and mouth synchronization in addition to video synthesis technology. Using real models, not fake computer-generated ones, is critical as well.

Artificial intelligence (AI) training costs, for example, are dropping 40-70% at an annual rate, a record-breaking deflationary force. AI is likely to transform every sector, industry, and company during the next 5 to 10 years.
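To make the compounding in that claim concrete, here is a minimal arithmetic sketch. It assumes the 40-70% figure is a constant year-over-year decline and uses a hypothetical starting cost of 100 units; neither assumption comes from the quoted source.

```python
def remaining_cost(start_cost: float, annual_decline: float, years: int) -> float:
    """Cost left after `years` of a constant fractional decline per year."""
    return start_cost * (1.0 - annual_decline) ** years


if __name__ == "__main__":
    start = 100.0  # hypothetical starting cost, arbitrary units
    for decline in (0.40, 0.70):  # the two ends of the quoted range
        for years in (5, 10):
            cost = remaining_cost(start, decline, years)
            print(f"{decline:.0%} annual decline, {years} years: {cost:.4f} units left")
```

Under these assumptions, even the slower 40% rate leaves less than a tenth of the original cost after five years.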

+++++++++++++++++

More on artificial intelligence in this blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence+education

Hands-on AI projects for the classroom

Elementary school level

https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/Artificial%20Intelligence/AIGDK5_1120.pdf

Secondary teachers

https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/Artificial%20Intelligence/AIGDSE_1120.pdf

Elective educators guides

https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/Artificial%20Intelligence/AIGDEL_0820-red.pdf

Computer science educators guides

https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/Artificial%20Intelligence/AIGDCS_0820-red.pdf

The Artificial Intelligence (AI) for K-12 initiative (AI4K12) is jointly sponsored by AAAI and CSTA.

Home page

ISTE Standards and Computational Thinking Competencies can help frame the inclusion and development of AI-related projects in K–12 classrooms. The ISTE Standards for Students identify the skills and knowledge that K–12 students need to thrive, grow, and contribute in a global, interconnected, and constantly changing society. The Computational Thinking Competencies for Educators identify the skills educators need to successfully prepare students to become innovators and problem-solvers in a digital world.

https://www.iste.org/standards/iste-standards-for-computational-thinking

The Next Wave of Edtech Will Be Very, Very Big — and Global

https://www-edsurge-com.cdn.ampproject.org/c/s/www.edsurge.com/amp/news/2021-07-30-the-next-wave-of-edtech-will-be-very-very-big-and-global

India’s Byju’s

Few companies have tackled the full range of learners since the days when Pearson was touted as the world’s largest learning company. Those that do, however, are increasingly huge (like PowerSchool, which had an IPO this week) and work across international borders.

Chinese education giants, including TAL and New Oriental.

The meteoric rise of Chinese edtech companies has dimmed recently as the Chinese government shifted regulations around online tutoring, in an effort to “protect students’ right to rest, improve the quality of school education and reduce the burden on parents.”

Acquisitions and partnerships are a cornerstone of Byju’s early learning programs: It bought Palo Alto-based Osmo in 2019, which combines digital learning with manipulatives, an approach the companies call “phygital.” For instance: Using a tablet’s camera and Osmo’s artificial intelligence software, the system tracks what a child is doing on a (physical) worksheet and responds accordingly to right and wrong answers. “It’s almost like having a teacher looking over you,

My note: this can become disastrous when combined with China’s “social credit” system.

By contrast, Byju’s FutureSchool (launched in the U.S. this past spring) aims to offer one-to-one tutoring sessions starting with coding (based in part on WhiteHat Jr., which it acquired in August 2020) and eventually including music, fine arts and English to students in the U.S., Brazil, the U.K., Indonesia and others. The company has recruited 11,000 teachers in India to staff the sessions

In mid-July, Byju’s bought California-based reading platform Epic for $500 million. That product opens up a path for Byju’s to schools. Epic offers a digital library of more than 40,000 books for students ages 12 and under. Consumers pay about $80 a year for the library. It’s free to schools. Epic says that more than 1 million teachers in 90 percent of U.S. elementary schools have signed up for accounts.

That raises provocative questions for U.S. educators. Among them:

- How will products originally developed for the consumer market fit the needs of schools, particularly those that serve disadvantaged students?

- Will there be more development dollars poured into products that appeal to consumers—and less into products that consumers typically skip (say, middle school civics or history curriculum?)

- How much of an investment will giants such as Byju’s put into researching the effectiveness of its products? In the past most consumers have been less concerned than professional educators about the “research” behind the learning products they buy. Currently Gokulnath says the company most closely tracks metrics such as “engagement” (how much time students spend on the product) and “renewals” (how many customers reup after a year’s use of the product.)

- How will products designed for home users influence parents considering whether to continue to school at home in the wake of viral pandemics?

What is AI? Here’s everything you need to know about artificial intelligence

An executive guide to artificial intelligence, from machine learning and general AI to neural networks.

https://www-zdnet-com.cdn.ampproject.org/c/s/www.zdnet.com/google-amp/article/what-is-ai-heres-everything-you-need-to-know-about-artificial-intelligence/

What is artificial intelligence (AI)?

It depends who you ask.

What are the uses for AI?

What are the different types of AI?

Narrow AI is what we see all around us in computers today — intelligent systems that have been taught or have learned how to carry out specific tasks without being explicitly programmed how to do so.

General AI

General AI is very different and is the type of adaptable intellect found in humans, a flexible form of intelligence capable of learning how to carry out vastly different tasks, anything from haircutting to building spreadsheets or reasoning about a wide variety of topics based on its accumulated experience.

What can Narrow AI do?

There are a vast number of emerging applications for narrow AI:

- Interpreting video feeds from drones carrying out visual inspections of infrastructure such as oil pipelines.

- Organizing personal and business calendars.

- Responding to simple customer-service queries.

- Coordinating with other intelligent systems to carry out tasks like booking a hotel at a suitable time and location.

- Helping radiologists to spot potential tumors in X-rays.

- Flagging inappropriate content online, detecting wear and tear in elevators from data gathered by IoT devices.

- Generating a 3D model of the world from satellite imagery… the list goes on and on.

What can General AI do?

A survey conducted among four groups of experts in 2012/13 by AI researchers Vincent C Müller and philosopher Nick Bostrom reported a 50% chance that Artificial General Intelligence (AGI) would be developed between 2040 and 2050, rising to 90% by 2075.

What is machine learning?

What are neural networks?

What are other types of AI?

Another area of AI research is evolutionary computation.

What is fueling the resurgence in AI?

What are the elements of machine learning?

As mentioned, machine learning is a subset of AI and is generally split into two main categories: supervised and unsupervised learning.

Supervised learning

Unsupervised learning
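The difference between the two categories can be sketched with a toy example. This is a minimal illustration on made-up one-dimensional data, not taken from the ZDNet guide: the supervised function learns a cut-off from labeled examples, while the unsupervised one groups the same values without any labels (a two-cluster k-means). All function names and data are hypothetical.

```python
from statistics import mean


def fit_threshold(values, labels):
    """Supervised: use labeled examples to place a cut-off between two classes."""
    low = mean(v for v, y in zip(values, labels) if y == 0)
    high = mean(v for v, y in zip(values, labels) if y == 1)
    return (low + high) / 2.0


def two_means(values, iterations=10):
    """Unsupervised: find two group centers in unlabeled values (1-D k-means, k=2)."""
    c0, c1 = min(values), max(values)  # start from the extremes
    for _ in range(iterations):
        g0 = [v for v in values if abs(v - c0) <= abs(v - c1)]
        g1 = [v for v in values if abs(v - c0) > abs(v - c1)]
        if not g0 or not g1:  # all values fell into one group; stop
            break
        c0, c1 = mean(g0), mean(g1)
    return c0, c1


if __name__ == "__main__":
    values = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]  # made-up measurements
    labels = [0, 0, 0, 1, 1, 1]              # only the supervised step sees these
    print("learned cut-off:", fit_threshold(values, labels))
    print("discovered centers:", two_means(values))
```

The supervised step needs someone to supply the labels; the unsupervised step finds the two groups by itself but cannot say what they mean.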

Which are the leading firms in AI?

Which AI services are available?

All of the major cloud platforms — Amazon Web Services, Microsoft Azure and Google Cloud Platform — provide access to GPU arrays for training and running machine-learning models, with Google also gearing up to let users use its Tensor Processing Units — custom chips whose design is optimized for training and running machine-learning models.

Which countries are leading the way in AI?

It’d be a big mistake to think the US tech giants have the field of AI sewn up. Chinese firms Alibaba, Baidu, and Lenovo invest heavily in AI in fields ranging from e-commerce to autonomous driving. As a country, China is pursuing a three-step plan to turn AI into a core industry for the country, one that will be worth 150 billion yuan ($22bn) by the end of 2020 to become the world’s leading AI power by 2030.

How can I get started with AI?

While you could buy a moderately powerful Nvidia GPU for your PC — somewhere around the Nvidia GeForce RTX 2060 or faster — and start training a machine-learning model, probably the easiest way to experiment with AI-related services is via the cloud.

How will AI change the world?

Robots and driverless cars

Fake news

Facial recognition and surveillance

Healthcare

Reinforcing discrimination and bias

AI and global warming (climate change)

Will AI kill us all?

+++++++++++++

more on AI in this IMS blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence+education

Project centers on artificial intelligence; new National Institute in AI to be headquartered at Georgia Tech

https://gra.org/blog/209

“The goal of ALOE is to develop new artificial intelligence theories and techniques to make online education for adults at least as effective as in-person education in STEM fields,” says Co-PI Ashok Goel, Professor of Computer Science and Human-Centered Computing and the Chief Scientist with the Center for 21st Century Universities at Georgia Tech.

Research and development at ALOE aims to blend online educational resources and courses to make education more widely available, as well as use virtual assistants to make it more affordable and achievable. According to Goel, ALOE will make fundamental advances in personalization at scale, machine teaching, mutual theory of mind and responsible AI.

The ALOE Institute represents a powerful consortium of several universities (Arizona State, Drexel, Georgia Tech, Georgia State, Harvard, UNC-Greensboro); technical colleges in TCSG; major industrial partners (Boeing, IBM and Wiley); and non-profit organizations (GRA and IMS).

+++++++++++++++++++++++

more on AI in this IMS blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence

https://blog.stcloudstate.edu/ims?s=online+education

EDUCAUSE QuickPoll Results: Artificial Intelligence Use in Higher Education

D. Christopher Brooks, Friday, June 11, 2021

https://er.educause.edu/articles/2021/6/educause-quickpoll-results-artificial-intelligence-use-in-higher-education

AI is being used to monitor students and their work. The most prominent uses of AI in higher education are attached to applications designed to protect or preserve academic integrity through the use of plagiarism-detection software (60%) and proctoring applications (42%) (see figure 1).

The chatbots are coming! A sizable percentage (36%) of respondents reported that chatbots and digital assistants are in use at least somewhat on their campuses, with another 17% reporting that their institutions are in the planning, piloting, and initial stages of use (see figure 2). The use of chatbots in higher education by admissions, student affairs, career services, and other student success and support units is not entirely new, but the pandemic has likely contributed to an increase in their use as they help students get efficient, relevant, and correct answers to their questions without long waits. Chatbots may also liberate staff from repeatedly responding to the same questions and reduce errors by deploying updates immediately and universally.

AI is being used for student success tools such as identifying students who are at-risk academically (22%) and sending early academic warnings (16%); another 14% reported that their institutions are in the stage of planning, piloting, and initial usage of AI for these tasks.

Nearly three-quarters of respondents said that ineffective data management and integration (72%) and insufficient technical expertise (71%) present at least a moderate challenge to AI implementation. Financial concerns (67%) and immature data governance (66%) also pose challenges. Insufficient leadership support (56%) is a foundational challenge that is related to each of the previous listed challenges in this group.

Current use of AI

- Chatbots for informational and technical support, HR benefits questions, parking questions, service desk questions, and student tutoring

- Research applications, conducting systematic reviews and meta-analyses, and data science research (my italics)

- Library services (my italics)

- Recruitment of prospective students

- Providing individual instructional material pathways, assessment feedback, and adaptive learning software

- Proctoring and plagiarism detection

- Student engagement support and nudging, monitoring well-being, and predicting likelihood of disengaging from the institution

- Detection of network attacks

- Recommender systems

++++++++++++++++++

more on AI in education in this IMS blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence+education

https://www.facebook.com/groups/onlinelearningcollective/permalink/761389994491701/

“What the invention of photography did to painting, this will do to teaching.”

AI can write a passing college paper in 20 minutes

Natural language processing is on the cusp of changing our relationship with machines forever.

https://www.zdnet.com/article/ai-can-write-a-passing-college-paper-in-20-minutes/

The specific AI — GPT-3, for Generative Pre-trained Transformer 3 — was released in June 2020 by OpenAI, a research business co-founded by Elon Musk. It was developed to create content with a human language structure better than any of its predecessors.

According to a 2019 paper by the Allen Institute of Artificial Intelligence, machines fundamentally lack commonsense reasoning — the ability to understand what they’re writing. That finding is based on a critical reevaluation of standard tests to determine commonsense reasoning in machines, such as the Winograd Schema Challenge.

Which makes the results of the EduRef experiment that much more striking. The writing prompts were given in a variety of subjects, including U.S. History, Research Methods (Covid-19 Vaccine Efficacy), Creative Writing, and Law. GPT-3 managed to score a “C” average across four subjects from professors, failing only one assignment.

Aside from potentially troubling implications for educators, what this points to is a dawning inflection point for natural language processing, heretofore a decidedly human characteristic.

++++++++++++++

more on artificial intelligence in this IMS blog

https://blog.stcloudstate.edu/ims?s=artificial+intelligence

more on paper mills in this IMS blog

https://blog.stcloudstate.edu/ims?s=paper+mills

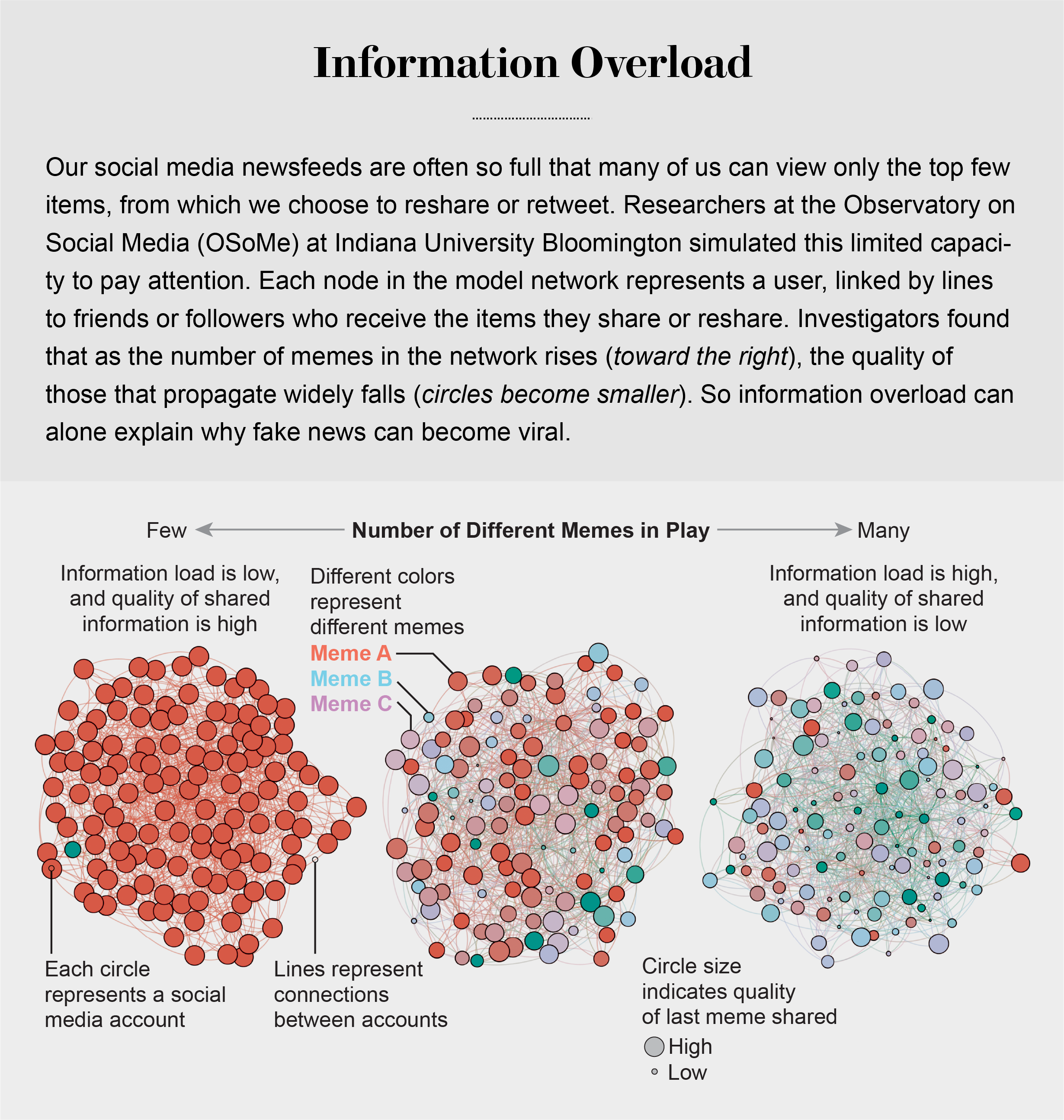

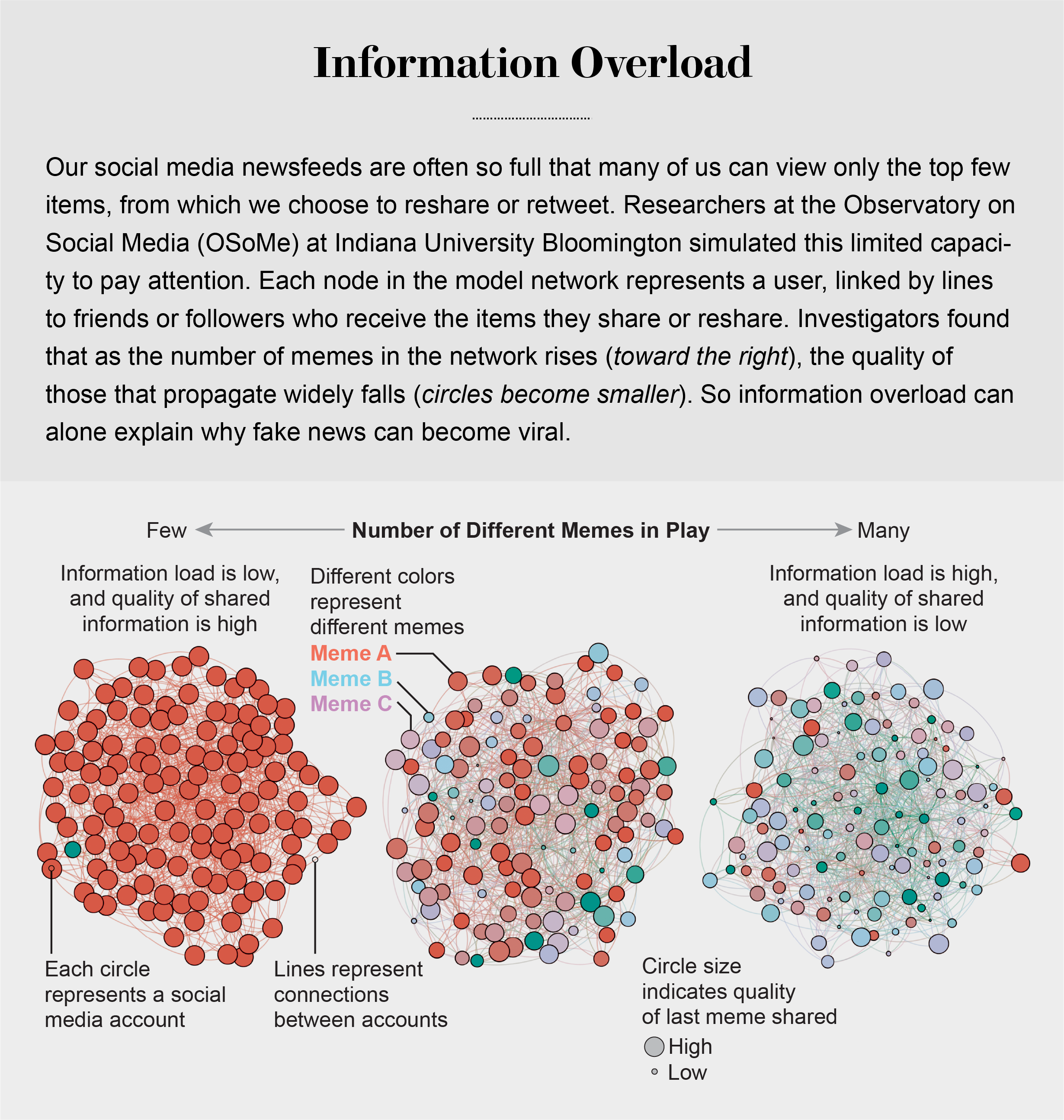

Information Overload Helps Fake News Spread, and Social Media Knows It

Understanding how algorithm manipulators exploit our cognitive vulnerabilities empowers us to fight back

https://www.scientificamerican.com/article/information-overload-helps-fake-news-spread-and-social-media-knows-it/

a minefield of cognitive biases.

People who behaved in accordance with them—for example, by staying away from the overgrown pond bank where someone said there was a viper—were more likely to survive than those who did not.

Compounding the problem is the proliferation of online information. Viewing and producing blogs, videos, tweets and other units of information called memes has become so cheap and easy that the information marketplace is inundated. My note: folksonomy at its worst.

At the University of Warwick in England and at Indiana University Bloomington’s Observatory on Social Media (OSoMe, pronounced “awesome”), our teams are using cognitive experiments, simulations, data mining and artificial intelligence to comprehend the cognitive vulnerabilities of social media users.

developing analytical and machine-learning aids to fight social media manipulation.

As Nobel Prize–winning economist and psychologist Herbert A. Simon noted, “What information consumes is rather obvious: it consumes the attention of its recipients.”

attention economy

Our models revealed that even when we want to see and share high-quality information, our inability to view everything in our news feeds inevitably leads us to share things that are partly or completely untrue.

Frederic Bartlett

Cognitive biases greatly worsen the problem.

We now know that our minds do this all the time: they adjust our understanding of new information so that it fits in with what we already know. One consequence of this so-called confirmation bias is that people often seek out, recall and understand information that best confirms what they already believe.

This tendency is extremely difficult to correct.

Making matters worse, search engines and social media platforms provide personalized recommendations based on the vast amounts of data they have about users’ past preferences.

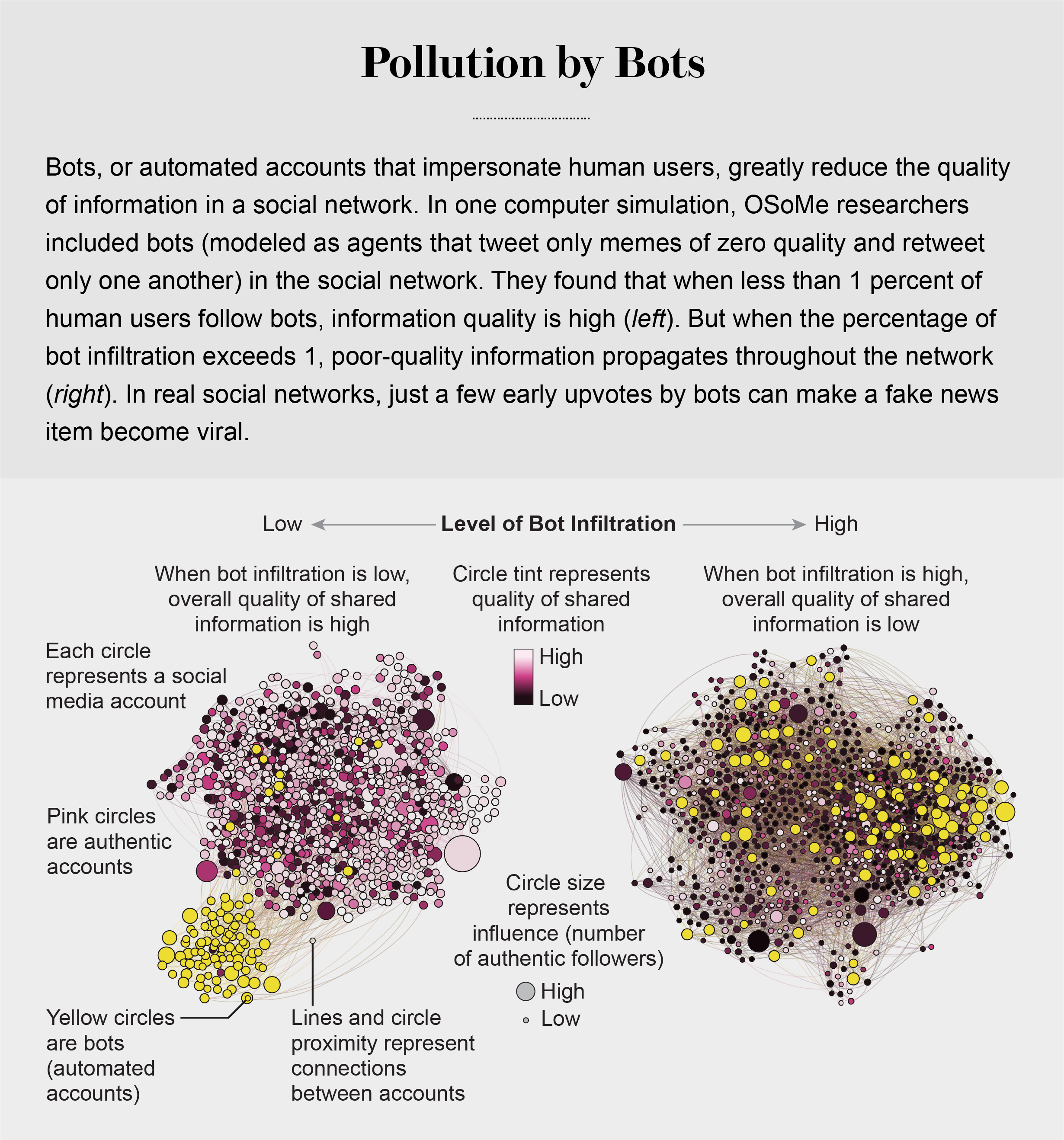

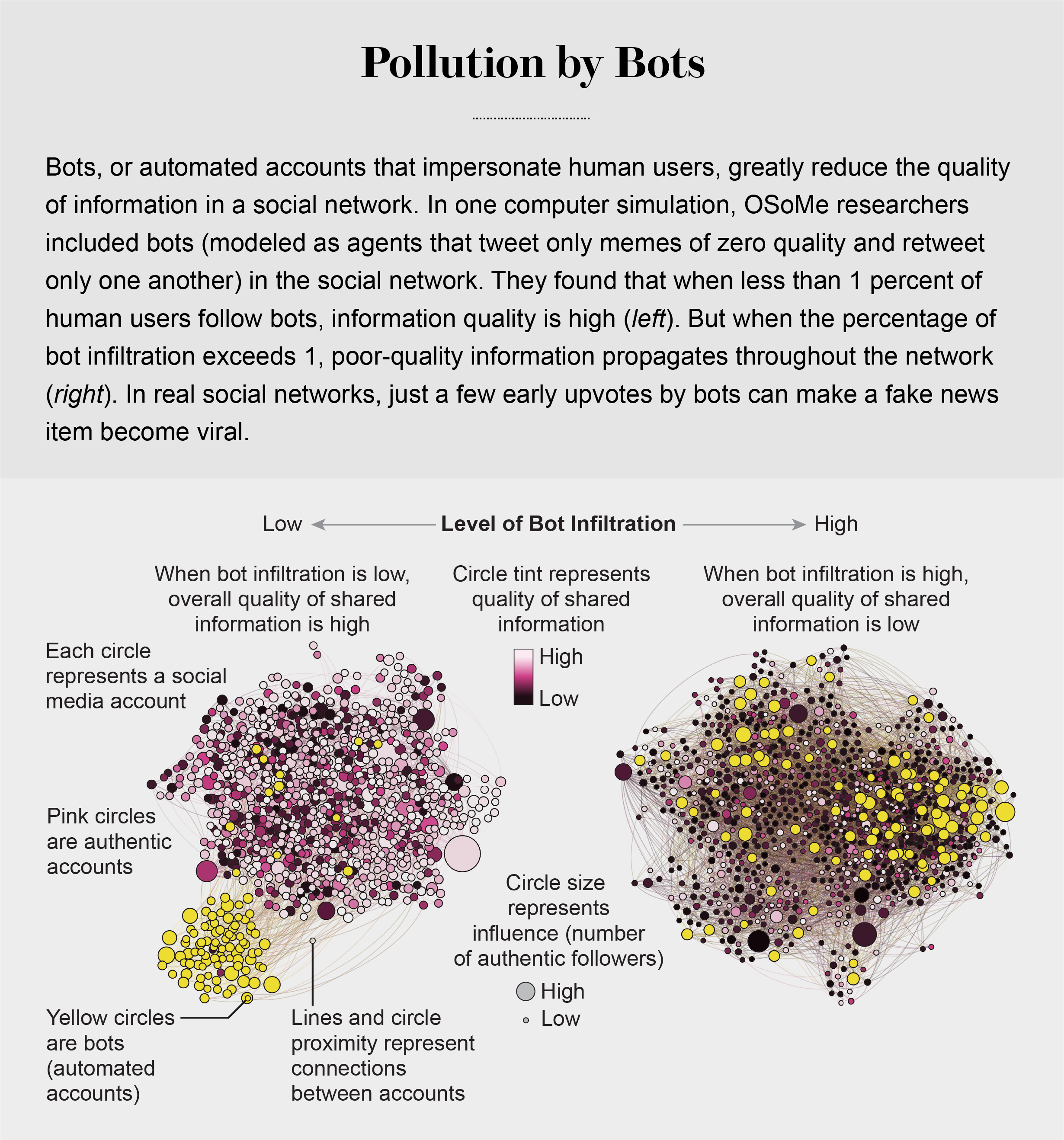

pollution by bots

Social Herding

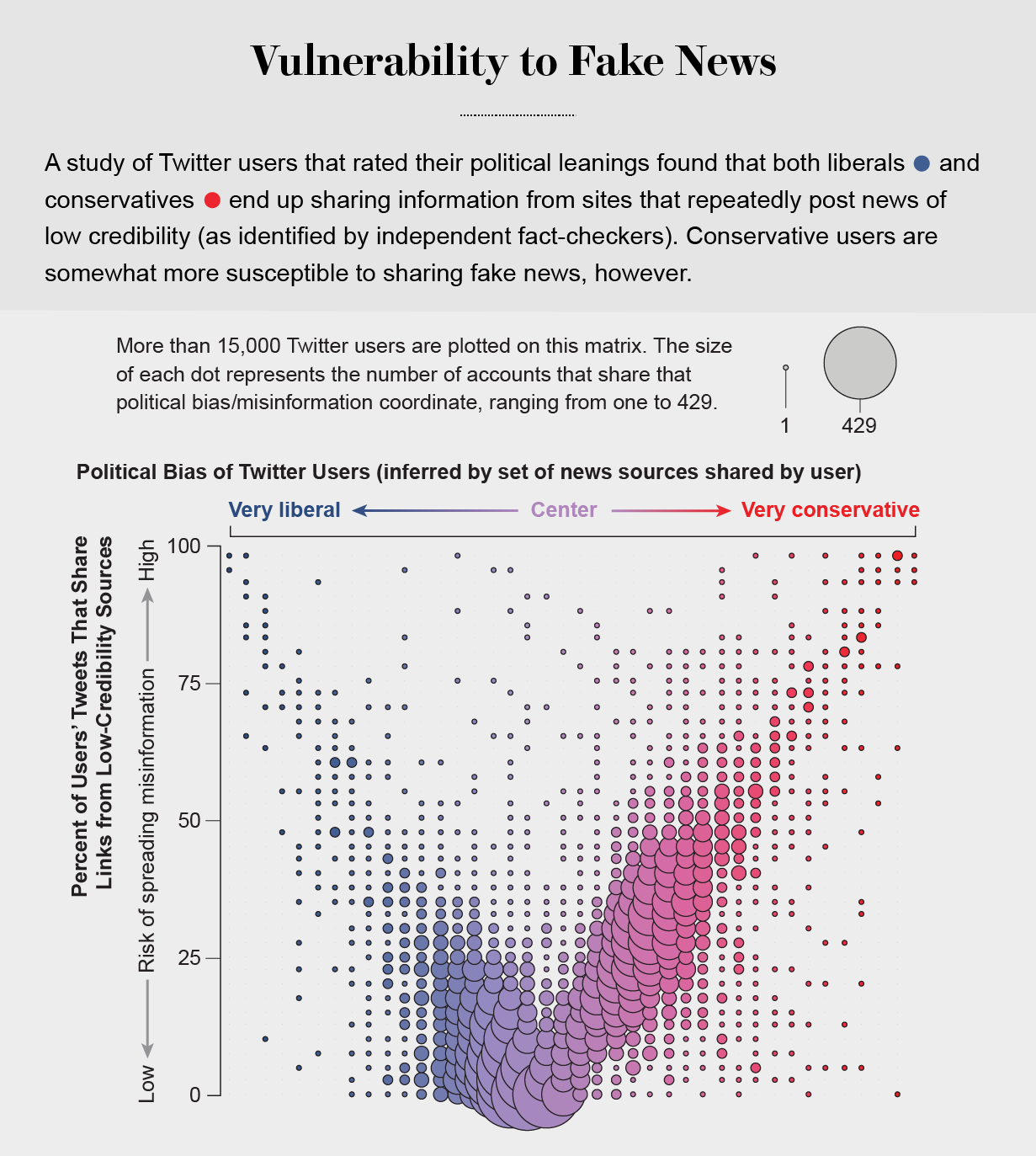

social groups create a pressure toward conformity so powerful that it can overcome individual preferences, and by amplifying random early differences, it can cause segregated groups to diverge to extremes.

Social media follows a similar dynamic. We confuse popularity with quality and end up copying the behavior we observe.

information is transmitted via “complex contagion”: when we are repeatedly exposed to an idea, typically from many sources, we are more likely to adopt and reshare it.
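The idea of complex contagion can be sketched as a simple threshold model: an account adopts a meme only after enough of its contacts have adopted it. This is a generic textbook-style simulation on a made-up five-node network, not the authors' model; the function name, the network and the threshold values are all assumptions.

```python
def spread(neighbors, seeds, threshold):
    """Return the set of adopters once no further node can adopt.

    A node adopts when at least `threshold` of its neighbors have adopted.
    """
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, nbrs in neighbors.items():
            if node in adopted:
                continue
            exposures = sum(1 for n in nbrs if n in adopted)
            if exposures >= threshold:
                adopted.add(node)
                changed = True
    return adopted


if __name__ == "__main__":
    # Hypothetical network: each key lists the accounts it follows closely.
    network = {
        "a": ["b", "c"],
        "b": ["a", "c", "d"],
        "c": ["a", "b", "d"],
        "d": ["b", "c", "e"],
        "e": ["d"],
    }
    print(sorted(spread(network, {"a", "b"}, threshold=2)))
    print(sorted(spread(network, {"a"}, threshold=2)))
```

With a threshold of two, the meme spreads through the tightly connected cluster but never reaches "e", which hears it from only one source; a single seed goes nowhere at all.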

In addition to showing us items that conform with our views, social media platforms such as Facebook, Twitter, YouTube and Instagram place popular content at the top of our screens and show us how many people have liked and shared something. Few of us realize that these cues do not provide independent assessments of quality.

programmers who design the algorithms for ranking memes on social media assume that the “wisdom of crowds” will quickly identify high-quality items; they use popularity as a proxy for quality. My note: again, ill-conceived folksonomy.

Echo Chambers

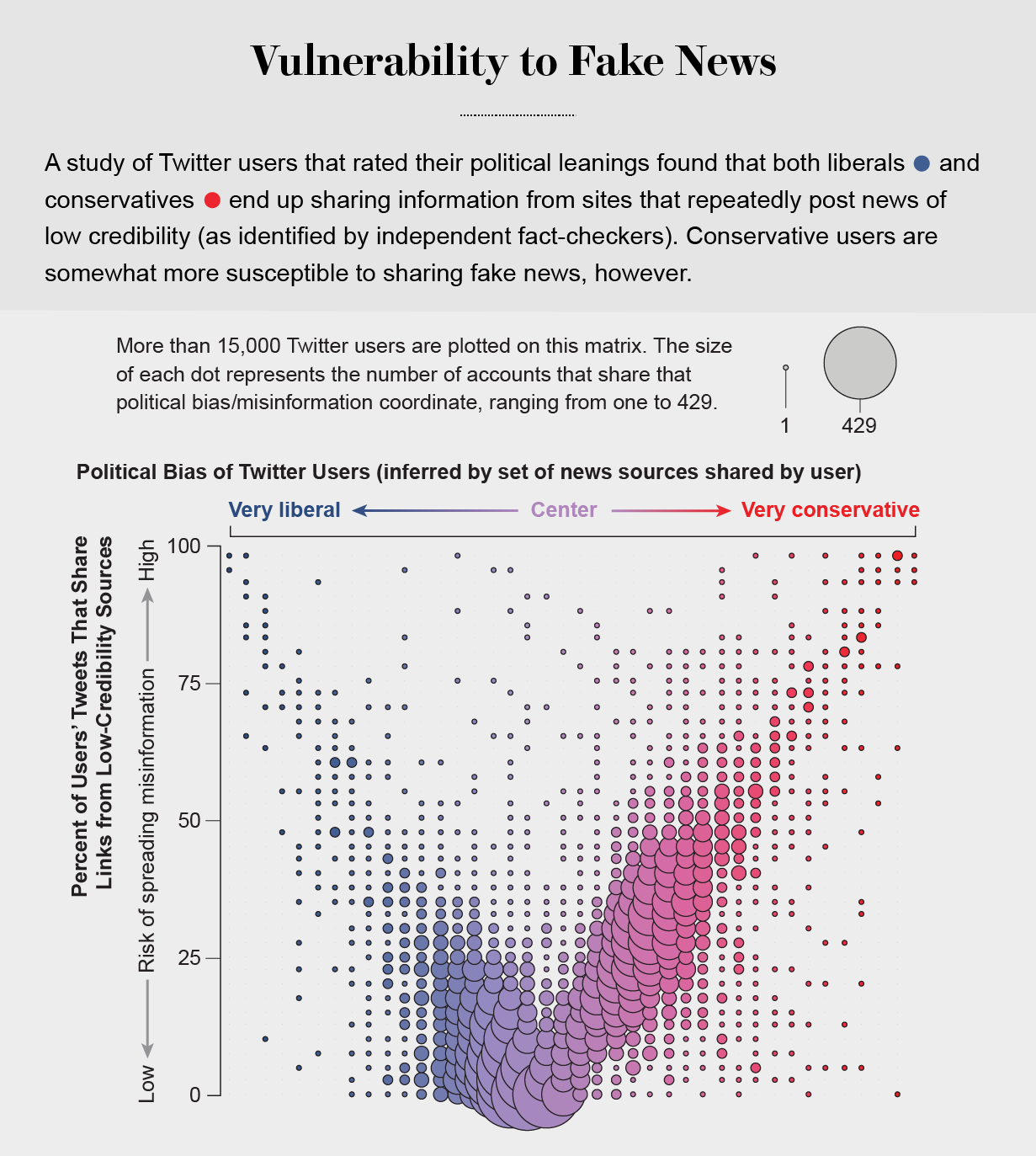

the political echo chambers on Twitter are so extreme that individual users’ political leanings can be predicted with high accuracy: you have the same opinions as the majority of your connections. This chambered structure efficiently spreads information within a community while insulating that community from other groups.

socially shared information not only bolsters our biases but also becomes more resilient to correction.

machine-learning algorithms to detect social bots. One of these, Botometer, is a public tool that extracts 1,200 features from a given Twitter account to characterize its profile, friends, social network structure, temporal activity patterns, language and other features. The program compares these characteristics with those of tens of thousands of previously identified bots to give the Twitter account a score for its likely use of automation.
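The general approach described here, comparing an account's features with those of known bots to produce a score, can be sketched as follows. This is not Botometer's actual model or feature set: it swaps in a plain k-nearest-neighbors vote over three hypothetical features (posts per day, share of retweets, follower-to-friend ratio) with made-up data.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def bot_score(account, labeled_examples, k=3):
    """Fraction of the k most similar labeled accounts that are bots (0.0 to 1.0)."""
    if not labeled_examples:
        raise ValueError("need at least one labeled example")
    nearest = sorted(labeled_examples, key=lambda ex: distance(account, ex[0]))[:k]
    return sum(1 for _, is_bot in nearest if is_bot) / len(nearest)


if __name__ == "__main__":
    # Hypothetical training data: (features, is_bot).
    # Features are unscaled, so posts per day dominates the distance.
    examples = [
        ((200.0, 0.95, 0.10), True),
        ((150.0, 0.90, 0.20), True),
        ((180.0, 0.85, 0.05), True),
        ((5.0, 0.20, 1.10), False),
        ((8.0, 0.30, 0.90), False),
        ((3.0, 0.10, 1.50), False),
    ]
    print(bot_score((170.0, 0.90, 0.10), examples))
    print(bot_score((6.0, 0.25, 1.00), examples))
```

A real detector would use far more features, scale them, and train on many more labeled accounts; the sketch only shows why the output is a likelihood score, not a yes-or-no verdict.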

Some manipulators play both sides of a divide through separate fake news sites and bots, driving political polarization or monetization by ads.

recently uncovered a network of inauthentic accounts on Twitter that were all coordinated by the same entity. Some pretended to be pro-Trump supporters of the Make America Great Again campaign, whereas others posed as Trump “resisters”; all asked for political donations.

a mobile app called Fakey that helps users learn how to spot misinformation. The game simulates a social media news feed, showing actual articles from low- and high-credibility sources. Users must decide what they can or should not share and what to fact-check. Analysis of data from Fakey confirms the prevalence of online social herding: users are more likely to share low-credibility articles when they believe that many other people have shared them.

Hoaxy shows how any extant meme spreads through Twitter. In this visualization, nodes represent actual Twitter accounts, and links depict how retweets, quotes, mentions and replies propagate the meme from account to account.
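The node-and-link structure described here can be represented with a very small data structure. The sketch below is only an illustration of that idea, not Hoaxy's code or data format; the account names, the record layout and the direction convention (from the account that posted to the account that passed it on) are assumptions.

```python
from collections import Counter


def build_graph(interactions):
    """Build node and link collections from (source, target, kind) records.

    Nodes are accounts; each link counts how often one kind of interaction
    carried the meme from `source` to `target`.
    """
    nodes = set()
    links = Counter()
    for source, target, kind in interactions:
        nodes.update((source, target))
        links[(source, target, kind)] += 1
    return nodes, links


if __name__ == "__main__":
    # Hypothetical interaction log for one meme.
    log = [
        ("@origin", "@fan1", "retweet"),
        ("@origin", "@fan1", "retweet"),
        ("@origin", "@fan2", "quote"),
        ("@fan1", "@fan3", "reply"),
    ]
    nodes, links = build_graph(log)
    print(sorted(nodes))
    for (source, target, kind), count in sorted(links.items()):
        print(f"{source} -> {target} ({kind}) x{count}")
```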

Free communication is not free. By decreasing the cost of information, we have decreased its value and invited its adulteration.