Dec 2020

iLRN 2021

More on Virbela in this IMS blog

https://blog.stcloudstate.edu/ims?s=virbela

Digital Literacy for St. Cloud State University


Understanding how algorithm manipulators exploit our cognitive vulnerabilities empowers us to fight back

A minefield of cognitive biases.

People who behaved in accordance with them—for example, by staying away from the overgrown pond bank where someone said there was a viper—were more likely to survive than those who did not.

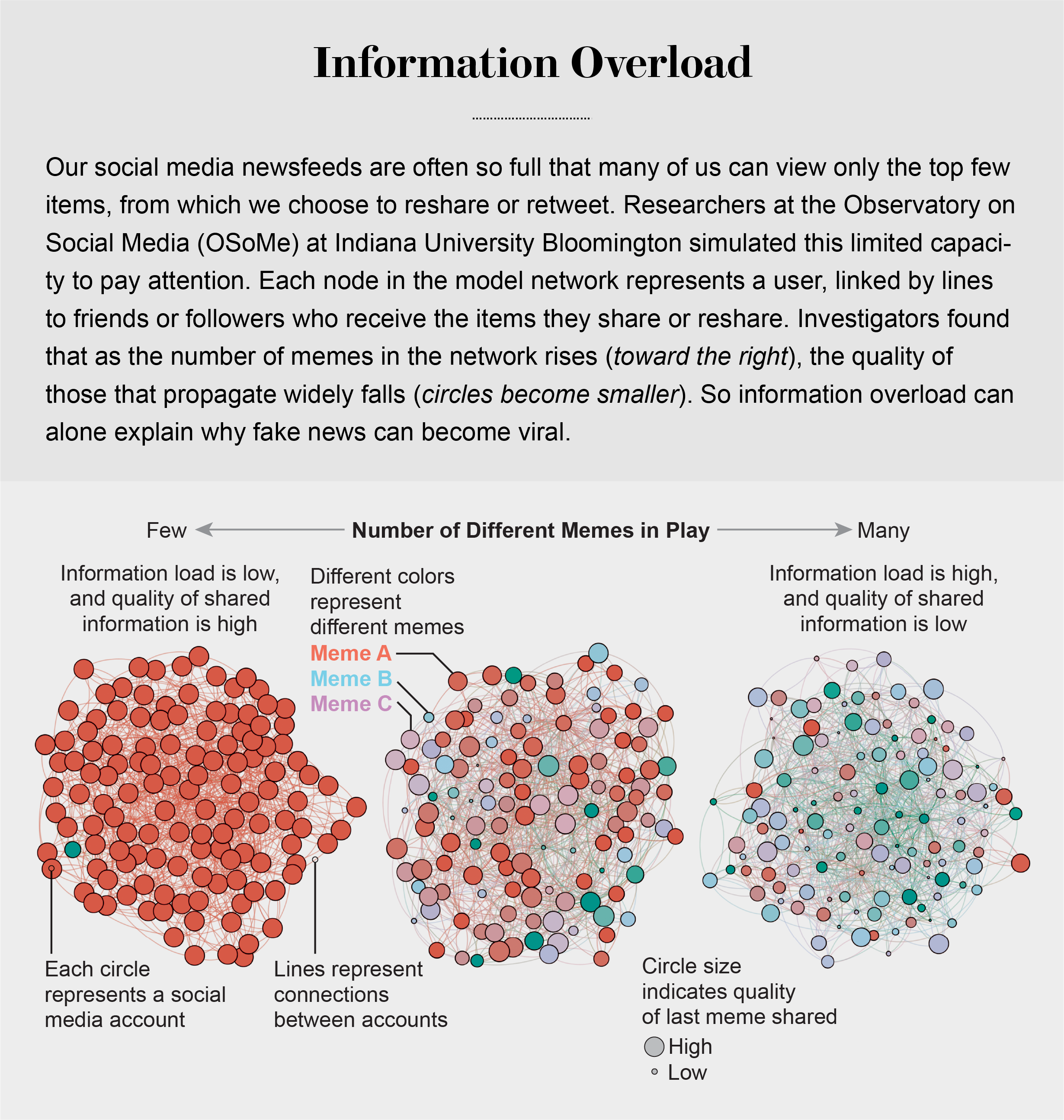

Compounding the problem is the proliferation of online information. Viewing and producing blogs, videos, tweets and other units of information called memes has become so cheap and easy that the information marketplace is inundated. My note: folksonomy at its worst.

At the University of Warwick in England and at Indiana University Bloomington’s Observatory on Social Media (OSoMe, pronounced “awesome”), our teams are using cognitive experiments, simulations, data mining and artificial intelligence to comprehend the cognitive vulnerabilities of social media users.

Developing analytical and machine-learning aids to fight social media manipulation.

As Nobel Prize–winning economist and psychologist Herbert A. Simon noted, “What information consumes is rather obvious: it consumes the attention of its recipients.”

Attention economy

Our models revealed that even when we want to see and share high-quality information, our inability to view everything in our news feeds inevitably leads us to share things that are partly or completely untrue.

Frederic Bartlett

Cognitive biases greatly worsen the problem.

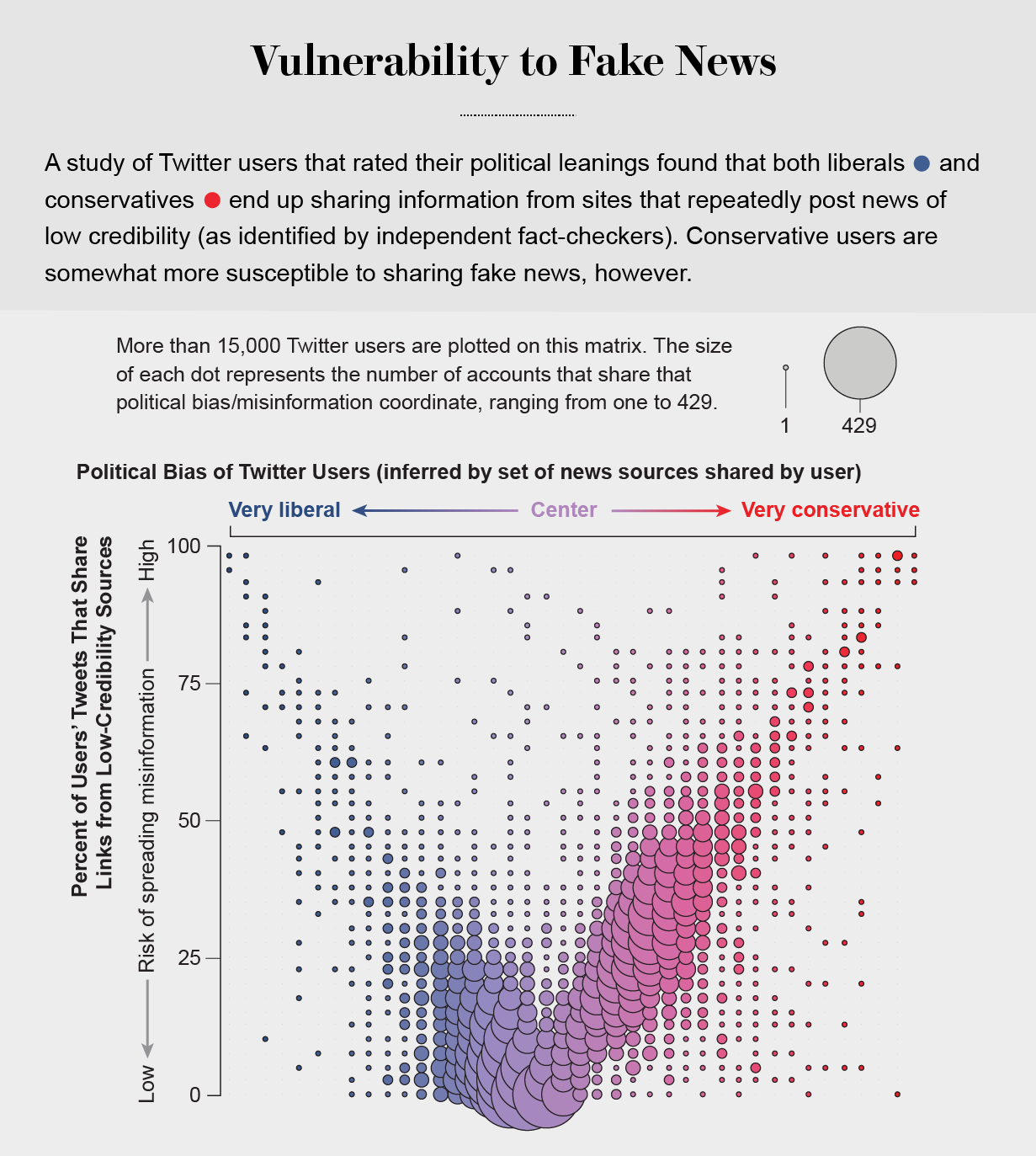

We now know that our minds do this all the time: they adjust our understanding of new information so that it fits in with what we already know. One consequence of this so-called confirmation bias is that people often seek out, recall and understand information that best confirms what they already believe.

This tendency is extremely difficult to correct.

Making matters worse, search engines and social media platforms provide personalized recommendations based on the vast amounts of data they have about users’ past preferences.

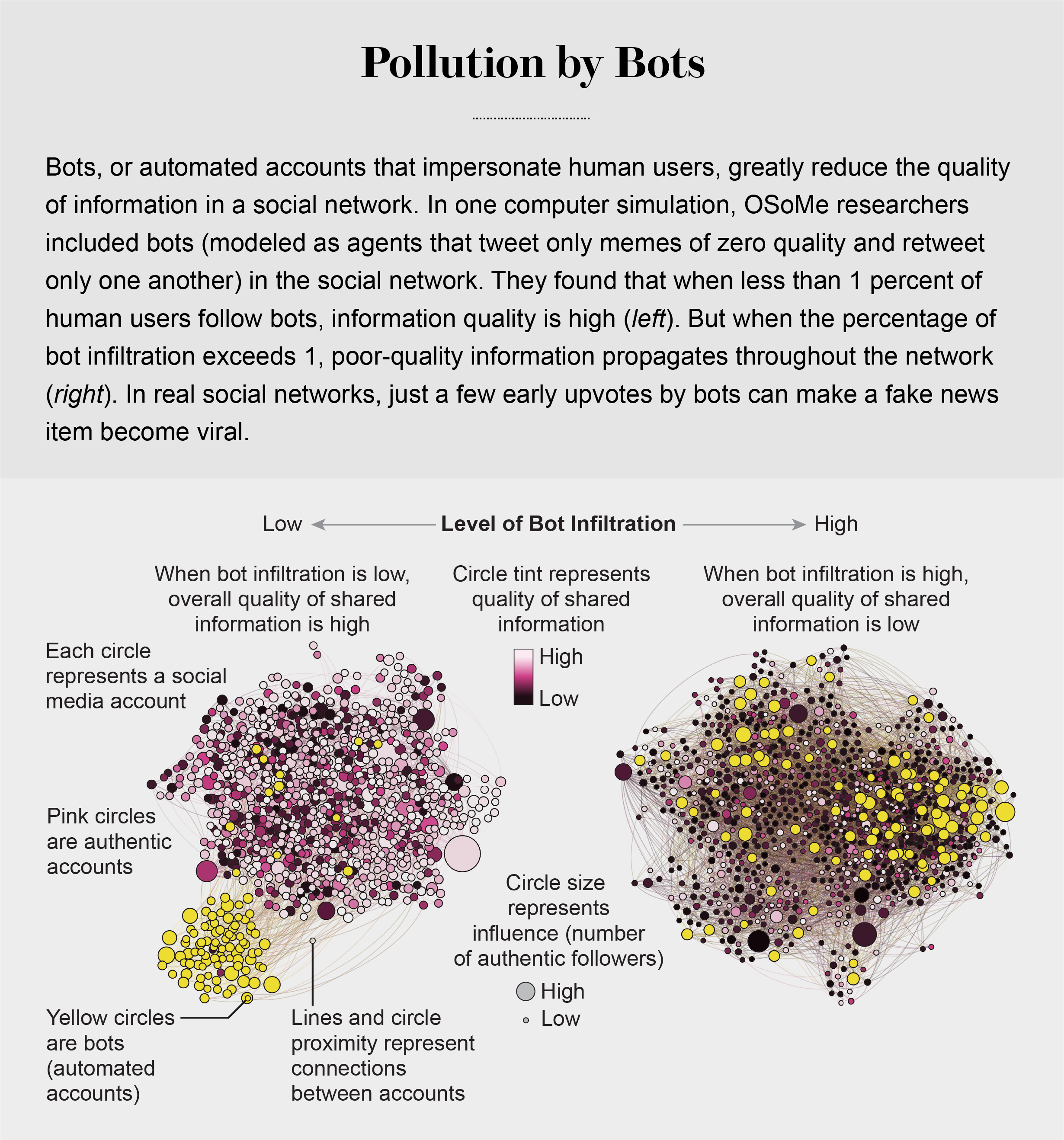

Pollution by bots

Social Herding

Social groups create a pressure toward conformity so powerful that it can overcome individual preferences, and by amplifying random early differences, it can cause segregated groups to diverge to extremes.

Social media follows a similar dynamic. We confuse popularity with quality and end up copying the behavior we observe.

Information is transmitted via “complex contagion”: when we are repeatedly exposed to an idea, typically from many sources, we are more likely to adopt and reshare it.
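The article contrasts complex contagion with the simpler case in which a single exposure is enough. A minimal sketch of a deterministic threshold model follows; the toy friendship graph and the threshold of two adopting neighbors are assumptions for illustration, not values from the article:

```python
# Minimal threshold model of complex contagion (illustrative only).
# Assumption: a user adopts and reshares an idea once at least
# THRESHOLD distinct connections have already adopted it.
THRESHOLD = 2  # hypothetical value, not taken from the article


def spread(graph: dict[str, set[str]], seeds: set[str], threshold: int = THRESHOLD) -> set[str]:
    """Return the set of users who eventually adopt, starting from `seeds`."""
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for user, neighbors in graph.items():
            if user in adopted:
                continue
            # Count distinct sources of exposure among this user's connections.
            exposures = len(neighbors & adopted)
            if exposures >= threshold:
                adopted.add(user)
                changed = True
    return adopted


# Hypothetical undirected network: a tight cluster (a-d) with one tie to e.
friends = {
    "a": {"b", "c", "d"},
    "b": {"a", "c", "d"},
    "c": {"a", "b", "d"},
    "d": {"a", "b", "c", "e"},
    "e": {"d"},
}

print(sorted(spread(friends, {"a", "b"})))  # ['a', 'b', 'c', 'd']
print(sorted(spread(friends, {"a"})))       # ['a']
```

With two seeds in the tight cluster, the idea saturates the cluster but never reaches `e`, which has only one tie into it; a single seed spreads nowhere. This mirrors how dense, chambered communities circulate ideas internally while staying insulated from outsiders.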

In addition to showing us items that conform with our views, social media platforms such as Facebook, Twitter, YouTube and Instagram place popular content at the top of our screens and show us how many people have liked and shared something. Few of us realize that these cues do not provide independent assessments of quality.

Programmers who design the algorithms for ranking memes on social media assume that the “wisdom of crowds” will quickly identify high-quality items; they use popularity as a proxy for quality. My note: again, ill-conceived folksonomy.
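To see why popularity is a poor proxy for quality, consider a deliberately extreme toy: each round, a user with limited attention sees only the top-ranked item and reshares it. The `Meme` fields, quality values and share counts below are hypothetical, and this is not the OSoMe model itself; it only shows how ranking by popularity amplifies a random early lead:

```python
from dataclasses import dataclass


@dataclass
class Meme:
    name: str
    quality: float  # hypothetical "true" quality in [0, 1]; never used for ranking
    shares: int     # the visible popularity cue


def rank_by_popularity(feed: list[Meme]) -> list[Meme]:
    # Popularity used as a proxy for quality: most-shared first.
    return sorted(feed, key=lambda m: m.shares, reverse=True)


def simulate(feed: list[Meme], rounds: int) -> list[Meme]:
    """Each round, a user sees only the top-ranked item and reshares it."""
    for _ in range(rounds):
        top = rank_by_popularity(feed)[0]
        top.shares += 1
    return rank_by_popularity(feed)


feed = [
    Meme("accurate report", quality=0.9, shares=3),
    Meme("dubious claim", quality=0.2, shares=5),  # small, random early lead
]
for meme in simulate(feed, rounds=10):
    print(meme.name, meme.shares)
# dubious claim 15
# accurate report 3
```

The quality field is never consulted, so the two-share head start alone decides the outcome.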

Echo Chambers

The political echo chambers on Twitter are so extreme that individual users’ political leanings can be predicted with high accuracy: you have the same opinions as the majority of your connections. This chambered structure efficiently spreads information within a community while insulating that community from other groups.

Socially shared information not only bolsters our biases but also becomes more resilient to correction.

Machine-learning algorithms to detect social bots. One of these, Botometer, is a public tool that extracts 1,200 features from a given Twitter account to characterize its profile, friends, social network structure, temporal activity patterns, language and other features. The program compares these characteristics with those of tens of thousands of previously identified bots to give the Twitter account a score for its likely use of automation.
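The idea of scoring an account by comparing it with previously identified bots can be sketched as a nearest-neighbor vote. This is not Botometer's code or API: the three features, the labeled examples and k = 3 are hypothetical stand-ins for its 1,200 features and tens of thousands of labeled accounts, and k-nearest neighbors is swapped in for brevity:

```python
import math

# Hypothetical, pre-normalized features per account (each in [0, 1]):
# (posting rate, share of retweets, account-age score). Not Botometer's features.
Features = tuple[float, float, float]

LABELED: list[tuple[Features, bool]] = [
    ((0.95, 0.90, 0.10), True),   # previously identified bot
    ((0.90, 0.85, 0.05), True),   # previously identified bot
    ((0.80, 0.95, 0.20), True),   # previously identified bot
    ((0.20, 0.30, 0.90), False),  # known human
    ((0.10, 0.20, 0.80), False),  # known human
    ((0.30, 0.40, 0.70), False),  # known human
]


def bot_score(account: Features, labeled=LABELED, k: int = 3) -> float:
    """Fraction of the k most similar labeled accounts that are bots (0 = human-like, 1 = bot-like)."""
    # Euclidean distance in feature space measures similarity to each labeled account.
    nearest = sorted(labeled, key=lambda item: math.dist(account, item[0]))[:k]
    return sum(is_bot for _, is_bot in nearest) / k


print(bot_score((0.85, 0.80, 0.15)))  # 1.0
print(bot_score((0.15, 0.25, 0.85)))  # 0.0
```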

Some manipulators play both sides of a divide through separate fake news sites and bots, driving political polarization or monetization by ads.

We recently uncovered a network of inauthentic accounts on Twitter that were all coordinated by the same entity. Some pretended to be pro-Trump supporters of the Make America Great Again campaign, whereas others posed as Trump “resisters”; all asked for political donations.

Fakey is a mobile app that helps users learn how to spot misinformation. The game simulates a social media news feed, showing actual articles from low- and high-credibility sources. Users must decide what they should or should not share and what to fact-check. Analysis of data from Fakey confirms the prevalence of online social herding: users are more likely to share low-credibility articles when they believe that many other people have shared them.

Another tool, Hoaxy, shows how any extant meme spreads through Twitter. In this visualization, nodes represent actual Twitter accounts, and links depict how retweets, quotes, mentions and replies propagate the meme from account to account.
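The network Hoaxy visualizes can be modeled as a directed graph whose nodes are accounts and whose labeled edges are interactions. A minimal sketch, assuming hypothetical account names and assuming each edge points in the direction the meme traveled (Hoaxy's own data model and API are not reproduced here):

```python
from collections import defaultdict

# Hypothetical propagation events: (from_account, to_account, kind).
# Assumption: each edge points the way the meme traveled, from the account
# that posted it to the account that picked it up.
EVENTS = [
    ("@origin", "@alice", "retweet"),
    ("@origin", "@bob", "quote"),
    ("@alice", "@carol", "retweet"),
    ("@alice", "@dave", "reply"),
    ("@bob", "@erin", "mention"),
]


def build_graph(events):
    """Adjacency map: account -> list of (neighbor, interaction kind)."""
    graph = defaultdict(list)
    for source, target, kind in events:
        graph[source].append((target, kind))
    return graph


def reach(graph, start: str) -> set[str]:
    """All accounts the meme reached downstream of `start` (depth-first traversal)."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for neighbor, _ in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return seen


g = build_graph(EVENTS)
print(sorted(reach(g, "@origin")))  # ['@alice', '@bob', '@carol', '@dave', '@erin']
print(sorted(reach(g, "@alice")))   # ['@carol', '@dave']
```

Traversing from any account gives its downstream audience, one simple way to see which accounts drive a meme's spread.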

Free communication is not free. By decreasing the cost of information, we have decreased its value and invited its adulteration.

Mary Nunaley

Karl Kapp, The Gamification of Learning and Instruction

Kevin Werbach and Dan Hunter, For the Win: How Game Thinking Can Revolutionize Your Business

Yu-Kai Chou, gamification design: Octalysis. https://www.gish.com/

8 core drives:

Meaning

Accomplishment

Empowerment

Ownership

Social Influence: social media, Instagram influencers

Scarcity: homework deadlines, coupons at the store

Unpredictability and Curiosity: scavenger hunts in courses (use with care when teaching)

Avoidance

motivation

+++++++++++++++

https://yukaichou.com/octalysis-tool/

+++++++++++++++

https://island.octalysisprime.com/

+++++++++++++

https://yukaichou.com/

+++++++++++++


More on gamification in this IMS blog

https://blog.stcloudstate.edu/ims?s=gamification

This is an excerpt from my 2018 book chapter: https://www.academia.edu/41628237/Chapter_12_VR_AR_and_Video_360_A_Case_Study_Towards_New_Realities_in_Education_by_Plamen_Miltenoff

Among a myriad of other definitions, Noor (2016) describes Virtual Reality (VR) as “a computer generated environment that can simulate physical presence in places in the real world or imagined worlds. The user wears a headset and through specialized software and sensors is immersed in 360-degree views of simulated worlds” (p. 34).

Noor, A. (2016). The Hololens Revolution. Mechanical Engineering, 138(10), 30-35.

Weiss and colleagues (2004) wrote that “Virtual reality typically refers to the use of interactive simulations created with computer hardware and software to present users with opportunities to engage in environments that appear to be and feel similar to real-world objects and events.”

Weiss, P. L., Rand, D., Katz, N., & Kizony, R. (2004). Video capture virtual reality as a flexible and effective rehabilitation tool. Journal of NeuroEngineering and Rehabilitation, 1(1), 12. https://doi.org/10.1186/1743-0003-1-12

Henderson defined virtual reality as a “computer based, interactive, multisensory environment that occurs in real time.”

Rubin, 2018, p. 28. Virtual reality is an 1. artificial environment that’s 2. immersive enough to convince you that you are 3. actually inside it.

“Artificial environment” could mean just about anything. A photograph is an artificial environment, a video game is an artificial environment, a Pixar movie is an artificial environment; the only thing that matters is that it’s not where you physically are. p. 46: “VR is potentially going to become a direct interface to the subconscious.”

From: https://blog.stcloudstate.edu/ims/2018/11/07/can-xr-help-students-learn/

p. 10: “there is not universal agreement on the definitions of these terms or on the scope of these technologies. Also, all of these technologies currently exist in an active marketplace and, as in many rapidly changing markets, there is a tendency for companies to invent neologisms around 3D technology.” p. 11: Virtual reality means that the wearer is completely immersed in a computer simulation.

From: https://blog.stcloudstate.edu/ims/2018/11/07/can-xr-help-students-learn/

There is no necessary distinction between AR and VR; indeed, much research on the subject is based on a conception of a “virtuality continuum” from entirely real to entirely virtual, where AR lies somewhere between those ends of the spectrum. Paul Milgram and Fumio Kishino, “A Taxonomy of Mixed Reality Visual Displays,” IEICE Transactions on Information Systems, vol. E77-D, no. 12 (1994); Steve Mann, “Through the Glass, Lightly,” IEEE Technology and Society Magazine 31, no. 3 (2012): 10–14.

++++++++++++++++++++++

Among a myriad of other definitions, Noor (2016) describes Virtual Reality (VR) as “a computer generated environment that can simulate physical presence in places in the real world or imagined worlds. The user wears a headset and through specialized software and sensors is immersed in 360-degree views of simulated worlds” (p. 34). Weiss and colleagues (2004) wrote that “Virtual reality typically refers to the use of interactive simulations created with computer hardware and software to present users with opportunities to engage in environments that appear to be and feel similar to real-world objects and events.”

Rubin (2018) takes a rather broad approach, ascribing three characteristics to VR: it is (1) an artificial environment that is (2) immersive enough to convince you that you are (3) actually inside it (p. 28). He further asserts that “VR is potentially going to become a direct interface to the subconscious” (p. 46).

Most importantly, as Pomerantz (2018) asserts, “there is not universal agreement on the definitions of these terms or on the scope of these technologies. Also, all of these technologies currently exist in an active marketplace and, as in many rapidly changing markets, there is a tendency for companies to invent neologisms.” (p. 10)

Noor, A. (2016). The Hololens Revolution. Mechanical Engineering, 138(10), 30-35.

Pomerantz, J. (2018). Learning in Three Dimensions: Report on the EDUCAUSE/HP Campus of the Future Project (Louisville, CO; ECAR Research Report, p. 57). https://library.educause.edu/~/media/files/library/2018/8/ers1805.pdf

Rubin, P. (2018). Future Presence: How Virtual Reality Is Changing Human Connection, Intimacy, and the Limits of Ordinary Life (Illustrated edition). HarperOne.

Weiss, P. L., Rand, D., Katz, N., & Kizony, R. (2004). Video capture virtual reality as a flexible and effective rehabilitation tool. Journal of NeuroEngineering and Rehabilitation, 1(1), 12. https://doi.org/10.1186/1743-0003-1-12

https://www.igi-global.com/gateway/book/244559

Chapters:

Holland, B. (2020). Emerging Technology and Today’s Libraries. In B. Holland (Ed.), Emerging Trends and Impacts of the Internet of Things in Libraries (pp. 1-33). IGI Global. https://doi.org/10.4018/978-1-7998-4742-7.ch001

The purpose of this chapter is to examine emerging technology and today’s libraries. New technologies stand out first and foremost, given that they will end up revolutionizing every industry in an age where digital transformation plays a major role. Major trends will define technological disruption. The next generation of communication, core computing, and integration technologies will adopt new architectures. Major technological, economic, and environmental changes have generated interest in smart cities. Sensing technologies have made IoT possible, but they also provide the data required for AI algorithms and models, often in real time, to make intelligent business and operational decisions. Smart cities use different types of electronic Internet of Things (IoT) sensors to collect data and then use these data to manage assets and resources efficiently. This includes data collected from citizens, devices, and assets that are processed and analyzed to monitor and manage schools, libraries, hospitals, and other community services.

Modest3D Guided Virtual Adventure – iLRN Conference 2020 – Session 1 (live session): https://youtu.be/GjxTPOFSGEM

https://mediaspace.minnstate.edu/media/Modest+3D/1_28ejh60g

Instruction and Instructional Design

Presentation 1: Inspiring Faculty (+ Students) with Tales of Immersive Tech (Practitioner Presentation #106)

Authors: Nicholas Smerker

Immersive technologies – 360º video, virtual and augmented realities – are being discussed in many corners of higher education. For an instructor who is familiar with the terms, at least in passing, learning more about why they and their students should care can be challenging, at best. In order to create a font of inspiration, the IMEX Lab team within Teaching and Learning with Technology at Penn State devised its Get Inspired web resource. Building on a similar repository for making technology stories at the sister Maker Commons website, the IMEX Lab Get Inspired landing page invites faculty to discover real-world examples of how cutting-edge XR tools are being used every day. In addition to very approachable video content and a short summary calling out why our team chose the story, there are also instructional designer-developed Assignment Ideas that allow for quick deployment of exercises related to – though not always relying upon – the technologies highlighted in a given Get Inspired story.

Presentation 2: Lessons Learned from Over A Decade of Designing and Teaching Immersive VR in Higher Education Online Courses (Practitioner Presentation #101)

Authors: Eileen Oconnor

This presentation overviews the design and instruction in immersive virtual reality environments created by the author, beginning with Second Life and progressing to open-source venues. It will highlight the diversity of the VR environments developed, the challenges that were overcome, and the accomplishments of students who created their own VR environments for K12, college and corporate settings. The instruction and design materials created to enable this 100% online master’s program accomplishment will be shared; an institute launched in 2018 for emerging technology study will be noted.

Presentation 3: Virtual Reality Student Teaching Experience: A Live, Remote Option for Learning Teaching Skills During Campus Closure and Social Distancing (Practitioner Presentation #110)

Authors: Becky Lane, Christine Havens-Hafer, Catherine Fiore, Brianna Mutsindashyaka and Lauren Suna

Summary: During the Coronavirus pandemic, Ithaca College teacher education majors needed a classroom of students in order to practice teaching and receive feedback, but the campus was closed, and gatherings forbidden. Students were unable to participate in live practice teaching required for their program. We developed a virtual reality pilot project to allow students to experiment in two third-party social VR programs, AltSpaceVR and Rumii. Social VR platforms allow a live, embodied experience that mimics in-person events to give students a more realistic, robust and synchronous teaching practice opportunity. We documented the process and lessons learned to inform, develop and scale next generation efforts.

Target audience sector: Informal and/or lifelong learning

Supported devices: Desktop/laptop – Windows, Desktop/laptop – Mac

Platform/environment access: Download from a website and install on a desktop/laptop computer

Official website: http://www.secondlife.com

+++++++++++++++++++

Presentation 1: Evaluating the impact of multimodal Collaborative Virtual Environments on user’s spatial knowledge and experience of gamified educational tasks (Full Paper #91)

Authors: Ioannis Doumanis and Daphne Economou

Several research projects in spatial cognition have suggested Virtual Environments (VEs) as an effective way of facilitating mental map development of a physical space. In the study reported in this paper, we evaluated the effectiveness of multimodal real-time interaction in distilling understanding of the VE after completing gamified educational tasks. We also measured the impact of these design elements on the user’s experience of educational tasks. The VE used resembles an art gallery, and it was built using REVERIE (Real and Virtual Engagement In Realistic Immersive Environment), a framework designed to enable multimodal communication on the Web. We compared the impact of REVERIE VG with an educational platform called Edu-Simulation for the same gamified educational tasks. We found that the multimodal VE had no impact on the ability of students to retain a mental model of the virtual space. However, we also found that students thought that it was easier to build a mental map of the virtual space in REVERIE VG. This means that using a multimodal CVE in a gamified educational experience does not benefit spatial performance, but it does not cause distraction either. The paper ends with conclusions, future work, and suggestions for improving mental map construction and user experience in multimodal CVEs.

Presentation 2: A case study on student’s perception of the virtual game supported collaborative learning (Full Paper #42)

Authors: Xiuli Huang, Juhou He and Hongyan Wang

The English education course in China aims to help students establish the English skills to enhance their international competitiveness. However, in traditional English classes, students often lack the linguistic environment to apply the English skills they learned in their textbook. Virtual reality (VR) technology can set up an immersive English language environment and then encourage learners to use English by presenting different collaborative communication tasks. In this paper, spherical video-based virtual reality technology was applied to build a linguistic environment, and a collaborative learning strategy was adopted to promote students’ communication. Additionally, a mixed-methods research approach was used to analyze students’ achievement in a traditional classroom and in a virtual reality supported collaborative classroom, as well as their perceptions of the two approaches. The experimental results revealed that the virtual reality supported collaborative classroom was able to enhance the students’ achievement. Moreover, by analyzing the interviews, the authors report students’ attitudes toward the virtual reality supported collaborative class and describe their use of language learning strategies in it. These findings could be valuable references for those who intend to create opportunities for students to collaborate and communicate in the target language in their classroom and then improve their language skills.

Presentation 1: Reducing Cognitive Load through the Worked Example Effect within a Serious Game Environment (Full Paper #19)

Authors: Bernadette Spieler, Naomi Pfaff and Wolfgang Slany

Novices often struggle to represent problems mentally; the unfamiliar process can exhaust their cognitive resources, creating frustration that deters them from learning. By improving novices’ mental representation of problems, worked examples improve both problem-solving skills and transfer performance. Programming requires both skills. In programming, it is not sufficient to simply understand how Stack Overflow examples work; programmers have to be able to adapt the principles and apply them to their own programs. This paper shows evidence in support of the theory that worked examples are the most efficient mode of instruction for novices. In the present study, 42 students were asked to solve the tutorial The Magic Word, a game especially for girls created with the Catrobat programming environment. While the experimental group was presented with a series of worked examples of code, the control groups were instructed through theoretical text examples. The final task was a transfer question. While the average score was not significantly better in the worked example condition, the fact that participants in this experimental group finished significantly faster than the control group suggests that their overall performance was better than that of their counterparts.

Presentation 2: A literature review of e-government services with gamification elements (Full Paper #56)

Authors: Ruth S. Contreras-Espinosa and Alejandro Blanco-M

Nowadays several democracies are facing the growing problem of a breakdown in communication between their citizens and their political representatives, resulting in low citizen engagement in political decision making and public consultations. Therefore, it is fundamental to build a constructive relationship between public administration and citizens by addressing citizens’ needs. This document contains a literature review of gamification and e-government services. It provides background on those concepts and presents a selection and analysis of the different applications found. Three lines of research gaps are identified, with potential impact on future studies.

Presentation 1: Connecting User Experience to Learning in an Evaluation of an Immersive, Interactive, Multimodal Augmented Reality Virtual Diorama in a Natural History Museum & the Importance of Story (Full Paper #51)

Authors: Maria Harrington

Reported are the findings of user experience and learning outcomes from a July 2019 study of an immersive, interactive, multimodal augmented reality (AR) application, used in the context of a museum. The AR Perpetual Garden App is unique in creating an immersive multisensory experience of data. It allowed scientifically naïve visitors to walk into a virtual diorama constructed as a data visualization of a springtime woodland understory, and interact with multimodal information directly through their senses. The user interface consisted of two different AR data visualization scenarios reinforced with data-based ambient bioacoustics, an audio story of the curator’s narrative, and interactive access to plant facts. While actual learning and dwell times were the same between the AR app and the control condition, the AR experience received higher ratings on perceived learning. The AR interface design features of “Story” and “Plant Info” showed significant correlations with actual learning outcomes, while “Ease of Use” and “3D Plants” showed significant correlations with perceived learning. As such, designers and developers of AR apps can generalize these findings to inform future designs.

Presentation 2: The Naturalist’s Workshop: Virtual Reality Interaction with a Natural Science Educational Collection (Short Paper #11)

Authors: Colin Patrick Keenan, Cynthia Lincoln, Adam Rogers, Victoria Gerson, Jack Wingo, Mikhael Vasquez-Kool and Richard L. Blanton

For experiential educators who utilize or maintain physical collections, The Naturalist’s Workshop is an exemplar virtual reality platform to interact with digitized collections in an intuitive and playful way. The Naturalist’s Workshop is a purpose-developed application for the Oculus Quest standalone virtual reality headset for use by museum visitors on the floor of the North Carolina Museum of Natural Sciences under the supervision of a volunteer attendant. Within the application, museum visitors are seated at a virtual desk. Using their hand controllers and head-mounted display, they explore drawers containing botanical specimens and tools-of-the-trade of a naturalist. While exploring, the participant can receive new information about any specimen by dropping it into a virtual examination tray. 360-degree photography and three-dimensionally scanned specimens are used to allow user-motivated, immersive experience of botanical meta-data such as specimen collection coordinates.

Presentation 3: 360˚ Videos: Entry level Immersive Media for Libraries and Education (Practitioner Presentation #132)

Authors: Diane Michaud

Within the continuum of XR Technologies, 360˚ videos are relatively easy to produce and need only an inexpensive mobile VR viewer to provide a sense of immersion. 360˚ videos present an opportunity to reveal “behind the scenes” spaces that are normally inaccessible to users of academic libraries. This can promote engagement with unique special collections and specific library services. In December 2019, with little previous experience, I led the production of a short 360˚ video tour, a walk-through of our institution’s archives. This was a first attempt; there are plans to transform it into a more interactive, user-driven exploration. The beta version successfully generated interest, but the enhanced version will also help prepare uninitiated users for the process of examining unique archival documents and artefacts. This presentation will cover the lessons learned, and what we would do differently for our next immersive video production. Additionally, I will propose that the medium of 360˚ video is ideal for many institutions’ current or recent predicament with campuses shut down due to the COVID-19 pandemic. Online or immersive 360˚ video can be used for virtual tours of libraries and/or other campus spaces. Virtual tours would retain their value beyond current campus shutdowns as there will always be prospective students and families who cannot easily make a trip to campus. These virtual tours would provide a welcome alternative as they eliminate the financial burden of travel and can be taken at any time.

++++++++++++

More about Educators in VR in this IMS blog

https://blog.stcloudstate.edu/ims?s=educators+in+vr

IM 690 lab plan for March 3, MC 205: Oculus Go and Quest

Readings:

Lab work (continued):

Review from last week:

How to shoot and edit 360 videos: Ben Claremont

https://www.youtube.com/channel/UCAjSHLRJcDfhDSu7WRpOu-w

and

https://www.youtube.com/channel/UCUFJyy31hGam1uPZMqcjL_A

Climbing

Racketball

Practice interactivity (space station)

Interactivity: communication and working collaboratively with Altspace VR

setting up your avatar

joining a space and collaborating and communicating with other users

+++++++++++++

Enhance your XR instructional Design with other tools: https://blog.stcloudstate.edu/ims/2020/02/07/crs-loop/

https://learn.framevr.io/ (free learning of frame)

https://sketchfab.com/ (WebXR technology)

https://mixedreality.mozilla.org/hello-webxr/

https://studio.gometa.io/landing

+++++++++++

Plamen Miltenoff, Ph.D., MLIS

Professor

320-308-3072

pmiltenoff@stcloudstate.edu

http://web.stcloudstate.edu/pmiltenoff/faculty/

schedule a meeting: https://doodle.com/digitalliteracy

find my office: https://youtu.be/QAng6b_FJqs

Info on all presentations: https://account.altvr.com/channels/1182698623012438188

++++++++++++++++++++++++++++

Qlone App for 3D scanning

++++++++++++++++++++++++++++++++++++++

The 2020 Educators in VR International Summit is February 17-22. It features over 170 speakers in 150+ events across multiple social and educational platforms including AltspaceVR, ENGAGE, rumii, Mozilla Hubs, and Somnium Space.

The event requires no registration, and is virtual only, free, and open to the public. Platform access is required, so please install one of the above platforms to attend the International Summit. You may attend in 2D on a desktop or laptop computer with a headset and microphone (USB gaming headset recommended), or with a VR device such as the Oculus Go, Quest, or Rift, the HTC Vive, and other mobile and tethered devices. Please note the specifications and requirements of each platform.

The majority of our events are on AltspaceVR. AltspaceVR is available for Samsung Gear VR, the Steam Store for HTC Vive, Windows Mixed Reality, and the Oculus Store for Rift, Go and Quest users. Download and install the 2D version for use on your Windows desktop computer.

Charlie Fink, author, columnist for Forbes magazine, and Adjunct Faculty member of Chapman University, will be presenting “Setting the Table for the Next Decade in XR,” discussing the future of this innovative and immersive technology, at the 2020 Educators in VR International Summit. He will be speaking in AltspaceVR on Tuesday, February 18 at 1:00 PM EST /

Setting the Table for the Next Decade in XR, 1 PM, Tues, Feb 18: https://account.altvr.com/events/1406089727517393133

Finding a New Literacy for a New Reality, 5 PM, Tues, Feb 18

Schedule for the new literacy workshop: https://account.altvr.com/events/1406093036194103494

This workshop with Dr. Sarah Jones will focus on developing a relevant and new literacy for virtual reality, including the core competencies and skills needed to develop and understand how to become an engaged user of the technology in a meaningful way. The workshop will develop into research for a forthcoming book on Uncovering a Literacy for VR due to be published in 2020.

Sarah is listed as one of the top 15 global influencers within virtual reality. After nearly a decade in television news, Sarah began working in universities focusing on future media, future technology and future education. Sarah holds a PhD in Immersive Storytelling and has published extensively on virtual and augmented reality, whilst continuing to make and create immersive experiences. She has advised the UK Government on Immersive Technologies and delivers keynotes and speaks at conferences across the world on imagining future technology. Sarah is committed to diversifying the media and technology industries and regularly champions initiatives to support this agenda.

Currently there are limited ways to connect 3D VR environments to physical objects in the real world whilst simultaneously supporting communication and collaboration between remote users. Within the context of a solar power plant, the performance metrics of the site are invaluable for environmental engineers who are remotely located. Often two or more remotely located engineers need to communicate and collaborate on solving a problem. If a solar panel component is damaged, the repair often needs to be undertaken on-site, thereby incurring additional expenses. This kind of communication can be classified as inter-cognitive or intra-cognitive: inter-cognitive communication is information transfer between two cognitive entities with different cognitive capabilities (e.g., between a human and an artificially cognitive system); intra-cognitive communication is information transfer between two cognitive entities with equivalent cognitive capabilities (e.g., between two humans) [Baranyi and Csapo, 2010]. Currently, non-VR solutions offer a comprehensive analysis of solar plant data, and a regular PC with a monitor currently has advantages over 3D VR. For example, sensors can be monitored using dedicated software such as EPEVER or via a web browser, as exemplified by the comprehensive service provided by Elseta. But when multiple users are able to collaborate remotely within a three-dimensional virtual simulation, the opportunities for communication, training and academic education will be profound.

Michael Vallance Ed.D. is a researcher in the Department of Media Architecture, Future University Hakodate, Japan. He has been involved in educational technology design, implementation, research and consultancy for over twenty years, working closely with higher education institutions, schools and media companies in the UK, Singapore, Malaysia and Japan. His 3D virtual world design and tele-robotics research has been recognized and funded by the UK Prime Minister’s Initiative (PMI2) and the Japan Advanced Institute of Science and Technology (JAIST). He has received an award from the United States Army for his research on collaborative programming of robots in a 3D virtual world.

Create Strategic Snapchat & Instagram AR Campaigns

Augmented reality (AR) Lenses, a Snapchat invention, are popular among young people. Businesses lose money when they do not make full use of social media to target young people (ages 14-25). In this presentation, Dominique Wu will show how businesses can generate more leads through Spark AR (Facebook AR/Instagram AR) and Snapchat AR Lenses, and how to create strategic Snapchat and Instagram AR campaigns.

Dominique Wu is an XR social media strategist and expert in UX/UI design. She has her own YouTube and Apple Podcast show called “XReality: Digital Transformation,” covering the technology and techniques of incorporating XR and AR into social media, marketing, and integration into enterprise solutions.

Mixed Reality in Classrooms Near You

Mixed Reality devices like the HoloLens are transforming education now. Mark Christian will discuss how the technology is no longer about edge use cases or proofs of concept (POCs) but about real, usable products that are transforming the way we teach and learn at universities. Christian will talk about the products of GIGXR, the story of how they were developed and what the research says about their efficacy. It is time to move toward adoption of XR technology in education. Learn how one team has made this a reality.

As CEO of forward-thinking virtual reality and software companies, Mark Christian employs asymmetric approaches to rapid, global market adoption, hiring, diversity and revenue. He prides himself on unconventional approaches to building technology companies.

Designing Educational Content in VR

Virtual Reality is an effective educational medium only if it is done right. Whether VR is seen as a gimmick depends on how developers design and build the software, not on hardware limitations. I will give insight into VR development for educational content designed specifically for lower secondary school students. I will also provide insights into game development in the Unity3D game engine.

Avinash Gyawali is a game and VR developer with over three years of experience in game development. He is the developer of Zombie Shooter, winner of various national awards in the gaming and entertainment category, and of EDVR, an immersive voice-controlled VR experience designed specifically for children aged 10-18.

| 8:00 AM PST | Research | Virtual Reality Technologies for Learning Designers | Margherita Berti | ASVR |

Virtual Reality (VR) is a computer-generated experience that simulates presence in real or imagined environments (Van Kerrebroeck, Brengman, & Willems, 2017). VR promotes contextualized learning, authentic experiences, critical thinking, and problem-solving opportunities. Despite the great potential and popularity of this technology, the two latest editions of the Educause Horizon Report (2018, 2019) have argued that VR remains “elusive” in terms of mainstream adoption. The reasons vary, including the expense and the lack of empirical evidence for its effectiveness in education. More importantly, examples of successful VR implementations for instructors who lack technical skills are still scarce. Margherita Berti will discuss a range of easy-to-use educational VR tools, examples of VR-based activities, and the learning theories and instructional design principles used to develop them.

Margherita Berti is a doctoral candidate in Second Language Acquisition and Teaching (SLAT) and Educational Technology at the University of Arizona. Her research focuses on the intersection of virtual reality, the teaching of culture, and curriculum and content development for foreign language education.

| Wed | 11:00 AM PST | Special Event | Gamifying the Biblioverse with Metaverse | Amanda Fox | VR Design / Biblioverse / Training & Embodiment | ASVR |

There is a barrier between authors and the readers of their books. The author’s journey ends, and the reader’s begins. But what if, as an author or trainer, you could use gamification and augmented reality (AR) to interact with and coach your readers as part of their learning journey? Attend this session with Amanda Fox to learn how the book Teachingland leverages augmented reality tools such as Metaverse to connect with readers beyond the text.

Amanda Fox is Creative Director of STEAMPunksEdu and author of Teachingland: A Teacher’s Survival Guide to the Classroom Apocalypse and Zom-Be A Design Thinker. Check her out on the Virtual Reality Podcast, or connect with her on Twitter @AmandaFoxSTEM.

| Wed | 10:00 AM PST | Research | Didactic Activity of the Use of VR and Virtual Worlds to Teach Design Fundamentals | Christian Jonathan Angel Rueda | VR Design / Biblioverse / Training & Embodiment | ASVR |

Christian Jonathan Angel Rueda specializes in didactic activities that use virtual reality and virtual worlds to teach the fundamentals of design. He shares the development of a course in which students recreate, in a three-dimensional environment, the fundamentals learned in class; creatively demonstrate all the work developed throughout the semester using their knowledge of design foundations; and present a final project in a class scenario that connects the scenes in which students showed their work throughout the semester.

Christian Jonathan Angel Rueda is a research professor at the Autonomous University of Queretaro in Mexico. With a PhD in educational technology, Christian has published several papers on the intersection of education, pedagogy, and three-dimensional immersive digital environments. He is also an edtech, virtual reality, and social media consultant at Eco Onis.

| Thu | 11:00 AM PST | vCoaching | Closing the Gap Between eLearning and XR | Richard Van Tilborg | XR eLearning / Laughter Medicine | ASVR |

How can we bridge the gap between eLearning and XR? Richard Van Tilborg discusses combining insights into the brain with new technologies. He presents training and education cases realised with the CoVince platform: journeys that start on your mobile phone and continue in VR. He also covers the possibilities of earning from your creations and of having a central distribution place for learning and data.

Richard Van Tilborg works with the CoVince platform, a VR platform offering training and educational programs for central distribution of learning and data. He is an author and speaker focusing on computers and education, particularly virtual reality-based tasks for delivering feedback.

| Thu | 12:00 PM PST | Research | Assessment of Learning Activities in VR | Evelien Ydo | Technology Acceptance / Learning Assessment / Vaping Prevention | ASVR |

| Thu | 6:00 PM PST | Down to Basics | Copyright and Plagiarism Protections in VR | Jonathan Bailey | ASVR |

| Thu | 8:00 PM PST | Diversity | Cyberbullying in VR | John Williams, Brennan Hatton, Lorelle VanFossen | ASVR |

IM 690 lab plan for Feb. 18, MC 205: Experience VR and AR

What is an “avatar” and why do we need to know how it works?

How does the book (and the movie) “Ready Player One” project the education of the future?

Peter Rubin’s “Future Presence” pictures XR beyond education. How would such changes in society and in our behavior influence education?

Readings:

Each group selected one article from this selection to discuss an instructional designer’s approach to XR: https://blog.stcloudstate.edu/ims/2020/02/11/immersive-reality-and-instructional-design/

Announcements:

https://blog.stcloudstate.edu/ims/2020/02/07/educators-in-vr/

https://blog.stcloudstate.edu/ims/2020/01/30/realities360-conference/


Inter-cognitive and Intra-cognitive communication in VR: https://slides.com/michaelvallance/deck-25c189#/

@EducatorsVR

I’ll be talking about Didactic Activity of the Use of #VR and #VirtualWorlds to Teach Design Fundamentals. I will show the work of my students in @SansarOfficial Join the #EducatorsinVR International Summit the present day at 10:00 AM PST https://t.co/nLV6orr19i pic.twitter.com/yFQomkD7ER

Dr. Christian J. Angel Rueda (@eco_onis), February 19, 2020

https://www.youtube.com/channel/UCGHRSovY-KvlbJHkYnIC-aA

People with dementia

Free resources:

https://blog.stcloudstate.edu/ims?s=free+audio, free sound, free multimedia

Lab work:

– how does this particular technology fit in the instructional design (ID) frames and theories covered so far?

– which models and ideas from the videos you will see could you replicate yourself?

Assignment: Use Google Cardboard to watch at least three of the following options:

YouTube:

Elephants (think about how it can be used for education)

https://youtu.be/2bpICIClAIg

Sharks (think about how it can be used for education)

https://youtu.be/aQd41nbQM-U

Solar system

https://youtu.be/0ytyMKa8aps

Dementia

https://youtu.be/R-Rcbj_qR4g

Facebook

https://www.facebook.com/EgyptVR/photos/a.1185857428100641/1185856994767351/

From Peter Rubin’s Future Presence (if you want to learn more about the book, see https://blog.stcloudstate.edu/ims/2019/03/25/peter-rubin-future-presence/):

Empathy, Chris Milk, https://youtu.be/iXHil1TPxvA

Clouds Over Sidra, https://youtu.be/mUosdCQsMkM

Assignment: In 10-15 min (mind your peers, since we have only one headset), do your best to evaluate one educational app (e.g., Labster) and one leisure app (a game).

Use the same questions to evaluate the Lenovo Daydream headset:

– Does this particular technology fit in the instructional design (ID) frames and theories covered, e.g. PBL, CBL, Activity Theory, ADDIE Model, TIM etc. (https://blog.stcloudstate.edu/ims/2020/01/29/im-690-id-theory-and-practice/)? Can you connect both the current state and the potential of this technology with any of these frameworks and theories? For example, how would Google Tour Creator or any of these videos fit in the Analysis – Design – Development – Implementation – Evaluation process? Or, how do you envision your Google Tour Creator project or any of these videos fitting in the Entry – Adoption – Adaptation – Infusion – Transformation process?

– how does this particular technology fit in the instructional design (ID) frames and theories covered so far?

– which models and ideas from the videos you will see could you replicate yourself?

+++++++++++

Plamen Miltenoff, Ph.D., MLIS

Professor

320-308-3072

pmiltenoff@stcloudstate.edu

http://web.stcloudstate.edu/pmiltenoff/faculty/

schedule a meeting: https://doodle.com/digitalliteracy

find my office: https://youtu.be/QAng6b_FJqs