Searching for "track model"

https://www.facebook.com/groups/elearngdeveloping/permalink/10164385188890542/

How have you experienced the teachers' move from face-to-face to online learning?

What is the biggest challenge created by the transition?

How have you managed the challenge?

What are the opportunities for instructors regarding the transition to online learning?

What have you done to ensure a smooth transition?

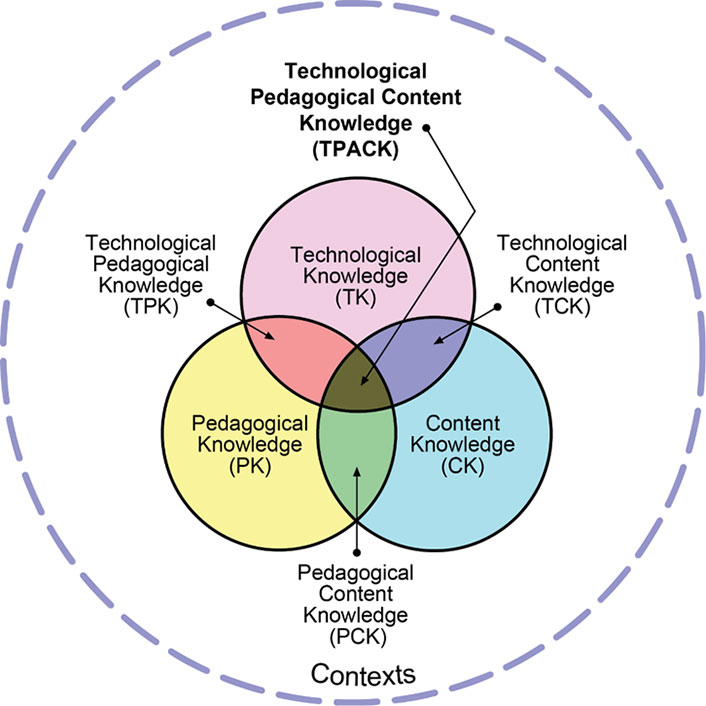

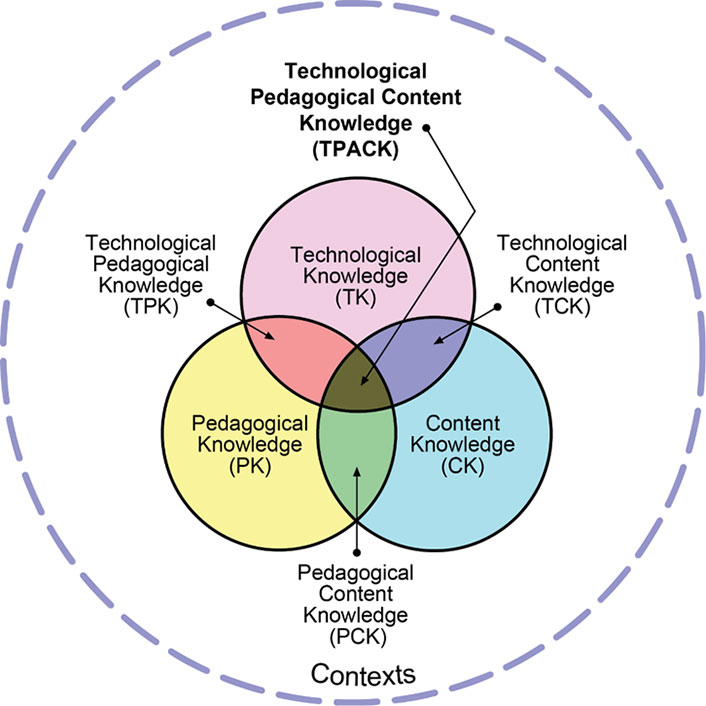

As previously mentioned, the core of my argument centres on the TPACK model. Based on TPACK, I propose that the move to online learning must be supported by the instatement of a Professional Development Program.

+++++++++++++++++

More on TRACK model and SAMR in this blog

https://blog.stcloudstate.edu/ims?s=track+model

Software can spy on what you type in video calls by tracking your arms

https://www.newscientist.com/article/2258682-software-can-spy-on-what-you-type-in-video-calls-by-tracking-your-arms/

A computer model can work out the words that the person is typing just by tracking the movement of their shoulders and arms in the video stream.

+++++++++++++++++++

more on privacy in this IMS blog

https://blog.stcloudstate.edu/ims?s=privacy

More on surveillance in this IMS blog

https://blog.stcloudstate.edu/ims?s=surveillance

https://www.edsurge.com/news/2018-10-18-what-the-samr-model-may-be-missing

Developed by Dr. Ruben Puentedura, the SAMR Model aims to guide teachers in integrating technology into their classrooms. It consists of four steps: Substitution (S), Augmentation (A), Modification (M), and Redefinition (R).

The problem with many personalized learning tools is that they live mostly in the realm of Substitution or Augmentation tasks.

It’s in moments like these that we see the SAMR model, while laying an excellent foundation, isn’t enough. When considering which technologies to incorporate into my teaching, I like to consider four key questions, each of which builds upon the strong foundation that SAMR provides.

1. Does the technology help to minimize complexity?

2. Does the technology help to maximize the individual power and potential of all learners in the room?

I use Popplet and iCardSort regularly in my classroom—flexible tools that allow my students to demonstrate their thinking through concept mapping and sorting words and ideas.

3. Will the technology help us to do something previously unimaginable?

4. Will the technology preserve or enhance human connection in the classroom?

Social media is a modern-day breakthrough in human connection and communication. While there are clear consequences to social media culture, there are clear upsides as well. Seesaw, a platform for student-driven digital portfolios, is an excellent example of a tool that enhances human connection.

++++++++++++++++++++++

more on SAMR and TRACK models in this IMS blog

https://blog.stcloudstate.edu/ims/2018/05/17/transform-education-digital-tools/

more on personalized learning in this IMS blog

https://blog.stcloudstate.edu/ims?s=personalized+learning

The Role of Librarians in Supporting ICT Literacy

Lesley Farmer, May 9, 2019

https://er.educause.edu/blogs/2019/5/the-role-of-librarians-in-supporting-ict-literacy

Academic librarians increasingly provide guidance to faculty and students for the integration of digital information into the learning experience.

TPACK: Technological Pedagogical Content Knowledge

Many librarians have shied away from ICT literacy, concerned that they may be asked how to format a digital document or show students how to create a formula in a spreadsheet. These technical skills focus more on a specific tool than on the underlying nature of information.

librarians have begun to use an embedded model as a way to deepen their connection with instructors and offer more systematic collection development and instruction. That is, librarians focus more on their partnerships with course instructors than on a separate library entity.

If TPACK is applied to instruction within a course, theoretically several people could be contributing this knowledge to the course. A good exercise is for librarians to map their knowledge onto TPACK.

ICT reflects the learner side of a course. However, ICT literacy can be difficult to integrate because it does not constitute a core element of any academic domain. Whereas many academic disciplines deal with key resources in their field, such as vocabulary, critical thinking, and research methodologies, they tend not to address issues of information seeking or collaboration strategies, let alone technological tools for organizing and managing information.

Instructional design for online education provides an optimal opportunity for librarians to fully collaborate with instructors.

The outcomes can include identifying the level of ICT literacy needed to achieve those learning outcomes, a task that typically requires collaboration between the librarian and the program’s faculty member. Librarians can also help faculty identify appropriate resources that students need to build their knowledge and skills. As education administrators encourage faculty to use open educational resources (OERs) to save students money, librarians can facilitate locating and evaluating relevant resources. These OERs not only include digital textbooks but also learning objects such as simulations, case studies, tutorials, and videos.

Reading online text differs from reading print both physically and cognitively. For example, students scroll down rather than turn online pages. And online text often includes hyperlinks, which can lead to deeper coverage—as well as distraction or loss of continuity of thought. Also, most online text does not allow for marginalia that can help students reflect on the content. Teachers and students often do not realize that these differences can impact learning and retention. To address this issue, librarians can suggest resources to include in the course that provide guidance on reading online.

My note – why specialists like Tom Hergert and the entire IMS are crucial for the SCSU library and librarians, and how neglecting the IMS role hurts the SCSU library –

Similarly, other types of media need to be evaluated, comprehended, and interpreted in light of their critical features or “grammar.” For example, camera angles can suggest a person’s status (as in looking up to someone), music can set the metaphorical tone of a movie, and color choices can be associated with specific genres (e.g., pastels for romances or children’s literature, dark hues for thrillers). Librarians can explain these media literacy concepts to students (and even faculty) or at least suggest including resources that describe these features

My note – on years-long repetition of the disconnect between SCSU ATT, SCSU library and IMS –

instructors need to make sure that students have the technical skills to produce these products. Although librarians might understand how media impacts the representation of knowledge, they aren’t necessarily technology specialists. However, instructors and librarians can collaborate with technology specialists to provide that expertise. While librarians can locate online resources—general ones such as Lynda.com or tool-specific guidance—technology specialists can quickly identify digital resources that teach technical skills (my note: in this case IMS). My note: we do not have IDs, another years-long reminder to middle and upper management. Many instructors and librarians have not had formal courses on instructional design, so collaborations can provide an authentic means to gain competency in this process.

My note: for years, Tom and I have tried to make SCSU aware of this combo –

Instructors likely have high content knowledge (CK) and satisfactory technological content knowledge (TCK) and technological knowledge (TK) for personal use. But even though newer instructors acquire pedagogical knowledge (PK), pedagogical content knowledge (PCK), and technological pedagogical knowledge (TPK) early in their careers, veteran instructors may not have received this training. The same limitations can apply to librarians, but technology has become more central in their professional lives. Librarians usually have strong one-to-one instruction skills (an aspect of PK), but until recently they were less likely to have instructional design knowledge. ICT literacy constitutes part of their CK, at least for newly minted professionals. Instructional designers are strong in TK, PK, and TPK, and the level of their CK (and TCK and TPK) will depend on their academic background. And technology specialists have the corner on TK and TCK (and hopefully TPK if they are working in educational settings), but they may not have deep knowledge about ICT literacy.

Therefore, an ideal team for ICT literacy integration consists of the instructor, the librarian, the instructional designer, and the technology specialist. Each member can contribute expertise and cross-train the teammates. Eventually, the instructor can carry the load of ICT literacy, with the benefit of specific just-in-time support from the librarian and instructional designer.
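The role-by-role description above can be restated as a small lookup table, which is one way to do the "map their knowledge onto TPACK" exercise. The sketch below is only an illustration: the dictionary is a simplified reading of the paragraph (strengths the text states conditionally, such as "hopefully TPK", are left out), and the names `ROLE_STRENGTHS` and `uncovered` are hypothetical.

```python
# Illustrative only: a simplified reading of the paragraph above,
# not data taken from the article.
TPACK_COMPONENTS = {"CK", "PK", "TK", "PCK", "TCK", "TPK"}

# Assumption: only strengths stated without qualification are listed.
# Note that the librarian's CK refers to ICT literacy, not the course subject.
ROLE_STRENGTHS = {
    "instructor": {"CK", "TCK", "TK"},
    "librarian": {"PK", "CK"},
    "instructional designer": {"TK", "PK", "TPK"},
    "technology specialist": {"TK", "TCK"},
}


def uncovered(team):
    """Return the TPACK components that no role in `team` is listed as strong in."""
    covered = set()
    for role in team:
        covered |= ROLE_STRENGTHS[role]
    return TPACK_COMPONENTS - covered


# Gaps for an instructor working alone versus the full four-role team.
print(sorted(uncovered(["instructor"])))
print(sorted(uncovered(list(ROLE_STRENGTHS))))
```

Under this encoding the gaps shrink as roles are added, which is the point of the team argument. What remains uncovered depends entirely on how the paragraph is encoded, for example on whether the instructor is counted as having the PCK the text attributes to newer instructors.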

My note: I have been working for more than six years as an embedded librarian in the doctoral cohort and have made the current library administrator aware of my work (without any response), as well as providing a lengthy bibliography (e.g. https://blog.stcloudstate.edu/ims/2017/08/24/embedded-librarian-qualifications/). I have also had a meeting with the current SOE administrator and the library administrator (without any response).

I also have delivered discussions to other institutions (https://blog.stcloudstate.edu/ims/2018/04/12/embedded-librarian-and-gamification-in-libraries/)

Librarians should seriously consider TPACK as a way to embed themselves into the classroom to incorporate information and ICT literacy.

+++++++++++++

more about academic library in this IMS blog

https://blog.stcloudstate.edu/ims?s=academic+library

more on SAMR and TRACK models in this IMS blog

https://blog.stcloudstate.edu/ims/2018/05/17/transform-education-digital-tools/

https://blog.stcloudstate.edu/ims/2015/07/29/mn-esummit-2015/

Makransky, G., & Lilleholt, L. (2018). A structural equation modeling investigation of the emotional value of immersive virtual reality in education. Educational Technology Research and Development, 66(5), 1141–1164.

https://doi.org/10.1007/s11423-018-9581-2

an affective path in which immersion predicted presence and positive emotions, and a cognitive path in which immersion fostered a positive cognitive value of the task in line with the control value theory of achievement emotions.

business analyses and reports (e.g., Belini et al. 2016; Greenlight and Roadtovr 2016) predict that virtual reality (VR) could be the biggest future computing platform of all time.

better understanding of the utility and impact of VR when it is applied in an educational context.

several different VR systems exist, including cave automatic virtual environment (CAVE), head mounted displays (HMD) and desktop VR. CAVE is a projection-based VR system with display-screen faces surrounding the user (Cruz-Neira et al. 1992). As the user moves around within the bounds of the CAVE, the correct perspective and stereo projections of the VE are displayed on the screens. The user wears 3D glasses inside the CAVE to see 3D structures created by the CAVE, thus allowing for a very lifelike experience. HMD usually consist of a pair of head mounted goggles with two LCD screens portraying the VE by obtaining the user’s head orientation and position from a tracking system (Sousa Santos et al. 2008). HMD may present the same image to both eyes (monoscopic), or two separate images (stereoscopic) making depth perception possible. Like the CAVE, HMD offers a very realistic and lifelike experience by allowing the user to be completely surrounded by the VE. As opposed to CAVE and HMD, desktop VR does not allow the user to be surrounded by the VE. Instead desktop VR enables the user to interact with a VE displayed on a computer monitor using keyboard, mouse, joystick or touch screen (Lee and Wong 2014; Lee et al. 2010).

the use of simulations results in at least as good or better cognitive outcomes and attitudes toward learning than do more traditional teaching methods (Bayraktar 2000; Rutten et al. 2012; Smetana and Bell 2012; Vogel et al. 2006). However, a recent report concludes that there are still many questions that need to be answered regarding the value of simulations in education (National Research Council 2011). In the past, virtual learning simulations were primarily accessed through desktop VR. With the increased use of immersive VR it is now possible to obtain a much higher level of immersion in the virtual world, which enhances many virtual experiences (Blascovich and Bailenson 2011).

an understanding of how to harness the emotional appeal of e-learning tools is a central issue for learning and instruction, since research shows that initial situational interest can be a first step in promoting learning

several educational theories that describe the affective, emotional, and motivational factors that play a role in multimedia learning which are relevant for understanding the role of immersion in VR learning environments.

the cognitive-affective theory of learning with media (Moreno and Mayer 2007), and the integrated cognitive affective model of learning with multimedia (ICALM; Plass and Kaplan 2016)

control-value theory of achievement emotions (CVTAE)

https://psycnet.apa.org/record/2014-09239-007

Presence, intrinsic motivation, enjoyment, and control and active learning are the affective factors used in this study. definitions

The sample consisted of 104 students (39 females and 65 males; average age = 23.8 years) from a large European university.

immersive VR (Samsung Gear VR with Samsung Galaxy S6) and the desktop VR version of a virtual laboratory simulation (on a standard computer). The participants were randomly assigned to two groups: the first used the immersive VR followed by the desktop VR version, and the second used the two platforms in the opposite sequence.
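The two-group, opposite-order design described above is a counterbalanced crossover. A minimal sketch of such an assignment follows. It is not the authors' procedure: the function name `assign_crossover`, the fixed seed, and the equal split into two groups of 52 are assumptions made for illustration (the excerpt does not report group sizes).

```python
import random


def assign_crossover(participant_ids, seed=None):
    """Randomly split participants into two groups that use the platforms in opposite order."""
    rng = random.Random(seed)  # seeded only so the illustration is repeatable
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2  # assumption: groups of (near-)equal size
    orders = {}
    for pid in shuffled[:half]:
        orders[pid] = ("immersive VR", "desktop VR")
    for pid in shuffled[half:]:
        orders[pid] = ("desktop VR", "immersive VR")
    return orders


# 104 hypothetical participant IDs, matching the sample size quoted above.
orders = assign_crossover(range(104), seed=0)
immersive_first = sum(1 for order in orders.values() if order[0] == "immersive VR")
print(immersive_first, len(orders) - immersive_first)
```

Because every participant uses both platforms, each person serves as their own comparison, and reversing the order for half the sample helps separate platform effects from order effects.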

The VR learning simulation used in this experiment was developed by the company Labster and designed to facilitate learning within the field of biology at a university level. The VR simulation was based on a realistic murder case in which the participants were required to investigate a crime scene, collect blood samples and perform DNA analysis in a high-tech laboratory in order to identify and implicate the murderer

we conclude that the emotional value of the immersive VR version of the learning simulation is significantly greater than the desktop VR version. This is a major empirical contribution of this study.

Imagining a better world at the Unity for Humanity Summit

https://blog.unity.com/news/imagining-a-better-world-at-the-unity-for-humanity-summit

On October 12, the Unity for Humanity Summit will once again take place virtually to celebrate creators who are using real-time 3D (RT3D) for social impact.

Since the inaugural Unity for Humanity Summit in October 2020, we have awarded more than $2.5 million to support current and future social impact creators who are building RT3D experiences that have a positive and meaningful impact on society and the planet through the Unity for Humanity program and the Unity Charitable Fund.

four content tracks and some of the exciting topics and workshops

Education and Inclusive Economic Opportunity

- Workshop: How to make your first Unity build

- Different global models for creating inclusive economic opportunity

- How to leverage real-time 3D for workforce development and training

Environment and Sustainability

- What the gaming industry can do to support a healthier planet: From decarbonization of gaming operations to activating users

- Visualizing the future of climate change

- How the fashion industry is innovating for sustainability using RT3D

Digital Health and Wellbeing

- Using AI for social good in and beyond the age of COVID

- The role RT3D can play in the advancement of patient care, healthcare training, and more

- Funding healthcare innovations and real-time 3D tools

Tools for Changemakers

- Monetizing your social impact mobile game

- An AR tutorial taught through multiple use cases

- Pitching brands to support your impact projects

- Visual scripting workshop

++++++++++++++

more on Unity in this IMS blog

https://blog.stcloudstate.edu/ims?s=unity

https://www.techlearning.com/news/updating-blooms-taxonomy-for-digital-learning

The use of technology has been integrated into the model, creating what is now known as Bloom’s Digital Taxonomy.

+++++++++++++++++++++

more on Bloom Digital Taxonomy in this IMS blog:

https://blog.stcloudstate.edu/ims?s=bloom+digital+taxonomy

On June 25, Brian Beatty was a guest on Bryan Alexander’s “Future Forum.”

He will be a guest again this coming Thursday, September 24, 2020, 1PM Central.

Here is the recording from the June 25th session:

https://blog.stcloudstate.edu/ims/2020/06/25/hyflex-model/

On June 25, it was agreed that Brian would bring updates and new developments, considering the pandemic's impact on that mode of teaching.

To RSVP ahead of time, or to jump straight in, just click these links:

https://shindig.com/login/event/hyflex2

The EDUCAUSE XR (Extended Reality) Community Group Listserv <XR@LISTSERV.EDUCAUSE.EDU>

Greetings to you all! Presently, I am undertaking a masters course in “Instruction Design and Technology” which has two components: Coursework and Research. For my research, I would like to pursue it in the field of Augmented Reality (AR) and Mobile Learning. I am thinking of an idea that could lead to collaboration among students and directly translate into enhanced learning for students while using an AR application. However, I am having a problem with coming up with an application because I don’t have any computing background. This, in turn, is affecting my ability to come up with a good research topic.

I teach gross anatomy and histology to many students of health sciences at Mbarara University, and this is where I feel I could make a contribution to learning anatomy using AR since almost all students own smartphones. I, therefore, kindly request you to let me know which of the freely-available AR app authoring tools could help me in this regard. In addition, I request for your suggestions regarding which research area(s) I should pursue in order to come up with a good research topic.

Hoping to hear from you soon.

Grace Muwanga Department of Anatomy Mbarara University Uganda (East Africa)

++++++++++++

matthew.macvey@journalism.cuny.edu

Dear Grace, a few augmented reality tools which I’ve found are relatively easy to get started with:

For iOS, iPhone, iPad: https://www.torch.app/ or https://www.adobe.com/products/aero.html

To create AR that will work on social platforms like Facebook and Snapchat (and will work on Android, iOS) try https://sparkar.facebook.com/ar-studio/ or https://lensstudio.snapchat.com/ . You’ll want to look at the tutorials for plane tracking or target tracking https://sparkar.facebook.com/ar-studio/learn/documentation/tracking-people-and-places/effects-in-surroundings/

https://lensstudio.snapchat.com/guides/general/tracking/tracking-modes/

One limitation with Spark and Snap is that file sizes need to be small.

If you’re interested in creating AR experiences that work directly in a web browser and are up for writing some markup code, look at A-Frame AR https://aframe.io/blog/webxr-ar-module/.

For finding and hosting 3D models you can look at Sketchfab and Google Poly. I think both have many examples of anatomy.

Best, Matt

+++++++++++

“Beth L. Ritter-Guth” <britter-guth@NORTHAMPTON.EDU>

I’ve been using Roar. They have a $99 a year license.

++++++++++++

I have recently been experimenting with an AR development tool called Zappar, which I like because the end users do not have to download an app to view the AR content. Codes can be scanned either with the Zappar app or at web.zappar.com.

From a development standpoint, Zappar has an easy to use drag-and-drop interface called ZapWorks Designer that will help you build basic AR experiences quickly, but for a more complicated, more interactive use case such as learning anatomy, you will probably need ZapWorks Studio, which will have much more of a learning curve. The Hobby (non-commercial) license is free if you are interested in trying it out.

You can check out an AR anatomy mini-lesson with models of the human brain, liver, and heart using ZapWorks here: https://www.zappar.com/campaigns/secrets-human-body/. Even if you choose to go with a different development tool, this example might help nail down ideas for your own project.

Hope this helps,

Brighten

Brighten Jelke Academic Assistant for Virtual Technology Lake Forest College bjelke@lakeforest.edu Office: DO 233 | Phone: 847-735-5168

http://www.lakeforest.edu/academics/resources/innovationspaces/virtualspace.php

+++++++++++++++++

more on XR in education in this IMS blog

https://blog.stcloudstate.edu/ims?s=xr+education

VR Takes The Stage As Conferences Cancel

https://www.forbes.com/sites/charliefink/2020/03/11/vr-takes-the-stage-as-conferences-cancel/

Katie Kelly, Program Owner at AltspaceVR. “I did a rough estimate and factoring in the travel time and CO2e estimates that would have been spent. This summit took about 9 thousand cars off the road for the week of the summit and saved attendees around 5 million miles of travel. So whether we’re combating a global outbreak, climate change or remote work – there’s a lot that AltspaceVR and other VR platforms can do to help.”

HTC is moving its annual Vive Ecosystem Conference (VEC) in China to a virtual world built by Engage, a product of Immersive VR Education.

The crisis has put new wind in the sails of the first virtual world, Second Life, which continues to thrive. “We are seeing increased interest in Second Life as it is a safe place for people and organizations to socialize and work during this time of great anxiety and social distancing,” said Ebbe Altberg, CEO of Linden Lab, which created and operates Second Life.

VictoryXR is an AR & VR school curriculum provider that also uses the Engage platform. They are now in the process of becoming accredited in Iowa and California to become an online virtual reality school (as opposed to a virtual school on a platform like Second Life, which is PC based).

Exp Realty is a virtual, cloud-based real estate brokerage founded nearly a decade ago, similar to Second Life. It is also optimized for PCs and laptops. The virtual real estate company has more than 27,000 agents who log on to “eXp World.” The technology is provided by VirBELA, which also builds virtual tools for universities and virtual classes like Coursera.

Glue, based in Helsinki, Finland, today introduced significant upgrades to its virtual world platform, Glue Team Space. Team Space is immersive 3D environments optimized for teams of up to twenty people.

Spatial.io, a new remote conferencing system that works with every device and creates convincing avatars who can collaborate in the virtual space where participants can share whiteboards, post-its, videos and 3D models.